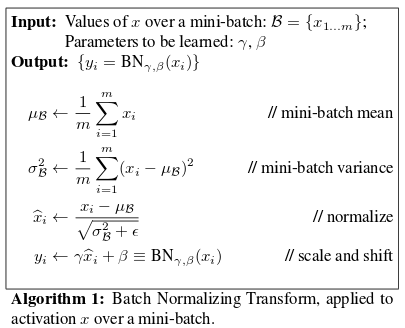

Normalize each neuron's activations across the batch dimension so that they follow a unit Gaussian distribution (zero mean, unit variance)

A normalization layer is placed after each layer so that the distribution of activations does not drift as it passes through the network
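As a reference, the per-feature transform can be written out as follows. This is the standard formulation from the Ioffe and Szegedy paper rather than anything specific to this note; the symbols $m$ (mini-batch size), $\epsilon$ (small constant for numerical stability), and $\gamma$, $\beta$ (learnable scale and shift) are introduced here for illustration.

$$
\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i, \qquad
\sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m} (x_i - \mu_B)^2
$$

$$
\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}, \qquad
y_i = \gamma \hat{x}_i + \beta
$$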

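Compared with Layer Normalization, only one dimension changes: the statistics are computed over the batch dimension instead of the feature dimension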
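A minimal sketch of that axis difference, in dependency-free Python. The helper names (`normalize`, `batch_norm`, `layer_norm`) and the `eps` default are assumptions made for illustration, and the learnable scale and shift are left out to keep the comparison short.

```python
import math

def normalize(values, eps=1e-5):
    """Shift and scale a list of floats to zero mean and roughly unit variance."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)  # biased variance
    return [(v - mean) / math.sqrt(var + eps) for v in values]

def batch_norm(x, eps=1e-5):
    """x is a list of rows shaped (batch, features).
    Each feature column is normalized over the batch."""
    columns = [normalize(list(col), eps) for col in zip(*x)]
    return [list(row) for row in zip(*columns)]  # transpose back to rows

def layer_norm(x, eps=1e-5):
    """Each example (row) is normalized over its own features."""
    return [normalize(row, eps) for row in x]

# 4 examples, 3 features with very different scales
x = [[1.0, 10.0, 100.0],
     [2.0, 20.0, 200.0],
     [3.0, 30.0, 300.0],
     [4.0, 40.0, 400.0]]

bn = batch_norm(x)  # every column now has mean close to 0
ln = layer_norm(x)  # every row now has mean close to 0
```

The only difference between the two functions is which axis `normalize` is applied along, which is why the batch version depends on the other examples in the mini-batch and the layer version does not.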

Limitations

- depends on the mini-batch size (statistics become noisy when the batch is small)

- hard to apply to RNNs
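The batch-size dependence is easiest to see in the extreme case of a single example. The sketch below assumes training-mode statistics computed from the current mini-batch only; the function name `batch_norm_feature` is hypothetical.

```python
import math

def batch_norm_feature(values, eps=1e-5):
    """Normalize one feature over the batch using the batch's own statistics."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + eps) for v in values]

# With a batch of one, the mean equals the value itself and the variance is 0,
# so the input is erased regardless of what it was.
single_a = batch_norm_feature([3.7])
single_b = batch_norm_feature([-120.0])

# With a larger batch the relative differences between examples survive.
larger = batch_norm_feature([1.0, 2.0, 3.0, 4.0])
```

Both single-example calls return the same output, which shows that the result depends on the composition of the mini-batch and not only on the example being normalized.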

Batch Normalization Methods

Batch Normalization

gaussian37's blog

https://gaussian37.github.io/dl-concept-batchnorm/

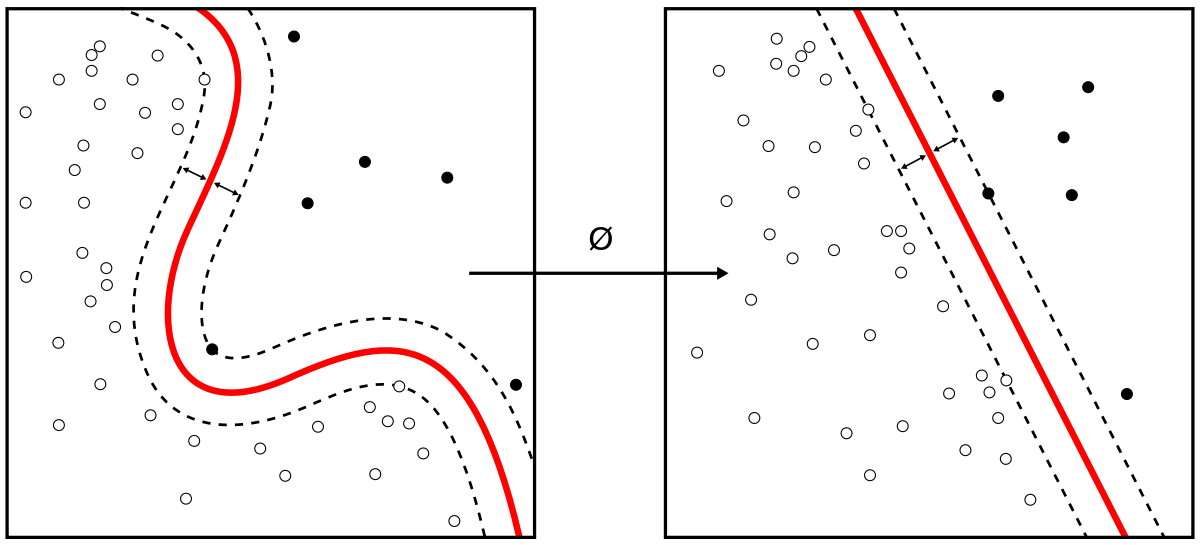

Batch normalization

Batch normalization is a method used to make training of artificial neural networks faster and more stable through normalization of the layers' inputs by re-centering and re-scaling. It was proposed by Sergey Ioffe and Christian Szegedy in 2015.

https://en.wikipedia.org/wiki/Batch_normalization

Google Patent

Batch normalization layers

Methods, systems, and apparatus, including computer programs encoded on computer storage media, for processing inputs using a neural network system that includes a batch normalization layer. One of the methods includes receiving a respective first layer output for each training example in the batch; computing a plurality of normalization statistics for the batch from the first layer outputs; normalizing each component of each first layer output using the normalization statistics to generate a respective normalized layer output for each training example in the batch; generating a respective batch normalization layer output for each of the training examples from the normalized layer outputs; and providing the batch normalization layer output as an input to the second neural network layer.

https://patents.google.com/patent/US20160217368A1/en

Seonglae Cho
