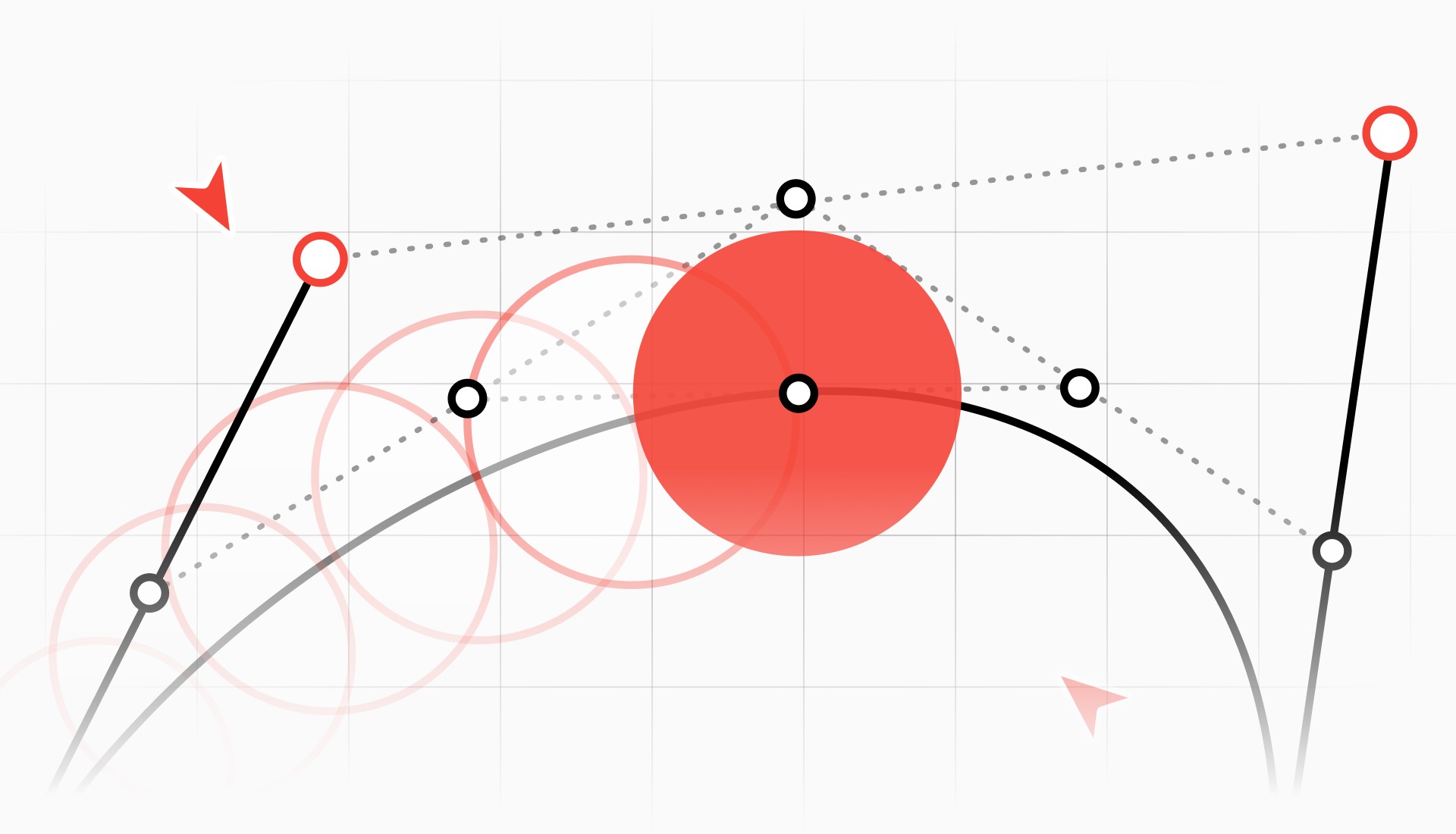

A Bézier curve is a parametric curve: every coordinate of a point on the curve is a function of a single independent variable t, which runs from 0 to 1.

Unlike a polynomial curve fitted through the data points, which can easily diverge, a Bézier curve is built by interpolating between its control points and therefore draws a smoother curve.
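The interpolation idea can be sketched with de Casteljau's algorithm, which evaluates a Bézier curve by repeatedly taking linear interpolations between neighbouring control points until one point is left. This is a minimal sketch, not taken from the linked sources; the names `lerp` and `de_casteljau` and the sample control points are assumptions chosen for illustration.

```python
def lerp(p, q, t):
    """Linear interpolation between points p and q at parameter t."""
    return tuple((1 - t) * a + t * b for a, b in zip(p, q))


def de_casteljau(control_points, t):
    """Evaluate a Bézier curve at t by repeated linear interpolation.

    control_points: list of coordinate tuples (hypothetical input format).
    t: parameter, normally in [0, 1].
    """
    points = [tuple(float(c) for c in p) for p in control_points]
    # Each pass replaces n points with n - 1 interpolated points.
    while len(points) > 1:
        points = [lerp(points[i], points[i + 1], t)
                  for i in range(len(points) - 1)]
    return points[0]


if __name__ == "__main__":
    # Quadratic curve with three illustrative control points.
    control = [(0, 0), (1, 2), (2, 0)]
    for t in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(t, de_casteljau(control, t))
```

The curve starts at the first control point (t = 0) and ends at the last one (t = 1); the points in between only pull the curve toward them.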

Bézier curve Notion

Bézier Curves - and the logic behind them | Richard Ekwonye

The logic behind Bézier Curves used in CSS animations and visual elements.

https://blog.richardekwonye.com/bezier-curves

The Bernstein basis polynomials show how much each control point contributes to the interpolated curve at a given t.
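As a rough illustration, a degree-n Bézier curve can be written as a weighted sum of its control points, where the weight of point i is the Bernstein polynomial C(n, i) · t^i · (1 − t)^(n − i). The sketch below assumes this standard definition; the function names `bernstein` and `bezier_point` and the sample control points are hypothetical.

```python
from math import comb


def bernstein(n, i, t):
    """Bernstein basis polynomial: weight of control point i at parameter t."""
    return comb(n, i) * t**i * (1 - t) ** (n - i)


def bezier_point(control_points, t):
    """Evaluate a Bézier curve as a Bernstein-weighted sum of control points."""
    n = len(control_points) - 1  # degree of the curve
    weights = [bernstein(n, i, t) for i in range(n + 1)]
    dim = len(control_points[0])
    return tuple(
        sum(w * p[d] for w, p in zip(weights, control_points))
        for d in range(dim)
    )


if __name__ == "__main__":
    control = [(0, 0), (1, 2), (2, 0)]  # illustrative control points
    t = 0.5
    # Contribution of each control point at this t; the weights sum to 1.
    print([bernstein(2, i, t) for i in range(3)])
    print(bezier_point(control, t))
```

Because the weights are non-negative on [0, 1] and always sum to 1, each curve point is a weighted average of the control points.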

Kolmogorov-Arnold Networks: MLP vs KAN, Math, B-Splines, Universal Approximation Theorem

In this video, I will be explaining Kolmogorov-Arnold Networks, a new type of network that was presented in the paper "KAN: Kolmogorov-Arnold Networks" by Liu et al.

I will start the video by reviewing Multilayer Perceptrons, to show how the typical Linear layer works in a neural network. I will then introduce the concept of data fitting, which is necessary to understand Bézier Curves and then B-Splines.

Before introducing Kolmogorov-Arnold Networks, I will also explain what the Universal Approximation Theorem for Neural Networks is, along with its equivalent for Kolmogorov-Arnold Networks, the Kolmogorov-Arnold Representation Theorem.

In the final part of the video, I will explain the structure of this new type of network, by deriving its structure step by step from the formula of the Kolmogorov-Arnold Representation Theorem, while comparing it with Multilayer Perceptrons at the same time.

We will also explore some properties of this type of network, for example the easy interpretability and the possibility to perform continual learning.

Paper: https://arxiv.org/abs/2404.19756

Slides PDF: https://github.com/hkproj/kan-notes

Chapters

00:00:00 - Introduction

00:01:10 - Multilayer Perceptron

00:11:08 - Introduction to data fitting

00:15:36 - Bézier Curves

00:28:12 - B-Splines

00:40:42 - Universal Approximation Theorem

00:45:10 - Kolmogorov-Arnold Representation Theorem

00:46:17 - Kolmogorov-Arnold Networks

00:51:55 - MLP vs KAN

00:55:20 - Learnable functions

00:58:06 - Parameters count

01:00:44 - Grid extension

01:03:37 - Interpretability

01:10:42 - Continual learning

https://youtu.be/-PFIkkwWdnM?si=j6Q0bYFukESvZmqU

Seonglae Cho
