Cerebras

Cerebras is the go-to platform for fast and effortless AI training. Learn more at cerebras.ai.

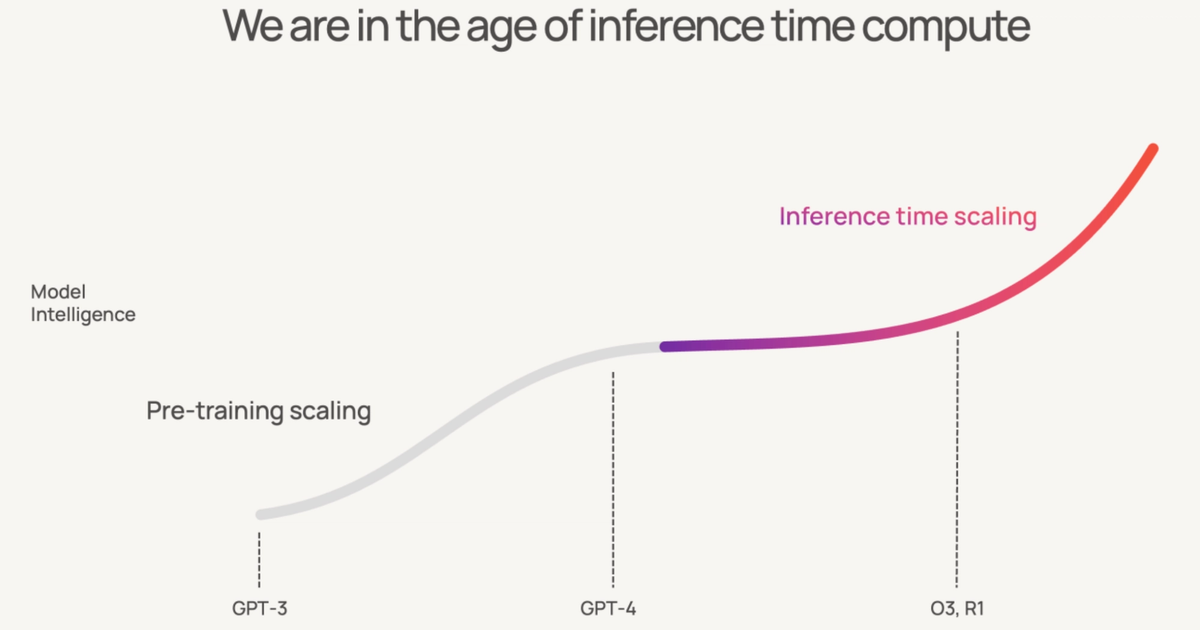

https://www.cerebras.ai/blog/the-cerebras-scaling-law-faster-inference-is-smarter-ai

OpenAI

OpenAI partners with Cerebras

OpenAI is partnering with Cerebras to add 750MW of ultra low-latency AI compute to our platform.

https://openai.com/index/cerebras-partnership/

2,100 tokens/s

Cerebras Inference now 3x faster: Llama3.1-70B breaks 2,100 tokens/s - Cerebras

Today we’re announcing the biggest update to Cerebras […]

https://cerebras.ai/blog/cerebras-inference-3x-faster

Like Groq, Cerebras is focusing on serving AI inference through a server API.

Cerebras Systems throws down gauntlet to Nvidia with launch of ‘world’s fastest’ AI inference service

https://siliconangle.com/2024/08/27/cerebras-systems-throws-down-gauntlet-to-nvidia-launch-of-worlds-fastest-ai-inference-service/

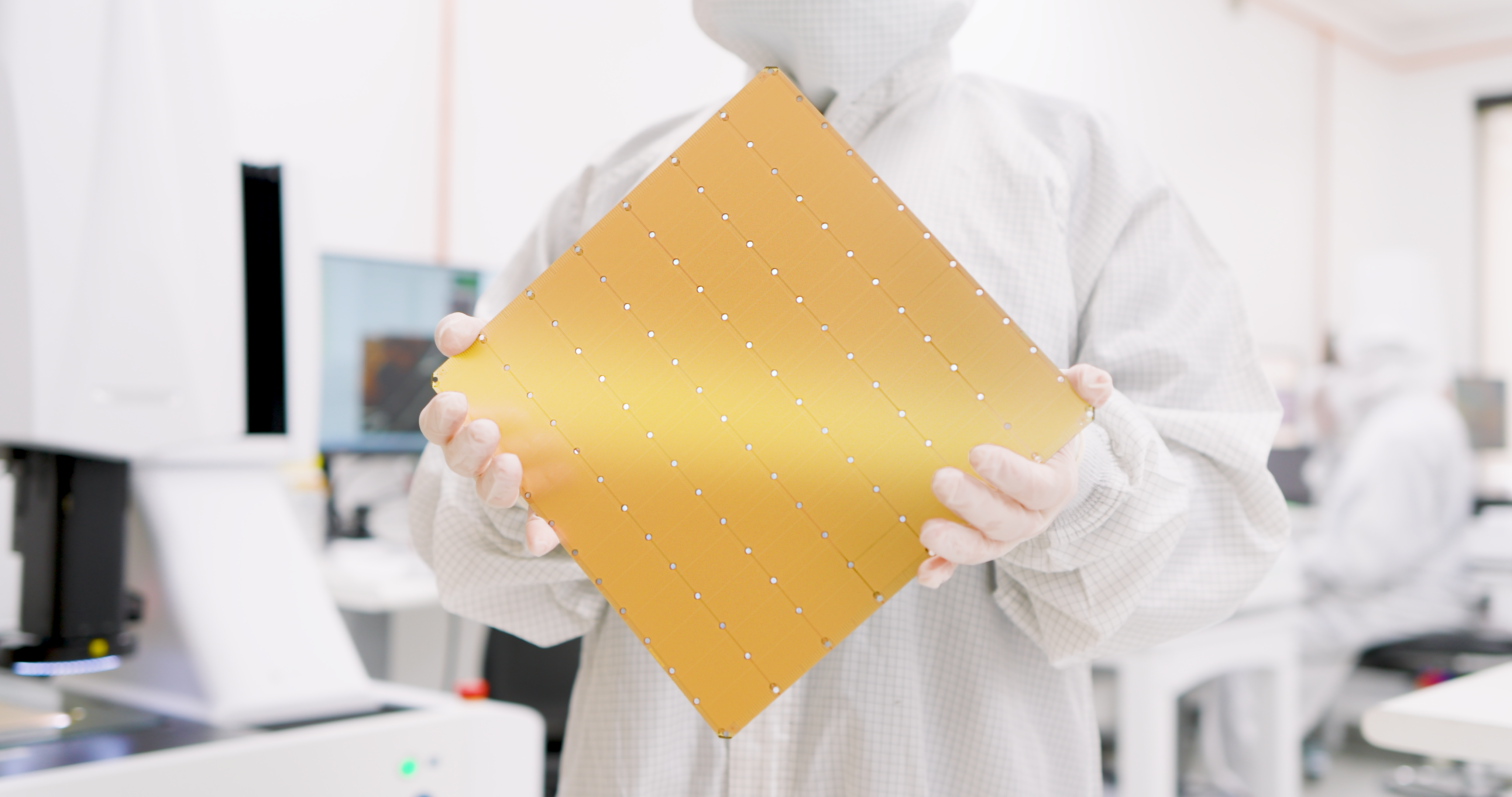

Giant Chips Give Supercomputers a Run for Their Money

This dinner-plate sized chip can cut the energy cost of inference by a factor of three for large language models like ChatGPT. It can also do scientific calculations in the field of molecular dynamics at an unprecedented speed, allowing for simulation of new regimes relevant for fusion energy.

https://spectrum.ieee.org/cerebras-wafer-scale-engine

Seonglae Cho
