Gibbs' inequality is a statement about the entropy of a discrete probability distribution.

Several other bounds on the entropy of probability distributions are derived from Gibbs' inequality.

It is equivalent to the non-negativity of the KL divergence (KL ≥ 0).
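
For reference, the inequality can be written out in its standard form. The symbols $p_i$, $q_i$, and $n$ are notation chosen here and are not taken from the note. For two discrete distributions $P = (p_1, \dots, p_n)$ and $Q = (q_1, \dots, q_n)$:

$$
-\sum_{i=1}^{n} p_i \log p_i \;\le\; -\sum_{i=1}^{n} p_i \log q_i ,
\qquad \text{with equality if and only if } p_i = q_i \text{ for all } i .
$$

Moving everything to one side gives the KL form:

$$
D_{\mathrm{KL}}(P \,\|\, Q) \;=\; \sum_{i=1}^{n} p_i \log \frac{p_i}{q_i} \;\ge\; 0 .
$$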

Gibbs' inequality

In information theory, Gibbs' inequality is a statement about the information entropy of a discrete probability distribution. Several other bounds on the entropy of probability distributions are derived from Gibbs' inequality, including Fano's inequality.
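
A small numerical check can make the statement concrete. The sketch below is only an illustration: the function names and the example distributions `p` and `q` are made up for this note. It computes the entropy of `p`, the cross-entropy of `p` against `q`, and their difference, which is the KL divergence.

```python
import math

def entropy(p):
    """Shannon entropy H(P) in nats; zero-probability terms contribute 0."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """Cross-entropy H(P, Q) in nats; infinite if q_i = 0 where p_i > 0."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue  # 0 * log(q_i) is taken as 0
        if qi == 0:
            return math.inf
        total -= pi * math.log(qi)
    return total

def kl_divergence(p, q):
    """KL(P || Q) = H(P, Q) - H(P); Gibbs' inequality says this is >= 0."""
    return cross_entropy(p, q) - entropy(p)

# Hypothetical example distributions (each sums to 1).
p = [0.5, 0.25, 0.25]
q = [0.2, 0.3, 0.5]

print("H(P)      =", entropy(p))
print("H(P, Q)   =", cross_entropy(p, q))
print("KL(P||Q)  =", kl_divergence(p, q))
print("KL(P||P)  =", kl_divergence(p, p))
```

With these inputs the entropy should not exceed the cross-entropy, and the divergence of `p` from itself should be zero up to floating-point error, matching the equality case.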

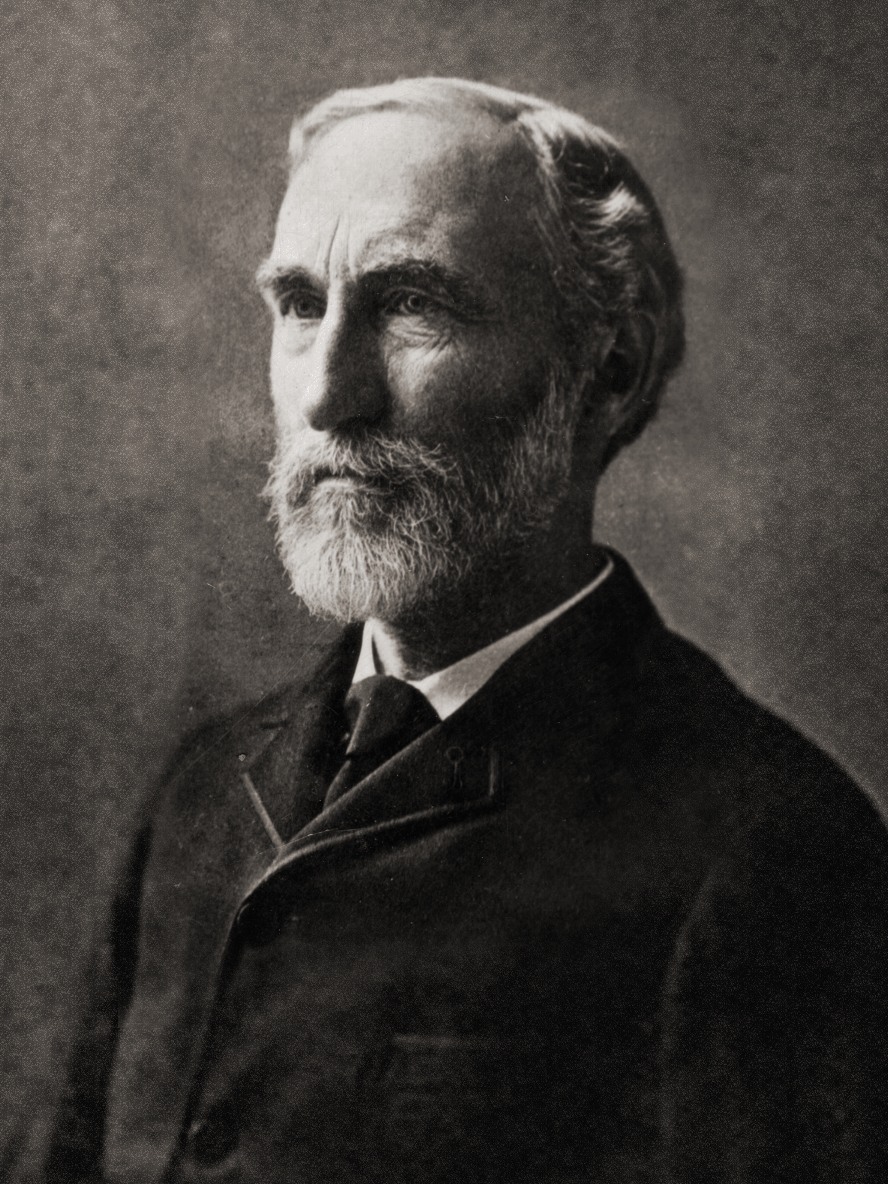

It was first presented by J. Willard Gibbs in the 19th century.

https://en.wikipedia.org/wiki/Gibbs'_inequality

Seonglae Cho
