Gated Linear Unit

A gating mechanism usually means a point-wise multiplication of a signal by the output of a gate function that controls how much of the signal flows through. The gate function does not have to be a sigmoid, but a sigmoid is the usual choice.

Unlike a Gating Mechanism, the GLU has no weights.

GLU functions

Papers with Code - GLU Explained

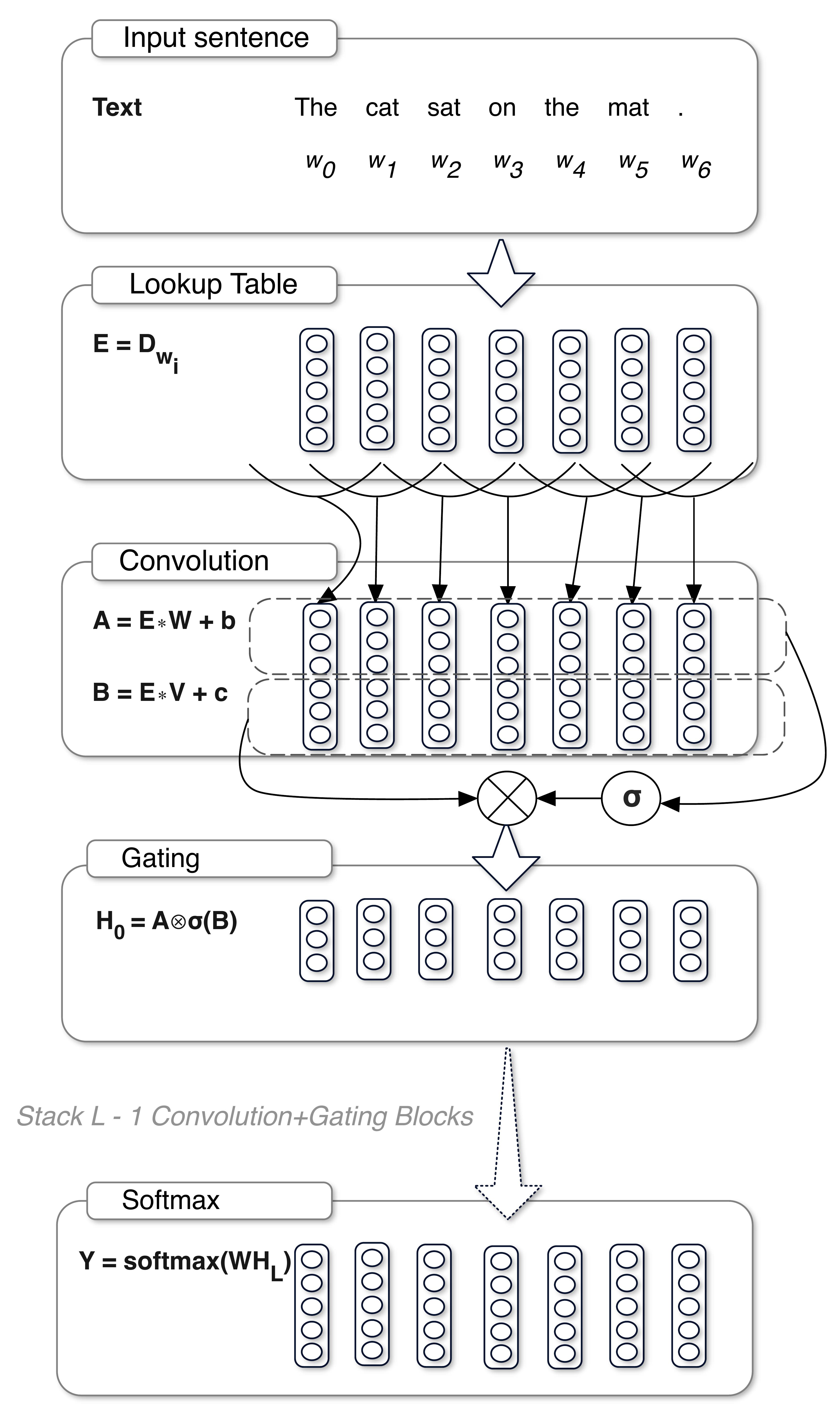

A Gated Linear Unit, or GLU, computes: $$ \text{GLU}\left(a, b\right) = a\otimes \sigma\left(b\right) $$ where $\otimes$ is the element-wise product and $\sigma$ is the sigmoid function. It is used in natural language processing architectures such as the Gated CNN, where $b$ is the gate that controls what information from $a$ is passed up to the following layer. Intuitively, for a language modeling task, the gating mechanism allows selection of the words or features that are important for predicting the next word. The GLU is non-linear, but it keeps a linear path for the gradient, which mitigates the vanishing gradient problem.

https://paperswithcode.com/method/glu
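
The formula GLU(a, b) = a ⊗ σ(b) can be sketched in a few lines of plain Python. This is only an illustrative sketch, not code from the linked page: the function names `sigmoid` and `glu` are chosen here, and `a` and `b` are assumed to be equal-length lists of floats.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def glu(a: list[float], b: list[float]) -> list[float]:
    """GLU(a, b) = a * sigmoid(b), applied element-wise.

    `a` carries the information, `b` is the gate. No weights are involved.
    """
    if len(a) != len(b):
        raise ValueError("a and b must have the same length")
    return [a_i * sigmoid(b_i) for a_i, b_i in zip(a, b)]


# A gate value of 0 lets exactly half of the signal through, since sigmoid(0) = 0.5.
print(glu([1.0, 2.0], [0.0, 0.0]))
# A strongly negative gate value nearly blocks the signal; a strongly positive one passes it.
print(glu([3.0, 3.0], [-20.0, 20.0]))
```

In a layer such as the Gated CNN, `a` and `b` would typically come from two learned projections of the same input; that part is left out here.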

SwiGLU Activation Function Explained

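Hello, today I'd like to review the SwiGLU activation function. Many papers have adopted SwiGLU, including LLAMA 2, recently released by Meta, and EVA-02, which has recently shown strong performance in vision. When studying deep learning, activation functions can seem like a minor detail, but in practice they are not: in the worst case they can decide whether a model trains properly at all. Paper: GLU Variants Improve Transformer. SwiGLU background: SwiGLU is a function made by combining two activation functions, Swish + GLU. Let's look at why such a function was designed, one piece at a time, and then put the pieces together. Swish Activatio..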

https://thecho7.tistory.com/entry/SwiGLU-Activation-Function-설명
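
As a rough sketch of "Swish + GLU", the code below follows the definition in GLU Variants Improve Transformer, SwiGLU(x) = Swish_β(xW + b) ⊗ (xV + c), using plain Python lists. The helper names (`swish`, `linear`, `swiglu`) and the tiny example weights are made up for illustration; real implementations use tensor libraries and often drop the bias terms.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def swish(z: float, beta: float = 1.0) -> float:
    """Swish_beta(z) = z * sigmoid(beta * z)."""
    return z * sigmoid(beta * z)


def linear(x, weight, bias):
    """Compute x @ weight + bias for a vector x.

    weight is a list of d_in rows, each with d_out columns (illustrative layout).
    """
    return [
        sum(x_i * row[j] for x_i, row in zip(x, weight)) + bias[j]
        for j in range(len(bias))
    ]


def swiglu(x, W, b, V, c, beta: float = 1.0):
    """SwiGLU(x) = Swish_beta(xW + b) * (xV + c), element-wise product."""
    gate = [swish(z, beta) for z in linear(x, W, b)]  # Swish replaces GLU's sigmoid
    value = linear(x, V, c)                           # the linear, ungated branch
    return [g * v for g, v in zip(gate, value)]


# Hypothetical 2x2 identity weights and zero biases, just to exercise the function.
identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [0.0, 0.0]
print(swiglu([1.0, 2.0], identity, zeros, identity, zeros))
```

Compared with the plain GLU, the only change is the gate function: the sigmoid is swapped for Swish, and both branches are learned projections of the same input `x`.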

Seonglae Cho
