NTK theory

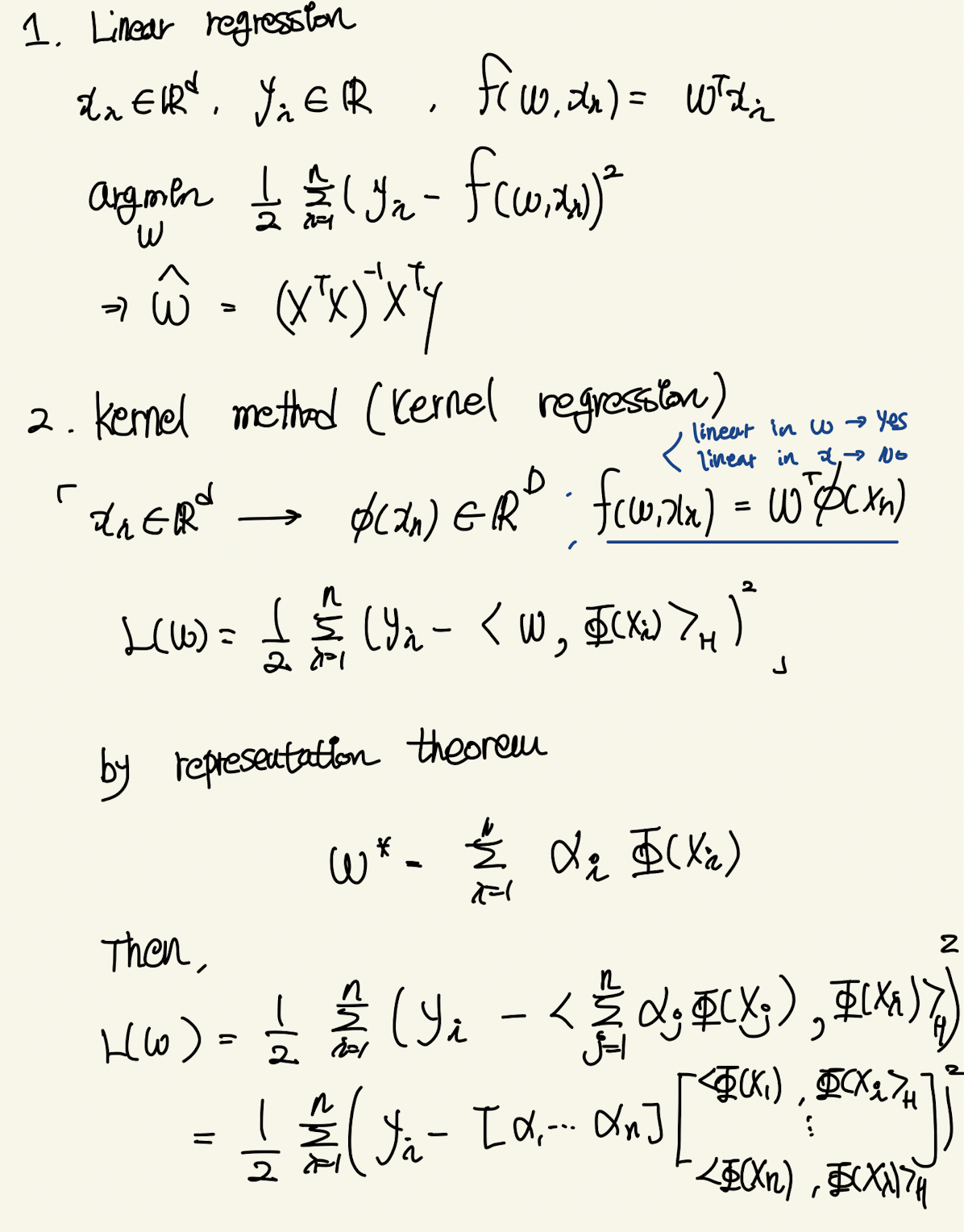

Neural network over-parameterizes but good at generalization which is similar to the kernel method. Neural Network to linear model by Taylor Series expansion approximation which is similar to the Back Propagation. Back propagation’s gradient flow can always find solution to make loss as zero since NTL can convert ANN with infinite nodes (parameters) and epsilon learning rate. It is called "tangent" because the kernel maps how the gradient of the output (y) changes linearly (i.e., in a tangent-like manner) with respect to small changes in the network's weights.

Universal Approximation Theorem explains the results of ability while NTL focuses on gradient flow and training dynamics.

As a result, linearity means that we can write a closed form solution for the training dynamics, and this closed form solution depends critically on the neural tangent kernel. Each element of the neural tangent kernel consists of the inner product of the vectors of derivatives for a pair of training data examples. This can be calculated for any network and we call this the empirical NTK. If we let the width become infinite, then we can get closed-form solutions, which are referred to as analytical NTKs.

Fourier featuring

Analyzing gradient descent using this, we can understand the convergence properties of neural networks. By decomposing the dynamics of the output using the chain-rule under the least-square loss assumption, we can obtain an ODE composed of NTK. In the over-parameterized regime, the kernel is positive-definite, allowing for eigenvalue decomposition. At this point, the eigenvalue is the convergence rate of the neural network.

It can be seen that Fourier features help NN learn high-frequency information better. Generally, natural data have large magnitudes of low-frequency information and small magnitudes of high-frequency information. Analyzing the NTK of inputs that have passed through Fourier features shows that the eigenvalue falloff is not greater than that of the traditional MLP NTK. This indicates that NN can sufficiently learn up to high frequencies.

Paper

Neural Tangent Kernel: Convergence and Generalization in Neural Networks

At initialization, artificial neural networks (ANNs) are equivalent to Gaussian processes in the infinite-width limit, thus connecting them to kernel methods. We prove that the evolution of an ANN...

https://arxiv.org/abs/1806.07572

Mathematics

Understanding the Neural Tangent Kernel

My attempt at distilling the ideas behind the neural tangent kernel that is making waves in recent theoretical deep learning research.

https://rajatvd.github.io/NTK/

Some Math behind Neural Tangent Kernel

Neural networks are well known to be over-parameterized and can often easily fit data with near-zero training loss with decent generalization performance on test dataset. Although all these parameters are initialized at random, the optimization process can consistently lead to similarly good outcomes. And this is true even when the number of model parameters exceeds the number of training data points. Neural tangent kernel (NTK) (Jacot et al. 2018) is a kernel to explain the evolution of neural networks during training via gradient descent.

https://lilianweng.github.io/posts/2022-09-08-ntk/

The Neural Tangent Kernel - Borealis AI

In this blog, we examine the linear behaviour in infinitely wide networks, and delve into the concept of Neural Tangent Kernel (NTK).

https://www.borealisai.com/research-blogs/the-neural-tangent-kernel/

Korean

Neural Tangent Kernel 리뷰

이번에 리뷰할 Neural Tangent Kernel (NTK) 논문은 NIPS 2018에 실린 논문으로 많은 인용수를 자랑하는 파급력 높은 논문입니다. 하지만 논문을 이해하려면 수학적 배경지식이 많이 필요해서 읽기가 어렵습니다. 다행히 설명을 잘해놓은 외국 블로그([2])가 있어 많은 참고를 하였습니다. 이 논문은 2 hidden layer와 infinite nodes의 neural network는 linear model로 근사하여 생각할 수 있고, 그 덕분에 문제를 convex 하게 만들어 해가 반드시 존재한다는 것을 보여줍니다. 어떻게 linear model로 근사하여 생각할 수 있다는 것인지 차근차근 알아보겠습니다. Taylor Expansion Taylor expansion은 매우 작은 영역에서..

https://simpling.tistory.com/56

(paper review) Neural Tangent Kernel

1. Introduction 저자는 neural network가 over-parameterize 하지만 good-generalization의 결과에 주목하며 kernel-method와의 비슷함을 말한다. 그 이유에 대해 추측해보면, kernel method는 low-

https://velog.io/@ynwag09/paper-review-Neural-Tangent-Kernel

Seonglae Cho

Seonglae Cho