Regularization is a method that accepts a small increase in bias in exchange for a reduction in variance

The concept of regularization was first adopted in the ridge regression model

Unlike plain linear regression, ridge regression minimizes the regularizer as well as the fitting error

Ridge regression = linear regression + L2-norm penalty

Find the minimum by setting the derivative of the cost function with respect to the weights $w$ to zero

- $\lambda$ controls the tradeoff between the fitting error and the regularizer (overfitting vs. underfitting)
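
As a worked equation for the points above, one common way to write the ridge objective is shown below. The notation is an assumption, not taken from these notes: $X$ is the $N \times p$ data matrix, $y$ the target vector, $w$ the weights, and $\lambda \ge 0$ the regularization parameter.

$$
J(w) = \underbrace{\lVert y - Xw \rVert_2^2}_{\text{fitting error}} + \lambda \underbrace{\lVert w \rVert_2^2}_{\text{regularizer}}
$$

With $\lambda = 0$ this reduces to ordinary least squares. A larger $\lambda$ puts more weight on the regularizer and pushes toward underfitting, while a smaller one pushes toward overfitting.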

The names alpha, lambda, regularization parameter, and penalty term are used interchangeably for this coefficient

Ridge regression shrinks the model's coefficients toward zero but does not make them exactly zero.
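
A minimal sketch of this shrinkage, assuming the closed-form ridge solution $w = (X^\top X + \lambda I)^{-1} X^\top y$ with no intercept. The synthetic data, the chosen values of $\lambda$, and the helper name `ridge_fit` are illustrative assumptions, not part of these notes.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge weights: solve (X^T X + lam * I) w = X^T y."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))                  # 50 samples, 5 features (synthetic)
w_true = np.array([3.0, -2.0, 0.5, 0.0, 1.0])
y = X @ w_true + 0.1 * rng.normal(size=50)

lams = [0.0, 1.0, 10.0, 100.0]
norms = [float(np.linalg.norm(ridge_fit(X, y, lam))) for lam in lams]
for lam, norm in zip(lams, norms):
    # The weight norm keeps dropping as lambda grows.
    print(f"lambda={lam:>6}: ||w|| = {norm:.4f}")

# Even with a strong penalty the individual weights are small, not exactly zero.
print(ridge_fit(X, y, 100.0))
```

The printed norms should decrease as $\lambda$ increases, while every entry of the last weight vector stays nonzero.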

Dual solution (dual representation)

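In ridge regression, let $X$ be the $N \times p$ matrix whose rows are the training examples, $y$ the vector of targets, and $w$ the weight vector.

Setting the derivative of the cost function with respect to $w$ to 0 yields the following equation:

$$X^\top X w + \lambda w = X^\top y$$

Alternatively, we can rewrite the equation in terms of $w$:

$$w = \lambda^{-1} X^\top (y - Xw) = X^\top \alpha$$

where $\alpha = \lambda^{-1}(y - Xw)$. $w$ is thus a linear combination of the training examples. Dual representation refers to learning by expressing the model parameters as a linear combination of training samples instead of learning them directly (primal representation).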

The dual representation with proper regularization enables an efficient solution when $p > N$ (a low sample-to-feature ratio), because the complexity of the problem depends on the number of examples $N$ instead of the number of input dimensions $p$.

We have two distinct methods for solving the ridge regression optimization:

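- Primal solution (explicit weight vector): $w = (X^\top X + \lambda I_p)^{-1} X^\top y$

- Dual solution (linear combination of training examples): $w = X^\top \alpha$ with $\alpha = (G + \lambda I_N)^{-1} y$ and $G = X X^\top$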
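
The two routes can be checked against each other numerically. The sketch below assumes the standard closed forms $w = (X^\top X + \lambda I)^{-1} X^\top y$ (primal) and $w = X^\top (X X^\top + \lambda I)^{-1} y$ (dual). The random data, the sizes `N` and `p`, and the value of `lam` are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
N, p = 20, 100                      # fewer samples than features (p > N)
X = rng.normal(size=(N, p))
y = rng.normal(size=N)
lam = 1.0

# Primal: solve a p x p system for the weight vector directly.
w_primal = np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

# Dual: solve an N x N system for alpha, then recover w = X^T alpha.
G = X @ X.T                         # Gram matrix of pairwise inner products
alpha = np.linalg.solve(G + lam * np.eye(N), y)
w_dual = X.T @ alpha

print(np.allclose(w_primal, w_dual))
print("primal system:", (p, p), "dual system:", G.shape)
```

Here the dual route only has to solve a 20 x 20 system, while the primal route solves a 100 x 100 one, which is the case where the dual form is attractive.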

The crucial observation about the dual solution is that the information from the training examples is captured via inner products between pairs of training points in the matrix $G = X X^\top$. Since the computation only involves inner products, we can substitute all occurrences of inner products with a function $k$ that computes:

$$k(x, z) = \langle \phi(x), \phi(z) \rangle$$

This way we obtain an algorithm for ridge regression in the feature space defined by the mapping $\phi$.
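
A minimal sketch of this substitution, assuming the dual form $\alpha = (K + \lambda I)^{-1} y$ with predictions $f(x) = \sum_i \alpha_i \, k(x_i, x)$. The function names, the RBF kernel with `gamma=1.0`, and the random data are illustrative assumptions. With a linear kernel the result should match ordinary (primal) ridge regression, which gives a simple sanity check.

```python
import numpy as np

def linear_kernel(A, B):
    """k(x, z) = <x, z> for every pair of rows in A and B."""
    return A @ B.T

def rbf_kernel(A, B, gamma=1.0):
    """k(x, z) = exp(-gamma * ||x - z||^2) for every pair of rows in A and B."""
    sq = (A**2).sum(1)[:, None] + (B**2).sum(1)[None, :] - 2 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def kernel_ridge_fit(K, y, lam):
    """Dual coefficients: solve (K + lam * I) alpha = y."""
    return np.linalg.solve(K + lam * np.eye(K.shape[0]), y)

rng = np.random.default_rng(0)
X = rng.normal(size=(30, 3))
y = rng.normal(size=30)
X_new = rng.normal(size=(5, 3))
lam = 0.5

# Linear kernel: the feature map is the identity, so this is plain ridge.
alpha_lin = kernel_ridge_fit(linear_kernel(X, X), y, lam)
pred_lin = linear_kernel(X_new, X) @ alpha_lin
w = np.linalg.solve(X.T @ X + lam * np.eye(3), X.T @ y)
pred_primal = X_new @ w

# RBF kernel: same algorithm, but in an implicit nonlinear feature space.
alpha_rbf = kernel_ridge_fit(rbf_kernel(X, X), y, lam)
pred_rbf = rbf_kernel(X_new, X) @ alpha_rbf

print(np.allclose(pred_lin, pred_primal), pred_rbf.shape)
```

Only the kernel function changes between the two fits; the solver never needs the feature map $\phi$ explicitly.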

arxiv.org

https://arxiv.org/pdf/1509.09169

Lasso, Ridge regularization - selection and shrinkage in regression

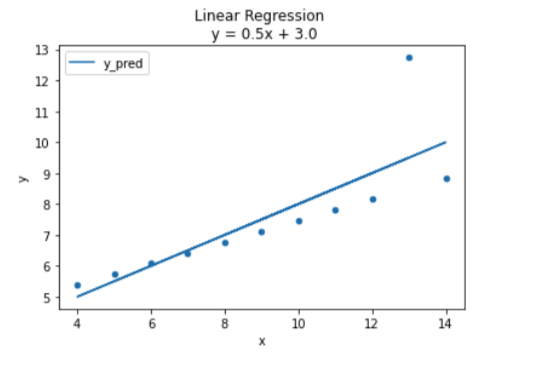

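Ridge regression and Lasso regression are regularization techniques used in linear regression. So what is regularization? To understand it, we start with bias, variance, and overfitting. 1. Bias vs Variance 1) Bias and Variance in Linear model: Bias refers to the error between the predictions a trained model makes on the training data and the training data values. Variance refers to the error between the trained model's predictions on the test data and the correct answers. Therefore, for a linear function as above, the model cannot fit the training data perfectly, and we can see that there is some bias..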

https://sosal.kr/1104

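Ridge regression python

Ridge regression applies an L2 penalty to address the overfitting problem of linear regression. Overfitting can be interpreted with the following table. The total error can be expressed as the sum of the variance and the squared bias. In other words, the regression that minimizes the total error is the Least Square Method, that is, OLS. Let's look at the formula below. N is the number of data points. If there are 1000 samples, then N=1000. P is the number of features. For simple linear regression P=1, and for multiple regression P is 2 or more. y is the actual target value. ß is the weight, which can be seen as the feature coefficient of OLS. Lambda is the hyperparameter of the ridge model, alpha..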

https://signature95.tistory.com/46

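Ridge regression

There are broadly two ways to address overfitting. Reduce the number of features: select the main features manually or use a model selection algorithm. Perform regularization: use all the features, but reduce the parameter values. Here, ridge regression is precisely the regulariz...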

https://velog.io/@zhenxi_23/Ridge-regression릿지회귀

Seonglae Cho
