CSM

csm

SesameAILabs • Updated 2025 Jun 15 18:11

Compute amortization

To mitigate the limitations of the delay pattern, CSM introduces compute amortization. The backbone is trained to predict the zeroth codebook (the coarse, mostly semantic information) on every frame, while the decoder is trained to predict the remaining N-1 codebooks on only a random 1/16 subset of frames. This substantially reduces the memory and compute cost of training, and Sesame reports no perceivable difference in audio decoder losses. The approach is loosely analogous to how the limitations of RNNs were addressed by moving to autoregressive models trained with next-token prediction.

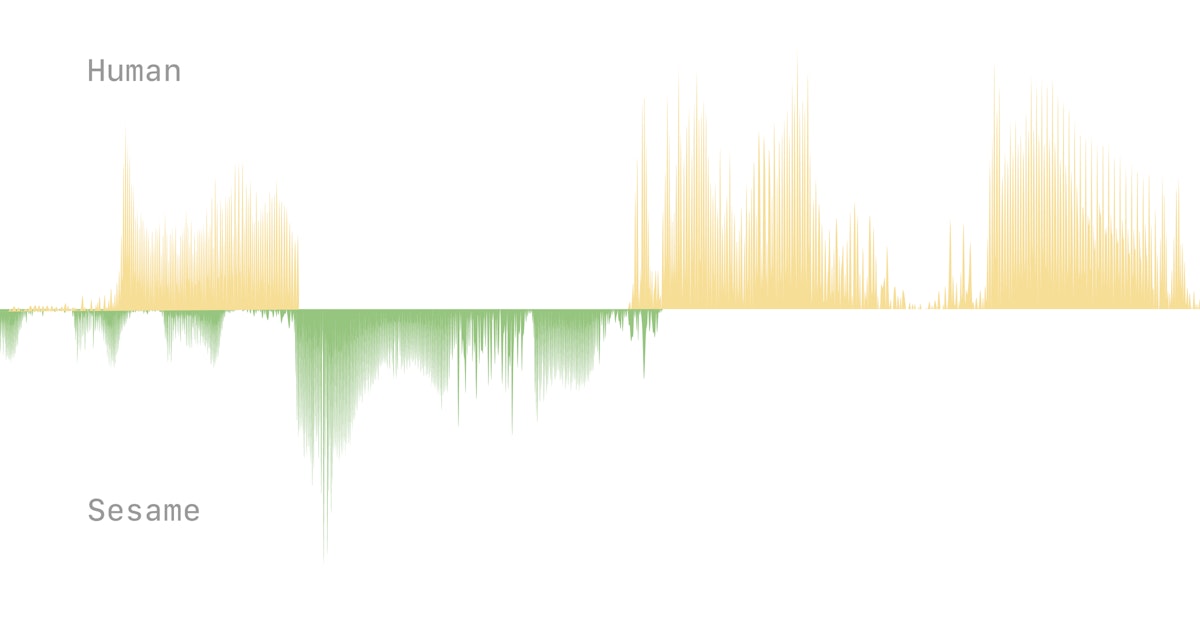
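The frame subsampling described above can be sketched in a few lines. This is only an illustration of which (frame, codebook) positions would receive a training loss, not Sesame's implementation: the function name `amortized_loss_positions`, its parameters, and the example sizes (2048 frames, 32 codebooks) are assumptions made for this note.

```python
import random


def amortized_loss_positions(num_frames, num_codebooks, decoder_fraction=1 / 16, seed=None):
    """Pick the (frame, codebook) positions that contribute to the loss.

    Hypothetical helper: codebook 0 is supervised on every frame (backbone),
    codebooks 1..N-1 only on a random subset of frames (decoder).
    """
    rng = random.Random(seed)

    # Assumption: always keep at least one frame for the decoder.
    num_decoder_frames = max(1, round(num_frames * decoder_fraction))
    decoder_frames = sorted(rng.sample(range(num_frames), num_decoder_frames))

    # Backbone: zeroth codebook on all frames.
    backbone_positions = [(t, 0) for t in range(num_frames)]
    # Decoder: remaining N-1 codebooks on the sampled frames only.
    decoder_positions = [
        (t, k) for t in decoder_frames for k in range(1, num_codebooks)
    ]
    return backbone_positions, decoder_positions, decoder_frames


if __name__ == "__main__":
    frames, codebooks = 2048, 32  # illustrative sizes, not taken from the source
    backbone, decoder, kept = amortized_loss_positions(frames, codebooks, seed=0)
    full = frames * codebooks
    amortized = len(backbone) + len(decoder)
    print(f"decoder frames kept: {len(kept)} of {frames}")
    print(f"loss terms: {amortized} amortized vs {full} full ({amortized / full:.1%})")
```

Under these assumptions, the number of decoder loss terms shrinks roughly by the sampling fraction, while the zeroth codebook still gets a training signal at every frame.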

Crossing the uncanny valley of conversational voice

At Sesame, our goal is to achieve “voice presence”—the magical quality that makes spoken interactions feel real, understood, and valued.

https://www.sesame.com/research/crossing_the_uncanny_valley_of_voice

sesame/csm-1b · Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

https://huggingface.co/sesame/csm-1b

Seonglae Cho