Turing Award-winning French computer scientist

Silver Professor at New York University's Courant Institute of Mathematical Sciences and Vice President, Chief AI Scientist at Meta

Meta scientist Yann LeCun says AI won't destroy jobs forever

Prof Yann LeCun says fears that AI will pose a threat to humanity are "preposterously ridiculous".

https://www.bbc.com/news/technology-65886125

Convolutional Network Demo from 1989

This is a demo of "LeNet 1", the first convolutional network that could recognize handwritten digits with good speed and accuracy.

It was developed in early 1989 in the Adaptive System Research Department, headed by Larry Jackel, at Bell Labs in Holmdel, NJ.

This "real time" demo ran on a DSP card sitting in a 486 PC with a video camera and frame grabber card. The DSP card had an AT&T DSP32C chip, which was the first 32-bit floating-point DSP and could reach an amazing 12.5 million multiply-accumulate operations per second.

The network was trained using the SN environment (a Lisp-based neural net simulator, the predecessor of Lush, itself a kind of ancestor to Torch7, itself the ancestor of PyTorch).

We wrote a kind of "compiler" in SN that produced a self-contained piece of C code that could run the network. The network weights were array literals inside the C source code.
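The idea of emitting a self-contained C file with the weights baked in as array literals can be illustrated with a minimal sketch. This is not the original SN tool (which was Lisp-based); the function names `emit_c_array` and `emit_dense_layer`, the `tanhf` activation, and the single dense layer are all assumptions made for illustration.

```python
def emit_c_array(name, values, per_line=4):
    """Render a flat list of numbers as a C float array literal."""
    rows = []
    for i in range(0, len(values), per_line):
        chunk = values[i:i + per_line]
        # repr(float(v)) always contains '.', 'e', so the 'f' suffix is valid C
        rows.append("    " + ", ".join(repr(float(v)) + "f" for v in chunk))
    body = ",\n".join(rows)
    return f"static const float {name}[{len(values)}] = {{\n{body}\n}};\n"


def emit_dense_layer(name, weights, biases):
    """Emit C source for y = tanh(W x + b), with W and b as literals.

    Hypothetical helper: the layer type and activation are assumptions,
    chosen only to show the "weights inside the source code" idea.
    """
    n_out, n_in = len(weights), len(weights[0])
    flat = [w for row in weights for w in row]  # row-major flattening
    return (
        "#include <math.h>\n\n"
        + emit_c_array(f"{name}_w", flat)
        + emit_c_array(f"{name}_b", biases)
        + f"\nvoid {name}(const float *x, float *y) {{\n"
        f"    for (int o = 0; o < {n_out}; ++o) {{\n"
        f"        float acc = {name}_b[o];\n"
        f"        for (int i = 0; i < {n_in}; ++i)\n"
        f"            acc += {name}_w[o * {n_in} + i] * x[i];\n"
        f"        y[o] = tanhf(acc);\n"
        f"    }}\n"
        f"}}\n"
    )


source = emit_dense_layer("fc1", [[0.5, -1.0], [2.0, 0.25]], [0.0, 1.0])
print(source)
```

The generated file needs no weight-loading code at run time, which suits a small DSP target: the compiler places the constants directly in the binary.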

The network architecture was a ConvNet with 2 layers of 5x5 convolution with stride 2, and two fully-connected layers on top. There was no separate pooling layer (it was too expensive).
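A stride-2 convolution halves the spatial resolution by itself, which is how a network can downsample without a pooling layer. The sketch below shows this on plain nested lists. The 16x16 input size and the zero padding of 2 are assumptions (the description above does not state them), and `conv2d_strided` is a hypothetical helper name.

```python
def conv2d_strided(image, kernel, stride=2, pad=2):
    """2D cross-correlation with zero padding and a stride, on nested lists."""
    k = len(kernel)
    h, w = len(image), len(image[0])
    # Zero-pad the input on all four sides
    padded = [[0.0] * (w + 2 * pad) for _ in range(h + 2 * pad)]
    for r in range(h):
        for c in range(w):
            padded[r + pad][c + pad] = image[r][c]
    out_h = (h + 2 * pad - k) // stride + 1
    out_w = (w + 2 * pad - k) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for u in range(k):
                for v in range(k):
                    acc += kernel[u][v] * padded[i * stride + u][j * stride + v]
            row.append(acc)
        out.append(row)
    return out


image = [[1.0] * 16 for _ in range(16)]      # assumed 16x16 input
kernel = [[1.0 / 25] * 5 for _ in range(5)]  # 5x5 averaging kernel, for illustration

layer1 = conv2d_strided(image, kernel)   # 16x16 -> 8x8
layer2 = conv2d_strided(layer1, kernel)  # 8x8 -> 4x4
print(len(layer1), len(layer1[0]), len(layer2), len(layer2[0]))
```

Under these assumptions, two such layers reduce a 16x16 input to 4x4 while computing only a quarter of the outputs a stride-1 convolution would need at each layer.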

It had 9760 parameters and 64,660 connections.
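These two totals can be reproduced with a short calculation. The layer sizes used here are assumptions: they follow the network in the Neural Computation paper linked in these notes ("Backpropagation Applied to Handwritten Zip Code Recognition"), not anything stated in the description above. The throughput figure at the end is only a rough bound that treats each connection as one multiply-accumulate on the 12.5 million MAC/s DSP mentioned earlier.

```python
# ASSUMED layer sizes (from the linked Neural Computation paper, not from the text above)
K = 5 * 5  # inputs per 5x5 kernel

# H1: 12 feature maps of 8x8, shared kernels, one bias per unit
h1_units = 12 * 8 * 8
h1_conn = h1_units * (K + 1)          # 25 inputs + 1 bias per unit
h1_params = 12 * K + h1_units

# H2: 12 feature maps of 4x4, each reading 8 of the 12 H1 maps
h2_units = 12 * 4 * 4
h2_fan_in = 8 * K
h2_conn = h2_units * (h2_fan_in + 1)
h2_params = 12 * h2_fan_in + h2_units

# H3: 30 units fully connected to H2 (no weight sharing)
h3_units = 30
h3_conn = h3_units * (h2_units + 1)
h3_params = h3_conn

# Output: 10 units fully connected to H3
out_conn = 10 * (h3_units + 1)
out_params = out_conn

total_connections = h1_conn + h2_conn + h3_conn + out_conn
total_params = h1_params + h2_params + h3_params + out_params

# Rough lower bound on time per forward pass: one MAC per connection,
# ignoring memory access, nonlinearities, and I/O
MACS_PER_SECOND = 12.5e6
seconds_per_pass = total_connections / MACS_PER_SECOND

print(total_params, total_connections, round(seconds_per_pass * 1000, 2), "ms")
```

The gap between connections and parameters comes from weight sharing in the two convolutional layers: each kernel is reused at every spatial position.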

Shortly after this demo was put together, we started working with a development group and a product group at NCR (then a subsidiary of AT&T). NCR soon deployed ATM machines that could read the numerical amounts on checks, initially in Europe and then in the US. The ConvNet was running on the DSP32C card sitting in a PC inside the ATM. Later, NCR deployed a similar system in large check reading machines that banks use in their back offices. At some point in the late 90's these machines were processing 10 to 20% of all the checks in the US.

The network shown in this demo is described in our NIPS 1989 paper "Handwritten digit recognition with a back-propagation network".

https://direct.mit.edu/neco/article-abstract/1/4/541/5515/Backpropagation-Applied-to-Handwritten-Zip-Code

The check reading system is described in our 1998 Proc. IEEE paper "Gradient-Based Learning Applied to Document Recognition" and in various shorter papers before that.

Thanks to Larry Jackel for digitizing and editing the old VHS tape (and for holding the camera). There are cameo appearances by Donnie Henderson (who put together much of the demo) and Rich Howard, our lab director.

https://www.youtube.com/watch?v=FwFduRA_L6Q

lecture

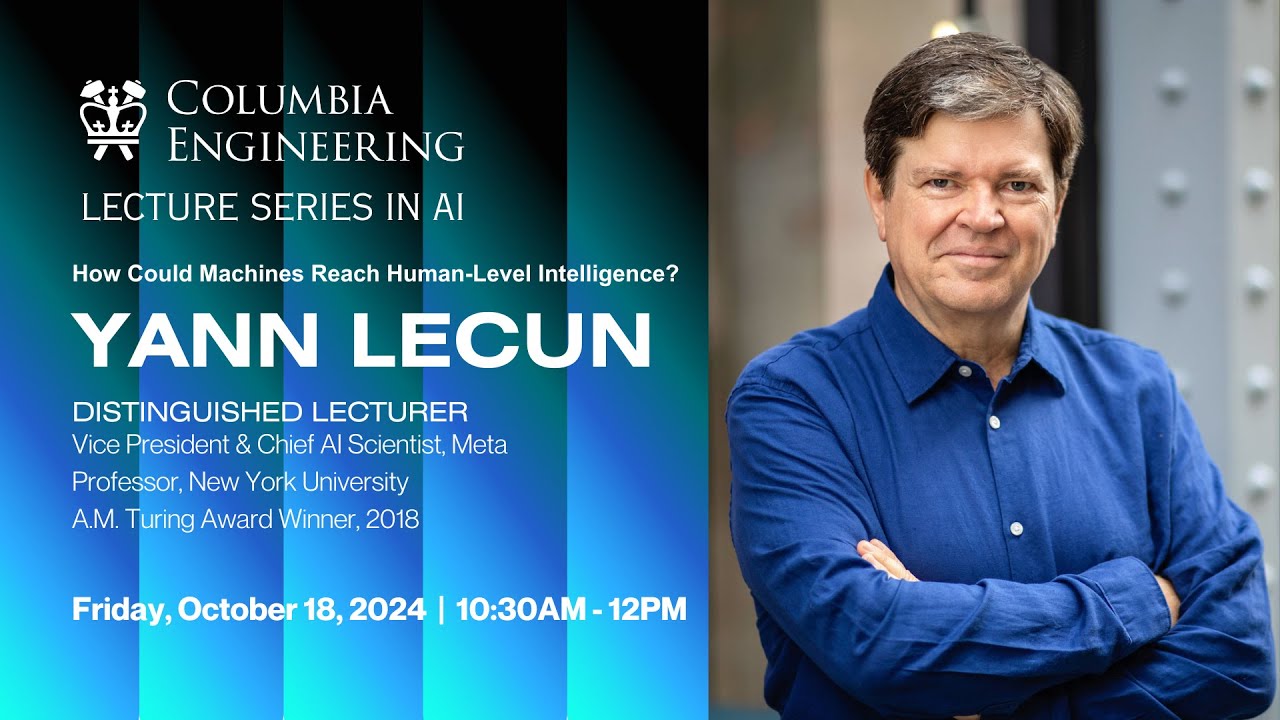

Lecture Series in AI: “How Could Machines Reach Human-Level Intelligence?” by Yann LeCun

ABOUT THE LECTURE

Animals and humans understand the physical world, have common sense, possess a persistent memory, can reason, and can plan complex sequences of subgoals and actions. These essential characteristics of intelligent behavior are still beyond the capabilities of today's most powerful AI architectures, such as Auto-Regressive LLMs.

I will present a cognitive architecture that may constitute a path towards human-level AI. The centerpiece of the architecture is a predictive world model that allows the system to predict the consequences of its actions and to plan sequences of actions that fulfill a set of objectives. The objectives may include guardrails that guarantee the system's controllability and safety. The world model employs a Joint Embedding Predictive Architecture (JEPA) trained with self-supervised learning, largely by observation.

The JEPA simultaneously learns an encoder that extracts maximally-informative representations of the percepts, and a predictor that predicts the representation of the next percept from the representation of the current percept and an optional action variable.
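The data flow described here (encode both percepts, predict the next representation, compare in representation space) can be sketched as follows. This is a toy under stated assumptions, not the architecture from the lecture: the class name `ToyJEPA`, the single linear layers with `tanh`, the random untrained weights, and the mean squared error are all illustrative choices. It also omits training and any mechanism that keeps the representations informative, which a real system needs to avoid collapsing to a constant.

```python
import math
import random


def make_matrix(rows, cols, rng):
    """Random weight matrix as a list of rows."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    """Matrix-vector product on plain lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class ToyJEPA:
    """Hypothetical toy: one-layer encoder and predictor, untrained."""

    def __init__(self, percept_dim, rep_dim, action_dim, seed=0):
        rng = random.Random(seed)
        self.enc = make_matrix(rep_dim, percept_dim, rng)
        self.pred = make_matrix(rep_dim, rep_dim + action_dim, rng)

    def encode(self, percept):
        # Encoder: percept -> representation
        return [math.tanh(z) for z in matvec(self.enc, percept)]

    def predict(self, rep, action):
        # Predictor: (current representation, action) -> next representation
        return [math.tanh(z) for z in matvec(self.pred, rep + action)]

    def prediction_loss(self, percept_t, action, percept_next):
        # The error is measured between representations, not raw percepts
        s_t = self.encode(percept_t)
        s_next = self.encode(percept_next)
        s_hat = self.predict(s_t, action)
        return sum((a - b) ** 2 for a, b in zip(s_hat, s_next)) / len(s_next)


model = ToyJEPA(percept_dim=4, rep_dim=3, action_dim=2)
loss = model.prediction_loss([0.1, 0.2, 0.3, 0.4], [1.0, 0.0], [0.2, 0.3, 0.4, 0.5])
print(loss)
```

The key design point the sketch preserves is that the predictor never has to reconstruct the raw percept; it only has to match the encoder's output for the next percept.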

We show that JEPAs trained on images and videos produce good representations for image and video understanding. We show that they can detect unphysical events in videos. Finally, we show that planning can be performed by searching for action sequences that produce a predicted end state that matches a given target state.
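Planning as search over action sequences can be illustrated with a toy example. Everything here is an assumption made for illustration: the 2D grid state, the four named actions, the hand-written `predict_next` (standing in for a learned predictor), and the exhaustive search (standing in for whatever optimizer the lecture actually uses).

```python
from itertools import product

# Hypothetical action set: each action shifts a 2D state by one step
ACTIONS = {"left": (-1, 0), "right": (1, 0), "up": (0, 1), "down": (0, -1)}


def predict_next(state, action):
    """Stand-in world model: predicts the next state for a given action."""
    dx, dy = ACTIONS[action]
    return (state[0] + dx, state[1] + dy)


def rollout(state, actions):
    """Apply the world model repeatedly to get the predicted end state."""
    for a in actions:
        state = predict_next(state, a)
    return state


def plan(start, target, horizon):
    """Try every action sequence of the given length and keep the one
    whose predicted end state is closest to the target."""
    best_seq, best_cost = None, float("inf")
    for seq in product(ACTIONS, repeat=horizon):
        end = rollout(start, seq)
        cost = (end[0] - target[0]) ** 2 + (end[1] - target[1]) ** 2
        if cost < best_cost:
            best_seq, best_cost = seq, cost
    return best_seq, best_cost


seq, cost = plan(start=(0, 0), target=(2, 1), horizon=3)
print(seq, cost)
```

Exhaustive search grows exponentially with the horizon, so it only works for toy problems; the point is the structure, where the world model is queried inside the search loop and no action is executed until a sequence has been chosen.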

ABOUT THE SPEAKER

Yann LeCun, VP & Chief AI Scientist, Meta; Professor, NYU; ACM Turing Award Laureate

Yann LeCun is VP & Chief AI Scientist at Meta and a Professor at NYU. He was the founding Director of Meta-FAIR and of the NYU Center for Data Science. After a PhD from Sorbonne Université and research positions at AT&T and NEC, he joined NYU in 2003 and Meta in 2013. He received the 2018 ACM Turing Award for his work on AI. He is a member of the US National Academies and the French Académie des Sciences.

ABOUT THE COLUMBIA ENGINEERING LECTURE SERIES IN AI

Columbia Engineering's Lecture Series in AI explores the most cutting-edge topics in artificial intelligence and brings to campus thinkers and leaders who are shaping tomorrow’s technology landscape in a wide variety of fields. Join us to unravel the complexities and possibilities of AI in today's rapidly evolving world.

https://www.youtube.com/watch?v=xL6Y0dpXEwc

Seonglae Cho
