Papers with Code - Cross-Modal Retrieval

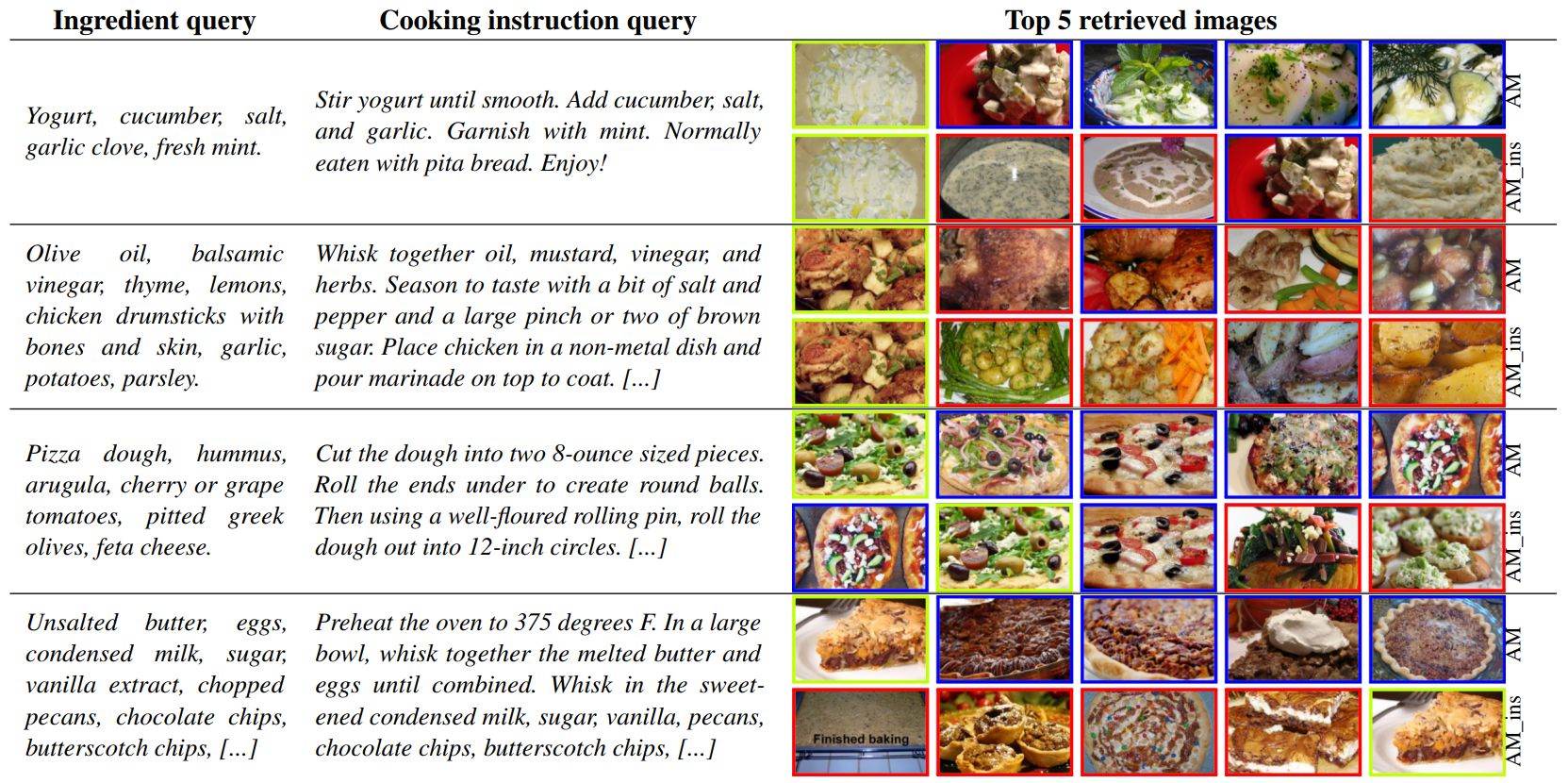

**Cross-Modal Retrieval** is the task of retrieving items across different modalities, such as image-text, video-text, and audio-text retrieval. The main challenge of Cross-Modal Retrieval is the modality gap. The key solution is to map the different modalities to new representations in a shared subspace, so that the resulting features can be compared directly with distance metrics such as cosine distance and Euclidean distance. References: <span class="description-source">[1] [Scene-centric vs. Object-centric Image-Text Cross-modal Retrieval: A Reproducibility Study](https://arxiv.org/abs/2301.05174)</span> <span class="description-source">[2] [Deep Triplet Neural Networks with Cluster-CCA for Audio-Visual Cross-modal Retrieval](https://arxiv.org/abs/1908.03737)</span>
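The shared-subspace idea can be sketched in a few lines of NumPy. This is a toy illustration under loud assumptions, not the method of either referenced paper: the "image" and "text" features are synthetic, and the projections into the shared space are pseudo-inverses of the known generating matrices, standing in for projections that a real system would learn (for example with CCA or a triplet loss). All names (`project`, `retrieve`, `latent`, `img_mix`, `txt_mix`) are hypothetical.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so dot products equal cosine similarity."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.maximum(norms, eps)


def project(features, weight):
    """Map modality-specific features into the shared subspace."""
    return l2_normalize(features @ weight)


def retrieve(query_emb, gallery_emb, k=1):
    """Return indices of the k gallery items closest to each query (cosine)."""
    sims = query_emb @ gallery_emb.T          # cosine similarity matrix
    return np.argsort(-sims, axis=1)[:, :k]   # highest similarity first


rng = np.random.default_rng(0)

# Assumption: 4 paired items share an 8-dim latent "content" vector.
latent = rng.normal(size=(4, 8))

# Assumption: each modality observes the latent through its own linear map,
# which is a simple way to simulate a modality gap (16-dim vs 12-dim features).
img_mix = rng.normal(size=(8, 16))
txt_mix = rng.normal(size=(8, 12))
img_feats = latent @ img_mix
txt_feats = latent @ txt_mix

# Stand-in for learned projections: pseudo-inverses recover the latent space.
img_emb = project(img_feats, np.linalg.pinv(img_mix))
txt_emb = project(txt_feats, np.linalg.pinv(txt_mix))

# Text-to-image retrieval: each text query should find its paired image.
top1 = retrieve(txt_emb, img_emb, k=1)[:, 0]

# Cosine distance and squared Euclidean distance between matched pairs.
cosine_dist = 1.0 - np.sum(txt_emb * img_emb, axis=1)
sq_euclid = np.sum((txt_emb - img_emb) ** 2, axis=1)
```

Because the embeddings are unit-normalized, squared Euclidean distance equals twice the cosine distance, so both metrics produce the same ranking in this setup.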

https://paperswithcode.com/task/cross-modal-retrieval

Seonglae Cho
