A Dataset for Diverse, Explainable Multi-hop Question Answering

In the distractor setting, a question-answering system reads 10 paragraphs to provide an answer (Ans) to a question. They must also justify these answers with supporting facts (Sup).

In the fullwiki setting, a question-answering system must find the answer to a question in the scope of the entire Wikipedia. Similar to in the distractor setting, systems are evaluated on the accuracy of their answers (Ans) and the quality of the supporting facts they use to justify them (Sup).

HotpotQA: A Dataset for Diverse, Explainable Multi-hop Question Answering

Explore HotpotQA

https://hotpotqa.github.io/explorer.html

Papers with Code - HotpotQA Benchmark (Question Answering)

The current state-of-the-art on HotpotQA is Beam Retrieval. See a full comparison of 76 papers with code.

https://paperswithcode.com/sota/question-answering-on-hotpotqa

Papers with Code - hotpot_qa Benchmark (Sequence-to-sequence Language Modeling)

The current state-of-the-art on hotpot_qa is bart-qg-finetuned-hotpotqa. See a full comparison of 0 papers with code.

https://paperswithcode.com/sota/sequence-to-sequence-language-modeling-on-41

HotpotQA: A Dataset for Diverse, Explainable Multi-hop Question Answering

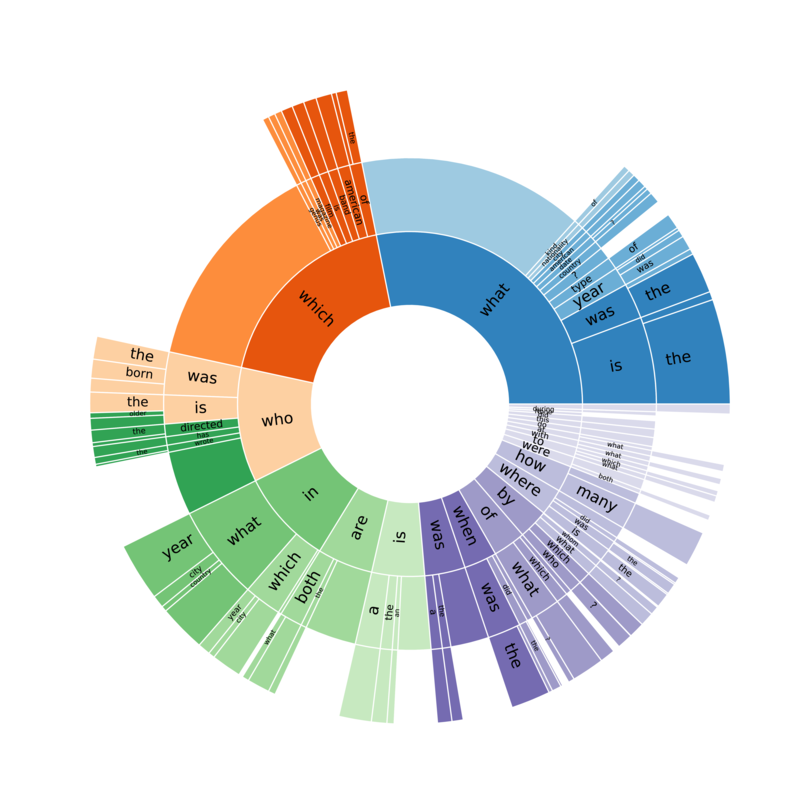

HotpotQA is a question answering dataset featuring natural, multi-hop questions, with strong supervision for supporting facts to enable more explainable question answering systems. It is collected by a team of NLP researchers at Carnegie Mellon University, Stanford University, and Université de Montréal.

https://hotpotqa.github.io/

Seonglae Cho

Seonglae Cho