Teach language models to follow instructions to solve a task

Fine-tuning on instruction datasets improves zero-shot performance. Unlike few-shot prompting, the model does not need to be shown examples in the prompt.
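The contrast between instruction tuning and few-shot prompting can be sketched as follows. This is a minimal illustration under stated assumptions, not a reference implementation. The prompt template is hypothetical and only loosely modeled on Alpaca-style templates. `toy_tokenize` is a stand-in for a real tokenizer. Computing the loss only on response tokens is a common choice but not a universal one; `-100` matches the default `ignore_index` of PyTorch's `CrossEntropyLoss`.

```python
# Hypothetical template, loosely Alpaca-style; real datasets use many variants.
PROMPT_TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"
IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def toy_tokenize(text):
    # Toy stand-in for a real tokenizer: one integer id per whitespace word.
    return [sum(map(ord, word)) for word in text.split()]


def build_example(instruction, response, tokenize):
    """Return (input_ids, labels) so that the loss covers only the response."""
    prompt_ids = tokenize(PROMPT_TEMPLATE.format(instruction=instruction))
    response_ids = tokenize(response)
    input_ids = prompt_ids + response_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids  # mask the prompt
    return input_ids, labels


def zero_shot_prompt(instruction):
    # After instruction tuning, the bare instruction is the whole prompt.
    return PROMPT_TEMPLATE.format(instruction=instruction)


def few_shot_prompt(demos, query):
    # Without instruction tuning, demonstrations are shown in context instead.
    shots = "".join(f"Q: {q}\nA: {a}\n\n" for q, a in demos)
    return shots + f"Q: {query}\nA:"


ids, labels = build_example("Translate to French: cat", "chat", toy_tokenize)
# labels: eight -100 entries for the prompt words, then the id of "chat"
```

The training examples carry the instruction itself. At inference time the zero-shot prompt therefore needs no demonstrations, while the few-shot prompt spends context on them.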

The key practical significance is that anyone can create such datasets easily. Instruction tuning does not require a large dataset: around 50k examples is sufficient, and data quality matters more than quantity.
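Because quality matters more than quantity, curation usually filters the data before fine-tuning. The following is a minimal sketch of that kind of filter. The rules and the `min_response_words` threshold are illustrative assumptions, not a published recipe. The `instruction`/`output` field names follow the Alpaca-style JSON layout and are also an assumption.

```python
def filter_instruction_data(examples, min_response_words=3):
    """Keep complete, de-duplicated examples with non-trivial responses.

    The rules and threshold are illustrative assumptions, not a known recipe.
    """
    seen = set()
    kept = []
    for ex in examples:
        instruction = ex.get("instruction", "").strip()
        response = ex.get("output", "").strip()
        if not instruction or not response:
            continue  # drop incomplete pairs
        key = " ".join(instruction.lower().split())  # normalize case and spacing
        if key in seen:
            continue  # drop duplicate instructions
        if len(response.split()) < min_response_words:
            continue  # drop very short answers
        seen.add(key)
        kept.append({"instruction": instruction, "output": response})
    return kept
```

In practice, quality filtering often also relies on human review or model-based scoring. This sketch only shows where such checks would plug in.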

Instruction Tuning Notion

Instruction Tuning Usages

Evaluation for Instruction Tuning

Paper page - Instruction-Following Evaluation for Large Language Models


https://huggingface.co/papers/2311.07911
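The paper linked above (IFEval) scores models on "verifiable instructions" that a program can check. It reports accuracy both per prompt and per instruction. The following is a minimal sketch of that scoring idea. The checker names and arguments are hypothetical and only in the spirit of the benchmark; they are not its actual code.

```python
import re


# Hypothetical verifiable-instruction checkers (not IFEval's real implementation).
def min_words(response, n):
    return len(response.split()) >= n


def include_keywords(response, keywords):
    return all(re.search(rf"\b{re.escape(k)}\b", response, re.IGNORECASE) for k in keywords)


def no_comma(response):
    return "," not in response


CHECKERS = {"min_words": min_words, "include_keywords": include_keywords, "no_comma": no_comma}


def evaluate(samples):
    """samples: list of (response, [(checker_name, kwargs), ...]).

    Returns (prompt_level_acc, instruction_level_acc). A prompt counts as
    followed only if every instruction attached to it is followed.
    """
    prompt_hits = instr_hits = instr_total = 0
    for response, instructions in samples:
        results = [CHECKERS[name](response, **kwargs) for name, kwargs in instructions]
        prompt_hits += all(results)
        instr_hits += sum(results)
        instr_total += len(results)
    return prompt_hits / len(samples), instr_hits / instr_total
```

Prompt-level accuracy is the stricter of the two metrics, because a single violated instruction fails the whole prompt.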

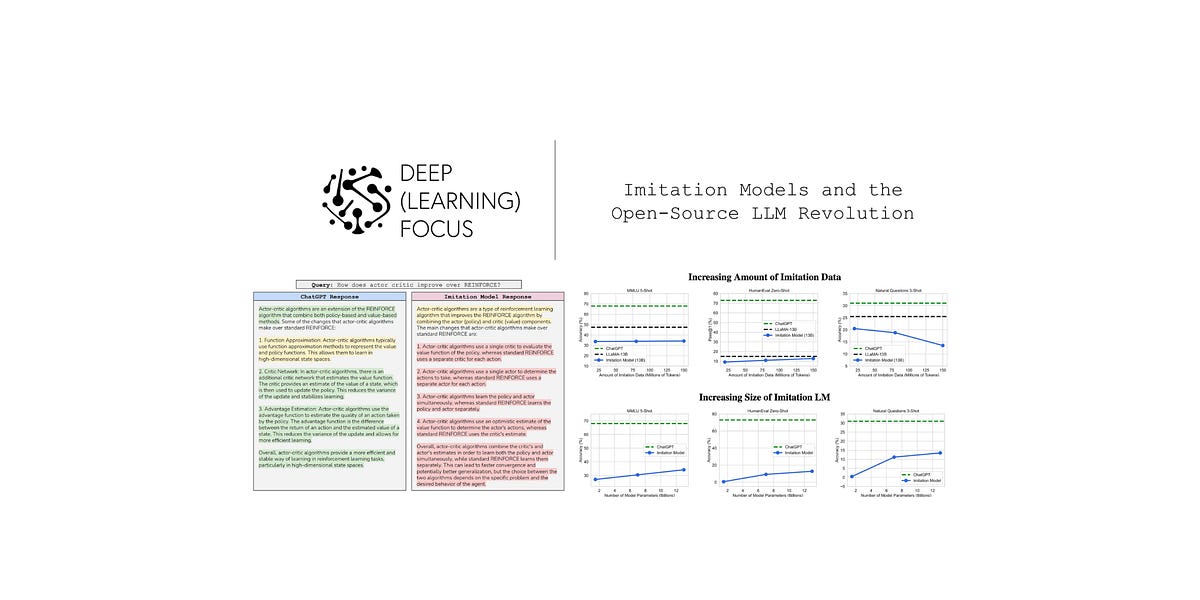

Imitation Models and the Open-Source LLM Revolution

Are proprietary LLMs like ChatGPT and GPT-4 actually easy to replicate?

https://cameronrwolfe.substack.com/p/imitation-models-and-the-open-source

Fine-tuned LLMs are better than their base models at processing positional information, as in entity tracking. Applying activation patching to the Position Transmitter or Value Fetcher mechanisms reproduced a similar ability in the base model, matching the fine-tuned model.


https://arxiv.org/pdf/2402.14811
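The following toy sketch shows the patching idea behind the entity-tracking finding above. It caches an activation from the fine-tuned model and writes it into the same component of the base model during the base model's forward pass. The component names are borrowed from the note, but here they are single scalar ReLU units with made-up weights, not the attention-head groups studied in the paper. This is an illustration of cross-model activation patching under those assumptions, not the paper's code.

```python
def forward(weights, x, patch=None):
    """Tiny one-layer network; `patch` maps unit name -> activation to force."""
    acts = {}
    for name, (w, b) in weights["hidden"].items():
        a = max(0.0, sum(wi * xi for wi, xi in zip(w, x)) + b)  # ReLU unit
        if patch and name in patch:
            a = patch[name]  # overwrite with an activation cached from another model
        acts[name] = a
    out = sum(weights["readout"][name] * a for name, a in acts.items())
    return out, acts


# Made-up weights: the two models differ only in the "value_fetcher" unit.
BASE = {
    "hidden": {"position_transmitter": ([1.0, 0.0], 0.0), "value_fetcher": ([0.0, 0.5], 0.0)},
    "readout": {"position_transmitter": 1.0, "value_fetcher": 1.0},
}
FINETUNED = {
    "hidden": {"position_transmitter": ([1.0, 0.0], 0.0), "value_fetcher": ([0.0, 2.0], 0.0)},
    "readout": {"position_transmitter": 1.0, "value_fetcher": 1.0},
}

x = [1.0, 1.0]
ft_out, ft_acts = forward(FINETUNED, x)  # cache fine-tuned activations
base_out, _ = forward(BASE, x)
patched_out, _ = forward(BASE, x, patch={"value_fetcher": ft_acts["value_fetcher"]})
# base_out == 1.5, ft_out == 3.0, patched_out == 3.0
```

Patching the unit where the models differ recovers the fine-tuned output. Patching a unit they share leaves the base output unchanged. This is how patching localizes which component carries the improvement.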

In instruction-tuned models, attention heads attend more to verbs, which helps the model understand the instruction.


https://aclanthology.org/2024.naacl-long.130.pdf
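A simple way to quantify "attending more to verbs" is to measure how much attention mass a head places on verb tokens. The following is a minimal sketch of that proxy. It is a simplification and not the linked paper's analysis method. The part-of-speech tags are assumed to come from any POS tagger, and the attention matrices below are invented numbers for illustration, not measurements.

```python
def attention_mass_on_tags(attn, tags, target="VERB"):
    """Average share of attention that each query gives to `target`-tagged keys.

    attn[i][j] is the weight query i places on key j (each row sums to 1).
    tags[j] is the part-of-speech tag of token j.
    """
    target_idx = [j for j, t in enumerate(tags) if t == target]
    per_query = [sum(row[j] for j in target_idx) for row in attn]
    return sum(per_query) / len(per_query)


tags = ["VERB", "DET", "NOUN"]  # tokens: "Summarize", "the", "article"
# Invented causal (lower-triangular) attention rows for one head per model.
base_head = [[1.0, 0.0, 0.0], [0.5, 0.5, 0.0], [0.2, 0.3, 0.5]]
tuned_head = [[1.0, 0.0, 0.0], [0.8, 0.2, 0.0], [0.7, 0.1, 0.2]]

base_score = attention_mass_on_tags(base_head, tags)
tuned_score = attention_mass_on_tags(tuned_head, tags)
```

On real models, this score would be averaged over many instructions and heads before comparing a base model with its instruction-tuned counterpart.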

Seonglae Cho
