Activation Probing

Monkey Patching for LLM

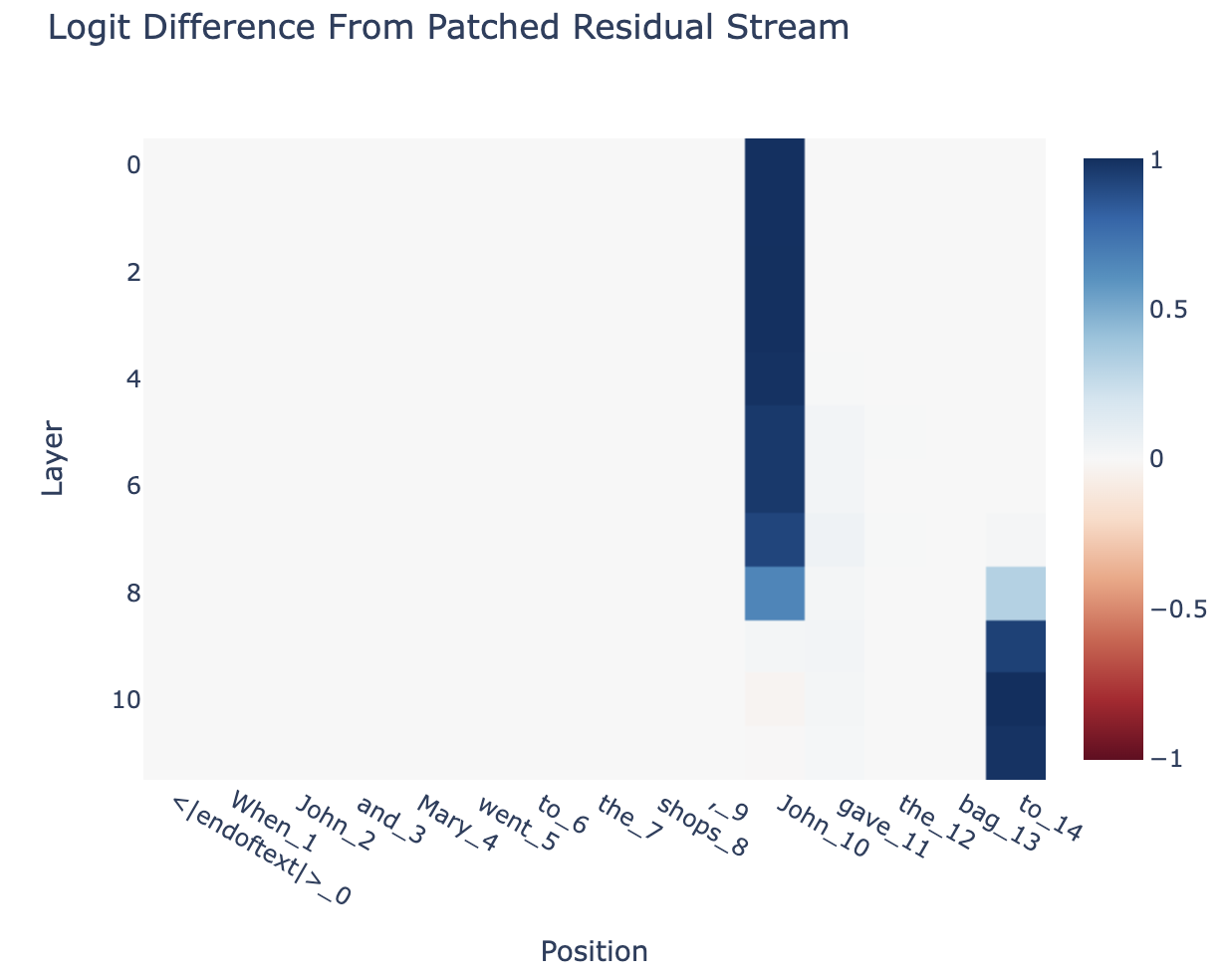

Activation patching is a technique used to understand how different parts of a model contribute to its behavior. In an activation patching experiment, we modify or “patch” the activations of certain model components and observe the impact on model output.

For example, Extracting Steering Vector for CAA

- Clean run

- Corrupted run

- Patched run

Example

- Clean input “What city is the Eiffel Tower in?” → Save clean activation

- Corrupted input “What city is the Colosseum in?” → Save corrupted output

- Patch activation on the corrupted input from the clean activation → observe which activation layer or attention head is important for producing the correct answer, “Paris”

Activation Patching Methods

hands-on

Activation Patching — nnsight

📗 You can find an interactive Colab version of this tutorial here.

https://nnsight.net/notebooks/tutorials/activation_patching/

Neel nanda method

Attribution Patching: Activation Patching At Industrial Scale — Neel Nanda

A write-up of an incomplete project I worked on at Anthropic in early 2022, using gradient-based approximation to make activation patching far more scalable

https://www.neelnanda.io/mechanistic-interpretability/attribution-patching

arxiv.org

https://arxiv.org/pdf/2309.16042

Contrastive Activation Addition SVs operate unstably for specific inputs within the data in-distribution and have limitations in generalization OOD data

arxiv.org

https://arxiv.org/pdf/2407.12404

linear probing

arxiv.org

https://arxiv.org/pdf/1610.01644

runtime monitor

Runtime Monitoring Neuron Activation Patterns

For using neural networks in safety critical domains, it is important to know if a decision made by a neural network is supported by prior similarities in training. We propose runtime neuron...

https://arxiv.org/abs/1809.06573

Seonglae Cho

Seonglae Cho