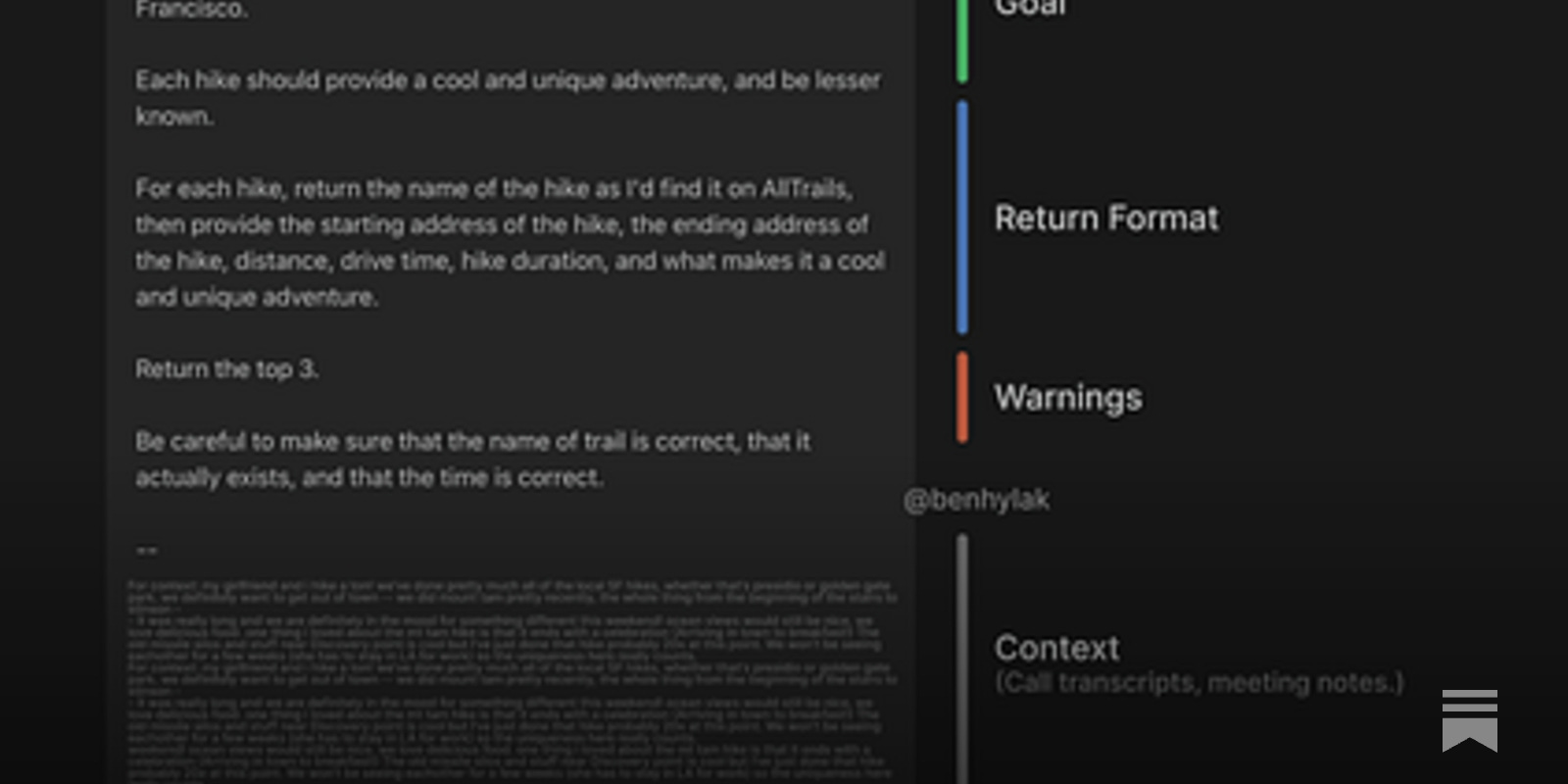

O-series models are very smart, but they need a lot of context to work well.

God is hungry for Context: First thoughts on o3 pro

OpenAI dropped o3 pricing 80% today and launched o3-pro. Ben Hylak of Raindrop.ai returns with Alexis Gauba for the world's first early review.

https://www.latent.space/p/o3-pro

Implementation

Learning to Reason with LLMs

We are introducing OpenAI o1, a new large language model trained with reinforcement learning to perform complex reasoning. o1 thinks before it answers—it can produce a long internal chain of thought before responding to the user.

https://openai.com/index/learning-to-reason-with-llms/

IQ test results (which feel accurate) suggest that o1-preview is far better than o1

Tracking AI

Tracking AI is a cutting-edge application that unveils the political biases embedded in artificial intelligence systems. Explore and analyze the political leanings of AIs with our intuitive platform, designed to foster transparency in the world of artificial intelligence. Stay informed and uncover the political inclinations shaping the algorithms behind the technology revolution.

https://trackingai.org/home

O1 is less powerful than O1-preview due to the less time it spends on thinking (compute time)

389 votes, 135 comments. I have asked the same coding question to both models 10 times and checked whether the code each model produced compiled…

https://www.reddit.com/r/OpenAI/comments/1h7qtaf/o1_is_less_powerful_than_o1preview_due_to_the/

Seonglae Cho
