More intelligence for free by scaling

Andrej Karpathy has suggested that the core of a capable model could be surprisingly small, because current models waste a lot of capacity memorizing things that do not matter. What would remain is a cognitive core (math, physics, computing, prediction), loosely analogous to the layered structure of the human brain.

AI scaling is not everything. Likewise, techniques such as reasoning incentives and memory scaffolding might help, but there is no guarantee they will fix core deficits.

Compute-optimal scaling analyses (notably Chinchilla) recommend scaling training tokens linearly with model size. The constraints on scaling test-time compute, however, differ substantially from those on scaling LLM pretraining.
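As a rough illustration of the linear token-to-parameter rule, the sketch below splits a fixed training compute budget between model size and data. It assumes two widely used approximations that this note does not state: training compute C ≈ 6·N·D FLOPs, and the Chinchilla rule of thumb of roughly 20 training tokens per parameter. The function name `compute_optimal_split` is hypothetical.

```python
import math

# Assumption: training FLOPs C ≈ 6 * N * D (N = parameters, D = training tokens).
FLOPS_PER_PARAM_TOKEN = 6.0
# Assumption: Chinchilla-style rule of thumb, D ≈ 20 * N (tokens grow linearly with N).
TOKENS_PER_PARAM = 20.0


def compute_optimal_split(compute_flops: float) -> tuple[float, float]:
    """Hypothetical helper: return (params, tokens) that use the whole budget
    while keeping the token count proportional to the parameter count."""
    # Substitute D = k * N into C = 6 * N * D, giving C = 6 * k * N^2.
    n_params = math.sqrt(compute_flops / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    n_tokens = TOKENS_PER_PARAM * n_params
    return n_params, n_tokens


if __name__ == "__main__":
    for budget in (1e21, 1e22, 1e23):
        n, d = compute_optimal_split(budget)
        print(f"C={budget:.0e} FLOPs -> N~{n:.2e} params, D~{d:.2e} tokens")
```

Because N and D both grow as the square root of C under these assumptions, a 100x larger budget gives a 10x larger model trained on 10x more tokens; the ratio D/N stays fixed, which is what scaling tokens linearly with model size means.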

- Pretraining scaling is reaching its limits because the supply of data is finite.

- Scaling reasoning models through chain-of-thought (CoT) and AI agents continues to improve reasoning capability.

- Compounding errors pose an increasing threat to ensuring AI alignment.
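The compounding-error point can be made concrete with a toy model. Assuming, purely for illustration, that each step of a long reasoning or agent trajectory is correct independently with probability p, the chance that all n steps are correct is p^n, which decays quickly even when p is high. Real errors are rarely independent, so this is a simplification, and the helper names below are hypothetical.

```python
import math


def chain_success(p_step: float, n_steps: int) -> float:
    """Toy model: probability that all n_steps independent steps succeed."""
    return p_step ** n_steps


def steps_until_below_half(p_step: float) -> int:
    """Smallest n for which the whole chain succeeds with probability < 0.5."""
    if not 0.0 < p_step < 1.0:
        raise ValueError("p_step must be strictly between 0 and 1")
    # p^n < 0.5  <=>  n > log(0.5) / log(p)  (dividing by log(p) < 0 flips the sign).
    threshold = math.log(0.5) / math.log(p_step)
    # floor + 1 is the smallest integer strictly greater than the threshold.
    return math.floor(threshold) + 1


if __name__ == "__main__":
    for p in (0.99, 0.999):
        print(
            f"p={p}: P(100 steps all correct)={chain_success(p, 100):.3f}, "
            f"drops below 50% after {steps_until_below_half(p)} steps"
        )
```

For example, a 99% per-step success rate leaves only about a 37% chance that a 100-step chain is entirely correct.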

AI Scaling Notion

AI Scaling Methods

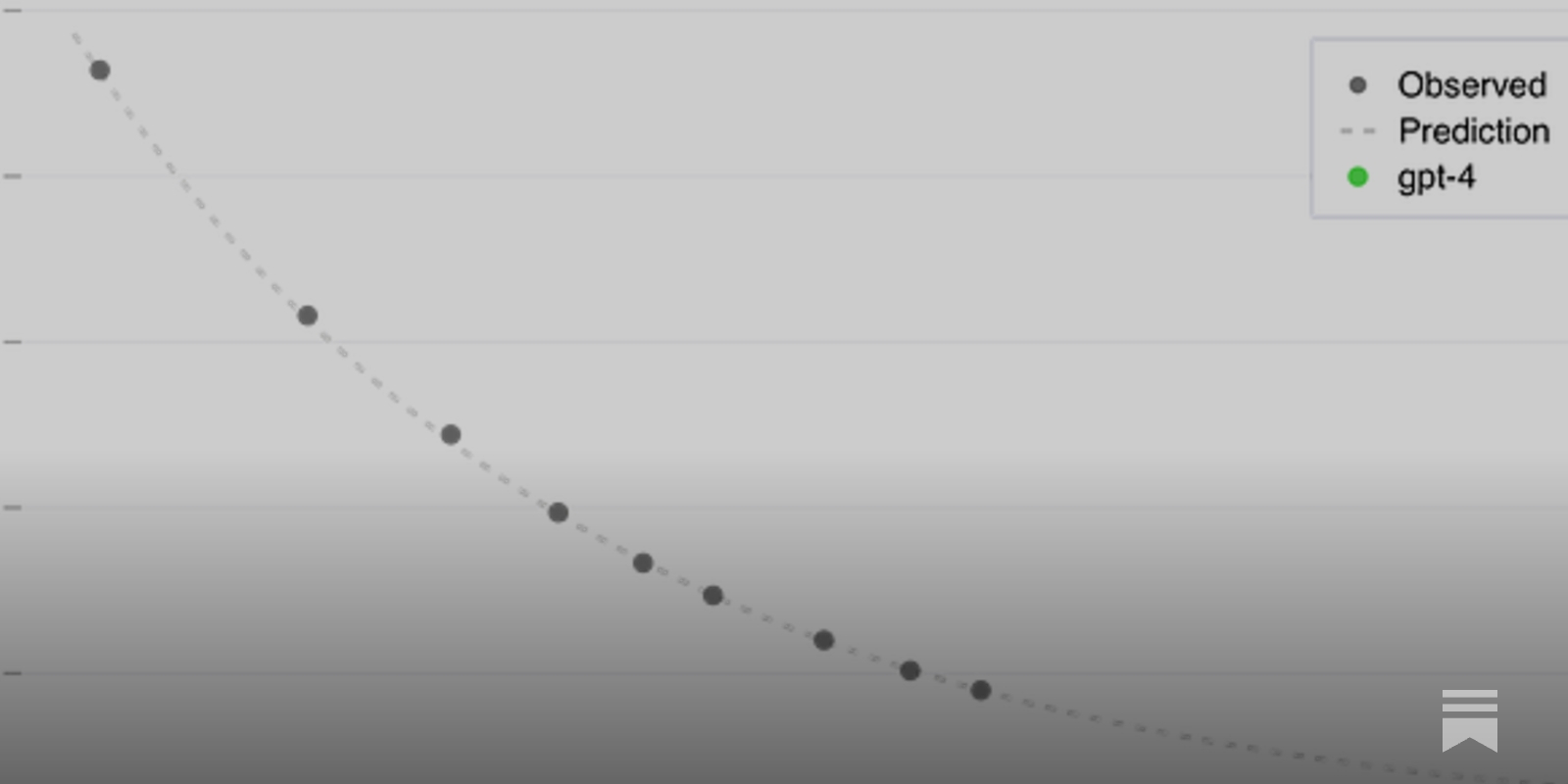

Scaling Law (OpenAI 2020)

https://arxiv.org/pdf/2001.08361.pdf
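The 2020 paper fits test loss as a power law in model size when data and compute are not the bottleneck, of the form L(N) = (N_c / N)^α_N, where N counts non-embedding parameters. The sketch below plugs in the approximate constants reported there (α_N ≈ 0.076, N_c ≈ 8.8 × 10^13); treat them as illustrative and check them against the paper before relying on them. The function name `loss_from_params` is hypothetical.

```python
# Assumed constants, approximately as reported by Kaplan et al. (2020) for the
# parameter-limited regime; loss is cross-entropy in nats per token.
ALPHA_N = 0.076
N_C = 8.8e13


def loss_from_params(n_params: float) -> float:
    """Predicted test loss for a model with n_params non-embedding parameters,
    assuming it is trained to convergence on enough data."""
    return (N_C / n_params) ** ALPHA_N


if __name__ == "__main__":
    for n in (1e8, 1e9, 1e10, 1e11):
        print(f"N={n:.0e}: L~{loss_from_params(n):.3f}")
    # Every 10x in N multiplies the loss by the same factor, 10 ** -ALPHA_N (~0.84).
    print(loss_from_params(1e10) / loss_from_params(1e9))
```

The key property is that each constant multiplicative increase in parameters buys a constant fractional reduction in loss, which is why the curve appears as a straight line on log-log axes.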

Primates have a neural architecture that scales unusually well compared with the brains of other species, analogous to how transformers have better scaling curves than LSTMs and RNNs.

Will scaling work?

Data bottlenecks, generalization benchmarks, primate evolution, intelligence as compression, world modelers, and other considerations

https://www.dwarkeshpatel.com/p/will-scaling-work

Human brain Neuron scaling process

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2776484/

Compute is the bottleneck

The Bitter Lesson

http://www.incompleteideas.net/IncIdeas/BitterLesson.html

Scaling is important

3 ways AI is scaling helpful technologies worldwide

I was first introduced to neural networks as an undergraduate in 1990. Back then, many people in the AI community were excited about the potential of neural networks, which were impressive, but couldn't yet accomplish important, real-world tasks. I was excited, too!

https://blog.google/technology/ai/ways-ai-is-scaling-helpful/

First-principles on AI scaling

How likely are we to hit a barrier?

https://dynomight.net/scaling

How to scale

https://openreview.net/pdf?id=iBBcRUlOAPR

Sam Altman scaling insights (2025)

Three Observations

Our mission is to ensure that AGI (Artificial General Intelligence) benefits all of humanity. Systems that start to point to AGI* are coming into view, and so we think it’s important to...

https://blog.samaltman.com/three-observations

Seonglae Cho
