The expected return of a policy is the expectation of the return over all possible trajectories the policy can generate
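Written out, this could look as follows. The notation is an assumption, since the note does not fix one: $\tau$ is a trajectory, $p_\theta(\tau)$ its probability under the policy $\pi_\theta$, and $R(\tau)$ its total reward over a horizon of $T$ steps.

$$
J(\theta) = \mathbb{E}_{\tau \sim p_\theta(\tau)}\left[ R(\tau) \right] = \int p_\theta(\tau)\, R(\tau)\, d\tau,
\qquad R(\tau) = \sum_{t=0}^{T-1} r(s_t, a_t)
$$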

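The meaning of the derivation is simple: to increase reward, we need to increase the probability of action a given state s for the pairs seen in the training data (which is intuitive). Terms that do not depend on the policy parameters θ (the initial-state distribution and the transition dynamics) are eliminated when the gradient is taken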
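A sketch of the standard log-derivative derivation, under assumed notation ($p_\theta(\tau)$ for the trajectory probability, $R(\tau)$ for the total reward, horizon $T$). The third line expands $\log p_\theta(\tau)$ with the Markov factorization, and the last line drops the terms that do not depend on $\theta$.

$$
\begin{aligned}
\nabla_\theta J(\theta)
&= \int \nabla_\theta p_\theta(\tau)\, R(\tau)\, d\tau \\
&= \mathbb{E}_{\tau \sim p_\theta}\left[ \nabla_\theta \log p_\theta(\tau)\, R(\tau) \right] \\
&= \mathbb{E}_{\tau \sim p_\theta}\left[ \nabla_\theta \left( \log p(s_0) + \sum_{t=0}^{T-1} \log \pi_\theta(a_t \mid s_t) + \sum_{t=0}^{T-1} \log p(s_{t+1} \mid s_t, a_t) \right) R(\tau) \right] \\
&= \mathbb{E}_{\tau \sim p_\theta}\left[ \left( \sum_{t=0}^{T-1} \nabla_\theta \log \pi_\theta(a_t \mid s_t) \right) R(\tau) \right]
\end{aligned}
$$

The second line uses the identity $\nabla_\theta p_\theta(\tau) = p_\theta(\tau)\, \nabla_\theta \log p_\theta(\tau)$.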

For training stability, the method has evolved in the direction of reducing the variance of the gradient estimate (batch gradient, diagonal sum of reward, i.e. reward-to-go)
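One way to write the two ideas together, assuming $N$ sampled trajectories indexed by $i$ and reading the "diagonal sum" as the reward-to-go from step $t$ onward:

$$
\nabla_\theta J(\theta) \approx \frac{1}{N} \sum_{i=1}^{N} \sum_{t=0}^{T-1}
\nabla_\theta \log \pi_\theta\left(a_t^{(i)} \mid s_t^{(i)}\right)
\left( \sum_{t'=t}^{T-1} r\left(s_{t'}^{(i)}, a_{t'}^{(i)}\right) \right)
$$

Averaging over the batch reduces sampling noise, and the inner sum removes rewards earned before step $t$, which the action at step $t$ cannot have influenced.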

We can estimate the return by averaging over sampled sequences, or predict it for a single point. The reduction (for example, subtracting a baseline) can be applied to the summed reward or to each reward individually

By summing only the rewards that come after each action (the diagonal sum), the coefficient becomes larger for early actions when rewards are non-negative, providing more incentive. Subtracting a baseline b doesn't change the expected gradient, but normalization is not applied
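A minimal Python sketch of both steps, using only the standard library. The helper names `rewards_to_go` and `subtract_baseline` are hypothetical, and the batch mean is just one common choice of constant baseline, not necessarily the one intended here.

```python
from typing import List


def rewards_to_go(rewards: List[float], gamma: float = 1.0) -> List[float]:
    """Return G_t = sum over k >= t of gamma^(k - t) * r_k for every step t."""
    out = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step reuses the sum of the steps after it.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        out[t] = running
    return out


def subtract_baseline(batch_returns: List[List[float]]) -> List[List[float]]:
    """Subtract one constant baseline b (assumed: mean of all returns-to-go)."""
    flat = [g for traj in batch_returns for g in traj]
    b = sum(flat) / len(flat)
    # Only a shift is applied; the values are not rescaled.
    return [[g - b for g in traj] for traj in batch_returns]


# Two toy trajectories of per-step rewards (made-up numbers).
batch = [[1.0, 0.0, 2.0], [0.0, 0.0, 1.0]]
returns = [rewards_to_go(r) for r in batch]
advantages = subtract_baseline(returns)
print(returns)
print(advantages)
```

In the first toy trajectory the earliest action gets the largest weight because every later reward is counted toward it. The shifted values sum to zero across the batch, but their spread is unchanged, since no division by a standard deviation takes place.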

The Markov property is applied in the step to the third line of the derivation

- Produces a high-variance gradient (variance of the reward across actions)

  - The reward can change drastically with a minor change in actions

  - Hard to find the optimum, hard to optimize

- Requires on-policy data

  - The derivation of the policy gradient assumes the data come from rollouts of the current policy
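The high-variance point can be illustrated with a toy example. The sketch below is an assumed setup, not taken from the note: a two-armed bandit with a softmax policy and made-up rewards of 10 and 11. It computes the exact mean and variance of the single-sample gradient estimator for the first logit, with and without a constant baseline.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def grad_stats(logits: List[float], rewards: List[float],
               baseline: float) -> Tuple[float, float]:
    """Exact mean and variance, over a ~ pi, of the estimator
    (r_a - baseline) * d/d(theta_0) log pi(a)."""
    probs = softmax(logits)
    samples = []
    for a, p in enumerate(probs):
        # For a softmax policy: d/d(theta_0) log pi(a) = 1[a == 0] - pi(0).
        score0 = (1.0 if a == 0 else 0.0) - probs[0]
        samples.append((p, (rewards[a] - baseline) * score0))
    mean = sum(p * g for p, g in samples)
    var = sum(p * (g - mean) ** 2 for p, g in samples)
    return mean, var


logits = [0.0, 0.0]      # uniform policy over two actions
rewards = [10.0, 11.0]   # deterministic reward per action (made-up)

mean_plain, var_plain = grad_stats(logits, rewards, baseline=0.0)
mean_base, var_base = grad_stats(logits, rewards, baseline=10.5)
print(mean_plain, var_plain)
print(mean_base, var_base)
```

Both estimators have the same expectation, so the baseline leaves the direction of the update unchanged, while the variance of a single sample is far lower with the baseline. In this toy setting the large, similar rewards are what make the unshifted estimator noisy.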

Online Learning

Baseline

Why variance matters

Policy Gradient Theorem Notion

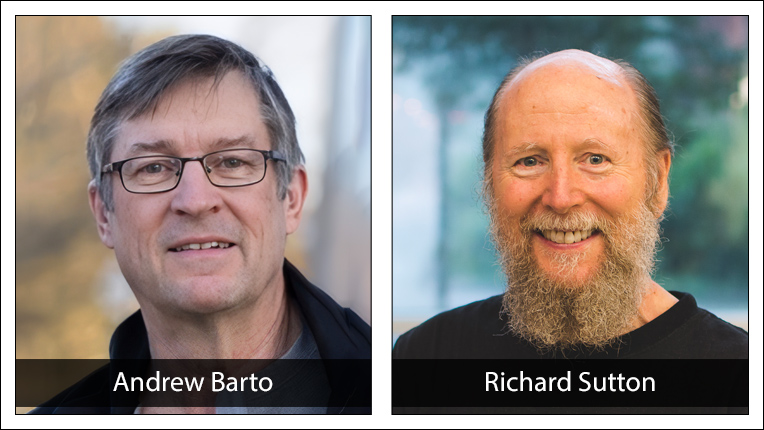

Turing 2024 (Richard Sutton)

- Andrew Barto

Andrew Barto and Richard Sutton are the recipients of the 2024 ACM A.M. Turing Award for developing the conceptual and algorithmic foundations of reinforcement learning.

https://awards.acm.org/about/2024-turing

proceedings.neurips.cc

https://proceedings.neurips.cc/paper_files/paper/1999/file/464d828b85b0bed98e80ade0a5c43b0f-Paper.pdf

Going Deeper Into Reinforcement Learning: Fundamentals of Policy Gradients

As I stated in my last blog post, I am feverishly trying to read more research papers. One category of papers that seems to be coming up a lot recently are tho...

https://danieltakeshi.github.io/2017/03/28/going-deeper-into-reinforcement-learning-fundamentals-of-policy-gradients/

04. Policy Gradient methods - Part1

https://julien-vitay.net/deeprl/PolicyGradient.html#sec:policy-gradient-methods https://lilianweng…

https://wikidocs.net/164397

Seonglae Cho
