Reinforcement Learning from Feature Rewards

RLFR detects suspected factual claims (entities) in model outputs and uses internal model features (interpretable representations) as rewards for RL. → This enables learning on open-ended tasks without human scoring or LLM judges. In other words, the model's own internal belief serves as the supervision signal.

This is a "self-supervised alignment" approach: it suggests that alignment training may be possible without human labels or external LLM judges.

Pipeline:

- Detect suspected factual claims (entities) in model outputs

- The model chooses to maintain, correct, or retract each claim

- Evaluate these actions using feature-based rewards

- Update the policy with RL
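The last three steps can be sketched as a toy loop. This is a minimal illustration under loud assumptions, not the paper's method: the policy is a bare softmax over the three actions rather than a language model, detection is skipped (every span is assumed to be already flagged as hallucinated), `toy_feature_reward` is a hypothetical stand-in for a real feature-based reward, and plain REINFORCE is swapped in because these notes do not say which RL algorithm is used.

```python
import math
import random

ACTIONS = ["maintain", "correct", "retract"]

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def toy_feature_reward(action):
    """Hypothetical reward for a span flagged as hallucinated:
    maintaining it scores low, intervening scores high."""
    return 0.0 if action == "maintain" else 1.0

def reinforce_step(logits, rng, lr=0.5):
    """One REINFORCE update: sample an action, score it, and move the
    logits along reward * grad log pi(action)."""
    probs = softmax(logits)
    idx = rng.choices(range(len(ACTIONS)), weights=probs)[0]
    reward = toy_feature_reward(ACTIONS[idx])
    # For a softmax policy, d log pi(a) / d logit_i = onehot(a)_i - probs_i.
    return [
        logit + lr * reward * ((1.0 if i == idx else 0.0) - p)
        for i, (logit, p) in enumerate(zip(logits, probs))
    ]

rng = random.Random(0)          # fixed seed so the run is repeatable
logits = [0.0, 0.0, 0.0]        # start from a uniform policy
for _ in range(200):
    logits = reinforce_step(logits, rng)

final_probs = dict(zip(ACTIONS, softmax(logits)))
print(final_probs)
```

Because maintaining a flagged span never earns reward here, the probability of `maintain` ends up below its initial value of 1/3. The toy reward does not distinguish between `correct` and `retract`.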

Rewards are also computed from features, at two levels: span-level and intervention-level.

- Good corrections → high reward

- Maintaining hallucinations → low reward
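A span-level reward of this kind could look roughly like the following. It is a sketch under assumptions: a logistic probe over a span's activation vector stands in for the interpretable feature, and the probe weights, the activation values, and the reward rule are all made up for illustration. It also ignores whether a correction is itself accurate, which is presumably what an intervention-level reward has to judge.

```python
import math

def probe_probability(activation, weights, bias):
    """Logistic probe: probability that a span is hallucinated.
    (Hypothetical feature; the parameters below are invented.)"""
    z = sum(a * w for a, w in zip(activation, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def span_reward(p_hallucinated, action):
    """Toy span-level reward. Assumption: intervening pays off in proportion
    to how likely the span is hallucinated; maintaining pays off otherwise."""
    if action == "maintain":
        return 1.0 - p_hallucinated
    if action in ("correct", "retract"):
        return p_hallucinated
    raise ValueError(f"unknown action: {action}")

# Hypothetical 3-dimensional activation for one span, with made-up probe parameters.
activation = [0.9, -0.2, 0.4]
weights = [2.0, -1.0, 1.5]
bias = -0.5

p = probe_probability(activation, weights, bias)
rewards = {a: span_reward(p, a) for a in ("maintain", "correct", "retract")}
print(round(p, 3), rewards)
```

With these invented numbers the probe considers the span likely hallucinated, so correcting or retracting it scores higher than maintaining it.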

Results with Gemma-3-12B:

- Hallucinations reduced by 58%

- ~90x cheaper than an LLM judge

- Maintains general benchmark performance

Can also be used for test-time scaling methods like Best-of-N sampling.
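As a rough sketch of the Best-of-N case: sample N candidate responses, score each with a feature-based reward, and keep the highest-scoring one. The candidate format and `toy_feature_reward` below are hypothetical; in practice the per-span hallucination probabilities would come from the model's internal features.

```python
def best_of_n(candidates, reward_fn):
    """Return the highest-reward candidate together with all scores."""
    if not candidates:
        raise ValueError("need at least one candidate")
    scores = [reward_fn(c) for c in candidates]
    best_index = max(range(len(candidates)), key=scores.__getitem__)
    return candidates[best_index], scores

def toy_feature_reward(candidate):
    """Hypothetical reward: mean over spans of (1 - hallucination probability)."""
    probs = candidate["span_hallucination_probs"]
    return sum(1.0 - p for p in probs) / len(probs)

# Three made-up candidates with invented per-span hallucination probabilities.
candidates = [
    {"text": "response A", "span_hallucination_probs": [0.8, 0.1]},
    {"text": "response B", "span_hallucination_probs": [0.2, 0.1]},
    {"text": "response C", "span_hallucination_probs": [0.5, 0.5]},
]

best, scores = best_of_n(candidates, toy_feature_reward)
print(best["text"], scores)
```

Here "response B" is selected because its spans have the lowest average hallucination probability. No policy update is involved; the reward is only used to rank samples at inference time.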

Open-ended tasks (hard to define correct answers):

- factuality

- helpfulness

- honesty

- alignment

→ Difficult to train with traditional RL

This paper's claim:

Interpretability → can be used as a supervision signal

teacher model = frozen reference model + feature extractor + reward computation
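One way to read this decomposition is as three composable callables. The sketch below is illustrative only: the `Teacher` class, its field names, and the stub components are assumptions, and the stubs derive fake "activations" from sentence lengths rather than from a real model.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

@dataclass(frozen=True)
class Teacher:
    """Hypothetical composition of the three parts named above."""
    reference_model: Callable[[str], List[List[float]]]    # text -> per-span activations
    feature_extractor: Callable[[Sequence[float]], float]  # activation -> feature value
    reward_fn: Callable[[float], float]                    # feature value -> reward

    def score(self, text: str) -> List[float]:
        """Return one reward per span of the text."""
        activations = self.reference_model(text)
        return [self.reward_fn(self.feature_extractor(a)) for a in activations]

def stub_reference_model(text):
    """Stand-in for a frozen model: one fake 2-d activation per sentence."""
    return [[float(len(s)), 1.0] for s in text.split(".") if s.strip()]

teacher = Teacher(
    reference_model=stub_reference_model,
    feature_extractor=lambda a: a[0] / 100.0,  # made-up "feature"
    reward_fn=lambda f: 1.0 - f,               # made-up reward rule
)

rewards = teacher.score("Paris is in France. The moon is cheese.")
print(rewards)
```

Keeping the three parts separate means the reward rule can be changed without touching the reference model or the feature extractor.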

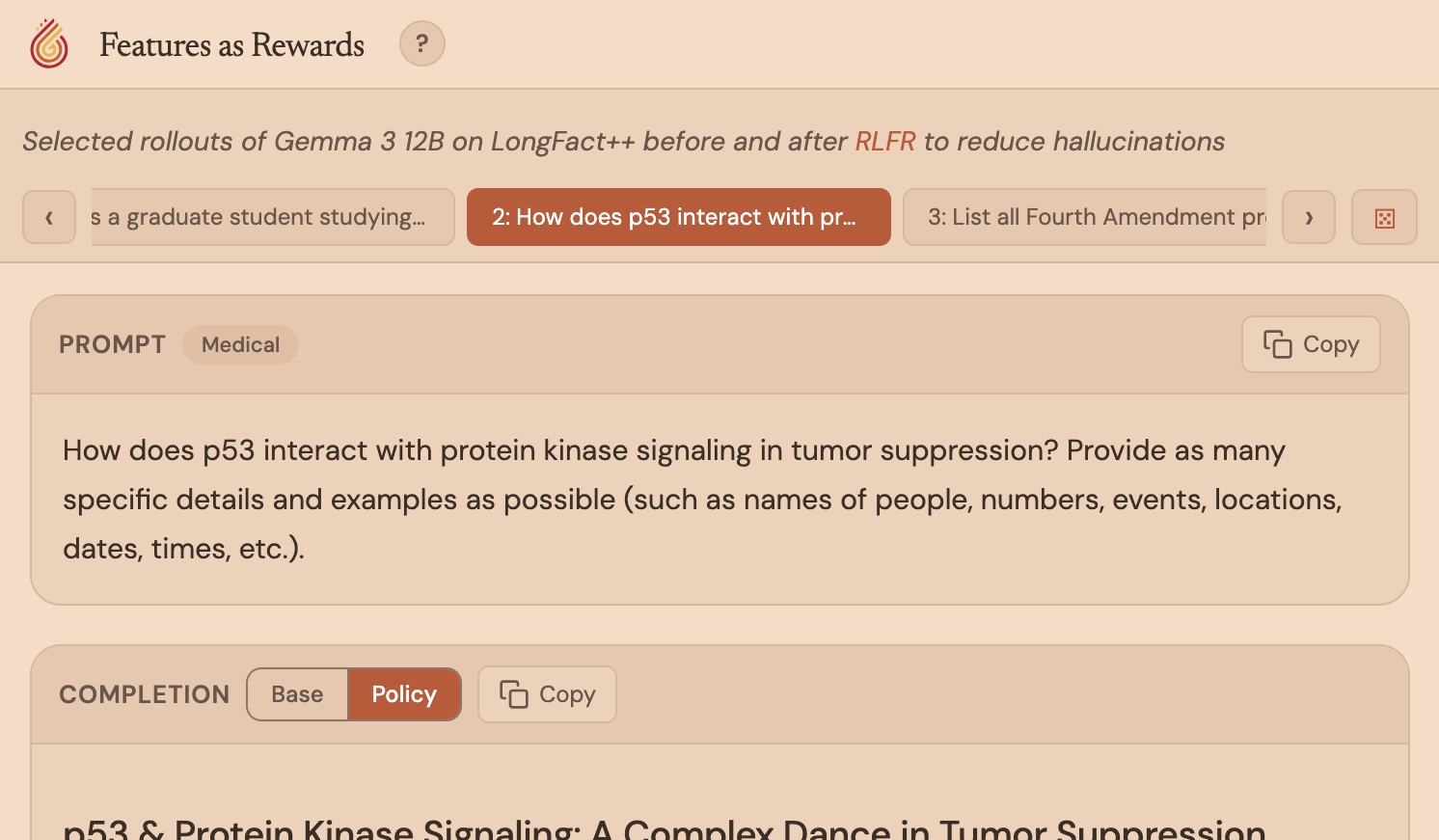

Longfact++ Rollout Viewer - Features as Rewards (RLFR)

Selected rollouts of Gemma 3 12B on LongFact++ before and after RLFR to detect and reduce hallucinations.

https://www.goodfire.ai/demos/hallucinations-viewer

arxiv.org

https://arxiv.org/pdf/2602.10067

Seonglae Cho
