LLN

When iid, which

Condition

Basically requires iid samples; however, ergodicity or

Variance

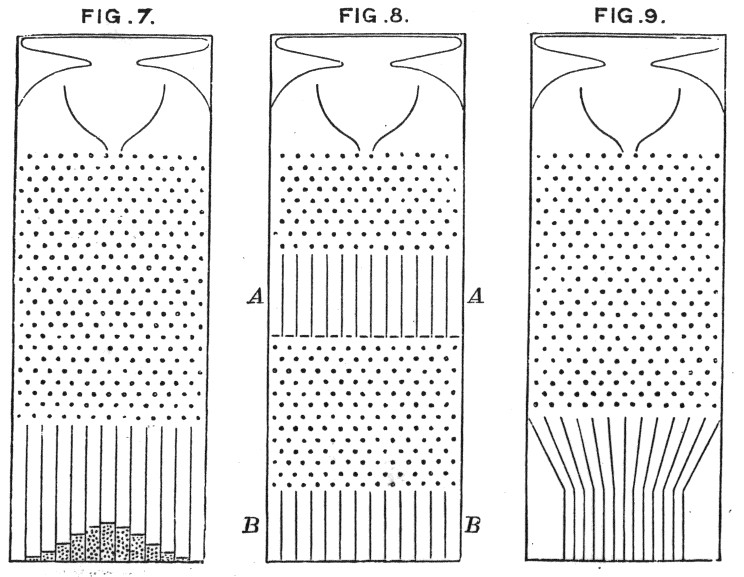

De Moivre–Laplace theorem

In probability theory, the de Moivre–Laplace theorem, which is a special case of the central limit theorem, states that the normal distribution may be used as an approximation to the binomial distribution under certain conditions. In particular, the theorem shows that the probability mass function of the random number of "successes" observed in a series of $n$ independent Bernoulli trials, each having probability $p$ of success, converges to the probability density function of the normal distribution with mean $np$ and standard deviation $\sqrt{np(1-p)}$, as $n$ grows large, assuming $p$ is not $0$ or $1$.

https://en.wikipedia.org/wiki/De_Moivre–Laplace_theorem
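
The approximation described by the theorem can be checked numerically. The following is a minimal sketch, not part of the original note: the function names (`binomial_pmf`, `normal_pdf`, `max_abs_gap`) and the choice of p = 0.3 are illustrative assumptions. It compares the binomial probability mass function with the normal density that has the same mean and standard deviation, and reports the largest pointwise gap for growing n.

```python
import math


def binomial_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p), computed in log space (assumes 0 < p < 1)."""
    log_coeff = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
    log_prob = log_coeff + k * math.log(p) + (n - k) * math.log(1 - p)
    return math.exp(log_prob)


def normal_pdf(x, mean, std):
    """Density of the normal distribution with the given mean and standard deviation."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2 * math.pi))


def max_abs_gap(n, p):
    """Largest |binomial pmf - normal pdf| over k = 0..n, using mean np and std sqrt(np(1-p))."""
    mean = n * p
    std = math.sqrt(n * p * (1 - p))
    return max(
        abs(binomial_pmf(k, n, p) - normal_pdf(k, mean, std))
        for k in range(n + 1)
    )


if __name__ == "__main__":
    p = 0.3  # illustrative value; any p strictly between 0 and 1 works
    for n in (10, 100, 1000):
        print(f"n={n:5d}  max gap={max_abs_gap(n, p):.6f}")
```

The gap should shrink as n grows, which is the behaviour the theorem predicts. The log-space computation is only a design choice to avoid overflow in the binomial coefficient for large n.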

Seonglae Cho
