AI Counting (linebreaking)

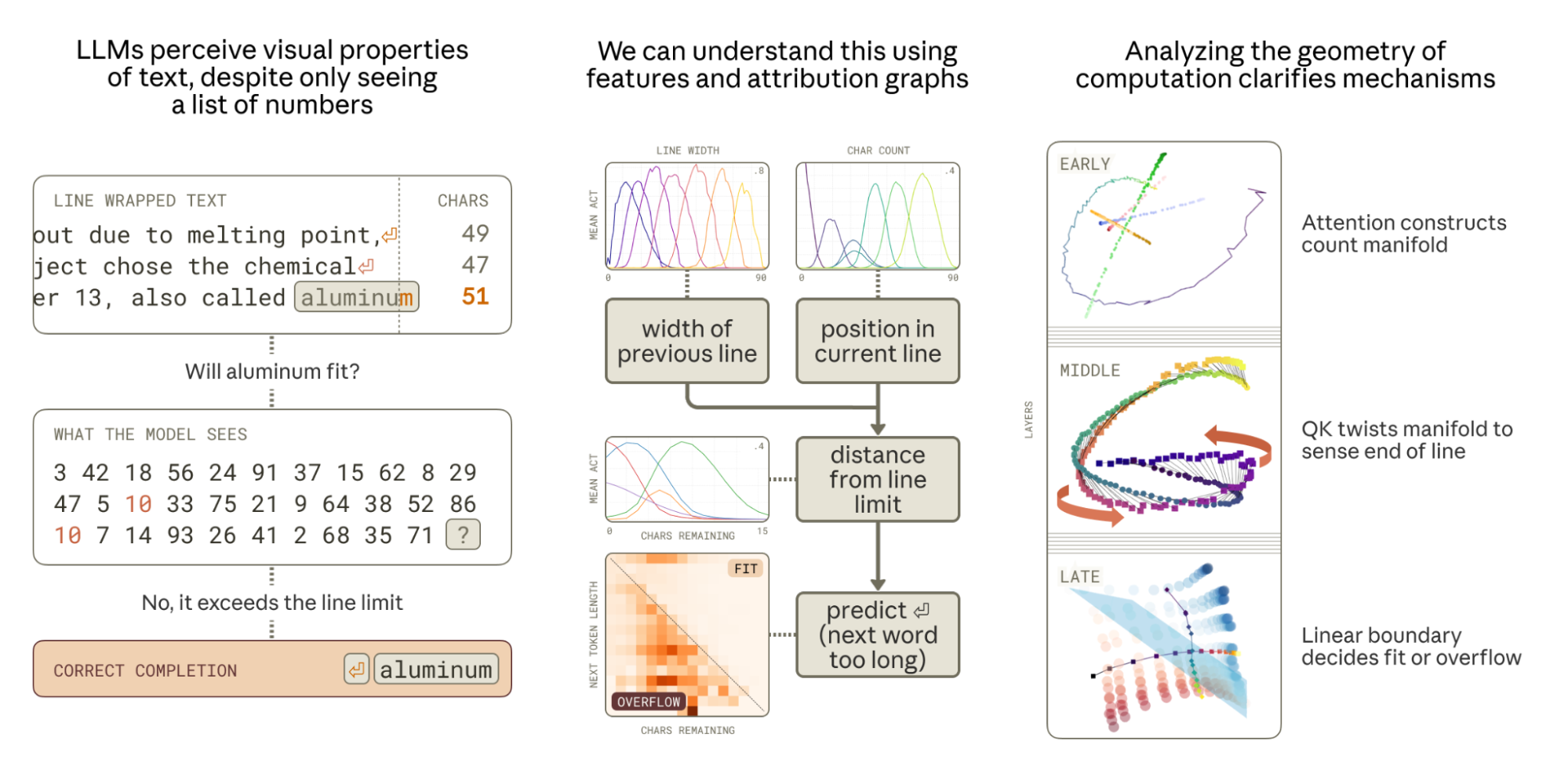

In fixed-width text, the model must predict `\n` by determining whether the next word would exceed the line boundary. The model represents character count (current line length), total line width, remaining character count, next word length, and similar quantities on 1-dimensional "feature manifolds". Each manifold takes a helix shape embedded in a low-rank subspace (≈6D) of the high-dimensional residual stream, with features tiling the manifold. Attention heads detect the boundary through their QK circuit, which rotates the character-count manifold so that it aligns with the line-width manifold. Multiple boundary heads cooperate with different offsets to estimate the remaining character count at high resolution. Next word length and remaining character count are represented in nearly orthogonal subspaces, which makes the line-break decision linearly separable. The manifold is rippled by a Gibbs-like ringing effect: an oscillatory overshoot and undershoot pattern (related to moiré and aliasing) that emerges when projecting signals from high to low dimensions or approximating continuous values in finite dimensions.

When Models Manipulate Manifolds: The Geometry of a Counting Task

We find geometric structure underlying the mechanisms of a fundamental language model behavior.

https://transformer-circuits.pub/2025/linebreaks/index.html
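The mechanism in the note above can be made concrete with a toy sketch. It is not the model's actual circuit: it only assumes that a count can be embedded with a few Fourier features (a 6-dimensional curve), that shifting a count corresponds to rotating that embedding, and that a "boundary head" scores alignment with a dot product. `PERIOD`, `FREQS`, and all function names are hypothetical, and the extra space counted in `should_break` is also an assumption.

```python
import numpy as np

PERIOD = 150                 # hypothetical: counts are embedded modulo this value
FREQS = np.array([1, 2, 3])  # three frequencies -> a 6-dimensional embedding


def should_break(current_len: int, line_width: int, next_word_len: int) -> bool:
    """Reference rule: break if the next word (plus one space) does not fit."""
    remaining = line_width - current_len
    return next_word_len + 1 > remaining


def embed(n: float) -> np.ndarray:
    """Place a count on a closed curve using cos/sin pairs at a few frequencies."""
    angles = 2 * np.pi * FREQS * n / PERIOD
    return np.concatenate([np.cos(angles), np.sin(angles)])


def rotate(vec: np.ndarray, offset: float) -> np.ndarray:
    """Rotate each (cos, sin) pair so that embed(n) becomes embed(n + offset)."""
    k = len(FREQS)
    c, s = vec[:k], vec[k:]
    a = 2 * np.pi * FREQS * offset / PERIOD
    return np.concatenate([c * np.cos(a) - s * np.sin(a),
                           s * np.cos(a) + c * np.sin(a)])


def boundary_score(count: int, width: int, offset: int = 0) -> float:
    """Toy 'boundary head': align the rotated count with the line width."""
    return float(rotate(embed(count), offset) @ embed(width))


if __name__ == "__main__":
    width = 80
    counts = np.arange(width + 1)
    for offset in (0, 3, 6):
        scores = [boundary_score(c, width, offset) for c in counts]
        # Each toy head responds most strongly at a different distance from the boundary.
        print(offset, int(counts[np.argmax(scores)]))
```

In this sketch each toy head peaks where `count + offset` equals the line width, so several heads with different offsets together localize how many characters remain.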

Debugging why an LLM thinks 9.11 > 9.8

Monitor: An AI-Driven Observability Interface

This write-up is a technical demonstration, which describes and evaluates the use of a new piece of technology. For technical demonstrations, we still run systematic experiments to test our findings, but do not run detailed ablations and controls. The claims are ones that we have tested and stand behind, but have not vetted as thoroughly as in our research reports.

https://transluce.org/observability-interface
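As a small illustration of why the strings "9.11" and "9.8" are ambiguous, the sketch below compares them under two readings. This is an assumption about one possible source of confusion, not the mechanism reported in the write-up; both function names are hypothetical.

```python
from decimal import Decimal


def greater_as_decimal(a: str, b: str) -> bool:
    """Compare the strings as decimal numbers."""
    return Decimal(a) > Decimal(b)


def greater_as_version(a: str, b: str) -> bool:
    """Compare the strings as dotted version-style tuples, e.g. (9, 11) vs (9, 8)."""
    def to_tuple(s: str) -> tuple:
        return tuple(int(part) for part in s.split("."))
    return to_tuple(a) > to_tuple(b)


if __name__ == "__main__":
    print(greater_as_decimal("9.11", "9.8"))  # False
    print(greater_as_version("9.11", "9.8"))  # True
```

The same pair of strings orders differently depending on the reading, so a model that mixes the two conventions can produce the wrong decimal comparison.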

Attention tracks relationships between numbers (pattern matching), while MLP layers perform the calculation

aclanthology.org

https://aclanthology.org/2023.emnlp-main.435.pdf

Limitation

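Because this is pattern matching rather than genuine logical or mathematical reasoning, accuracy on the same problem varies with the numbers used.

When irrelevant information is added to a question, the LLM fails to ignore it and performance drops sharply.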

arxiv.org

https://arxiv.org/pdf/2410.05229
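A minimal sketch of how the two limitations above could be probed: generate variants of one templated word problem with different numbers, optionally adding a clause that is irrelevant to the answer. The template, names, and `make_variant` helper are hypothetical illustrations, not the benchmark from the linked paper.

```python
import random

# Hypothetical template: the answer depends only on a and b.
TEMPLATE = ("{name} picks {a} apples on Monday and {b} apples on Tuesday."
            "{distractor} How many apples does {name} have?")
# Hypothetical irrelevant clause: it mentions a number but does not change the answer.
DISTRACTOR = " {c} of the apples are slightly smaller than average."


def make_variant(rng: random.Random, with_distractor: bool = False):
    """Return (question, correct_answer) for one random instantiation."""
    a, b, c = rng.randint(2, 60), rng.randint(2, 60), rng.randint(1, 5)
    name = rng.choice(["Ava", "Liam", "Noah"])
    distractor = DISTRACTOR.format(c=c) if with_distractor else ""
    question = TEMPLATE.format(name=name, a=a, b=b, distractor=distractor)
    return question, a + b


if __name__ == "__main__":
    rng = random.Random(0)
    for flag in (False, True):
        question, answer = make_variant(rng, with_distractor=flag)
        print(question, "->", answer)
```

Comparing a model's accuracy across many such variants, with and without the extra clause, would show whether its answers depend on surface details rather than on the underlying arithmetic.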

Arithmetic is important for world modeling

Arithmetic is an underrated world-modeling technology — LessWrong

Of all the cognitive tools our ancestors left us, what’s best? Society seems to think pretty highly of arithmetic. It’s one of the first things we le…

https://www.lesswrong.com/posts/r2LojHBs3kriafZWi/arithmetic-is-an-underrated-world-modeling-technology

arxiv.org

https://arxiv.org/pdf/2411.03766

A Mechanistic Interpretation of Arithmetic Reasoning in Language Models using Causal Mediation Analysis

arxiv.org

https://arxiv.org/pdf/2305.15054

arxiv.org

https://arxiv.org/pdf/2210.12023

Seonglae Cho
