Model Generalization

Generalization means using information beyond what is given, or building logical steps to reach conclusions that are not stated explicitly.

The idea that more compression leads to more intelligence has a strong philosophical grounding. Pretraining compresses data into generalized abstractions that connect different concepts through analogies, while reasoning is a specific problem-solving skill that involves careful thinking to unlock various problem-solving capabilities.

Objectives

Findings

- Procedural knowledge in documents drives influence on reasoning traces

- For factual questions, the answer often shows up in highly influential documents, whereas for reasoning questions it does not

- LLMs rely on procedural knowledge to learn to produce zero-shot reasoning traces

- There is evidence that code is important for reasoning

AI Reasoning Types

"Physics of Language Models" series - a zhuzeyuan Collection


https://huggingface.co/collections/zhuzeyuan/physics-of-language-models-series-6615c5247dc4e8388b2a846f

Procedural Knowledge in Pretraining

In Cohere's Command R, procedural knowledge (related to procedural memory) showed strong correlations in document influence between math problems of the same type (e.g., gradient calculations). During the completion phase, the influence of individual documents was smaller than in retrieval tasks and more evenly distributed, suggesting that the model learns "solution procedures" rather than retrieving specific facts. Unlike question answering tasks, where the answer text frequently appeared in the top documents, answers were rarely found in the top documents for the reasoning dataset, which supports the view that the model generalizes rather than retrieves.
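The correlation of document influence between similar queries can be illustrated with a small sketch. It assumes Pearson correlation as the measure and uses made-up influence scores for five hypothetical documents; the query names and numbers are illustrative only and are not taken from the paper.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equally long lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length (at least 2 items)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical influence scores of the same five documents for three queries.
gradient_query_a = [0.9, 0.1, 0.7, 0.0, 0.3]  # a gradient calculation
gradient_query_b = [0.8, 0.2, 0.6, 0.1, 0.4]  # another gradient calculation
unrelated_query = [0.1, 0.8, 0.0, 0.9, 0.5]   # a different kind of task

same_type = pearson(gradient_query_a, gradient_query_b)
different_type = pearson(gradient_query_a, unrelated_query)
print(f"same task type: {same_type:.2f}, different task type: {different_type:.2f}")
```

In this toy data, the two gradient queries rank the documents almost identically, while the unrelated query does not. A high correlation of this kind is what one would expect if the same documents teach a shared procedure.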

In particular, math and code examples in the pretraining data contributed significantly to reasoning, with code documents identified as a major source for propagating procedural solutions. StackExchange as a source has more than ten times more influential data in the top and bottom portions of the rankings than would be expected if the influential data were randomly sampled from the pretraining distribution. Other code sources, ArXiv, and Markdown are at least twice as influential as expected under random sampling from the pretraining distribution.
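The "times more influential than expected" comparison can be read as a ratio of shares: a source's share among the top-ranked (or bottom-ranked) documents divided by its share of the pretraining distribution. The sketch below is one way to compute such a ratio; the function name, source labels, and numbers are hypothetical and chosen only to make the arithmetic easy to follow.

```python
from collections import Counter


def source_overrepresentation(ranked_sources, pretraining_share):
    """Share of each source among ranked documents divided by its pretraining share.

    A ratio of 1.0 matches what random sampling from pretraining would give.
    """
    total = len(ranked_sources)
    if total == 0:
        raise ValueError("ranked_sources must not be empty")
    counts = Counter(ranked_sources)
    return {
        source: (counts.get(source, 0) / total) / share
        for source, share in pretraining_share.items()
        if share > 0
    }


# Made-up numbers for illustration only (not the paper's data).
pretraining_share = {"web": 0.90, "code_qa": 0.02, "papers": 0.08}
top_ranked_sources = ["code_qa"] * 4 + ["papers"] * 4 + ["web"] * 12

ratios = source_overrepresentation(top_ranked_sources, pretraining_share)
for source, ratio in sorted(ratios.items(), key=lambda item: -item[1]):
    print(f"{source}: {ratio:.1f}x the share expected under random sampling")
```

Here the hypothetical `code_qa` source makes up 2% of pretraining but 20% of the top-ranked documents, so its ratio is about 10, which is the kind of over-representation the StackExchange claim describes.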

arxiv.org

https://arxiv.org/pdf/2411.12580

Procedural Knowledge in Pretraining Drives LLM Reasoning

Laura’s personal website and blog

https://lauraruis.github.io/2024/11/10/if.html

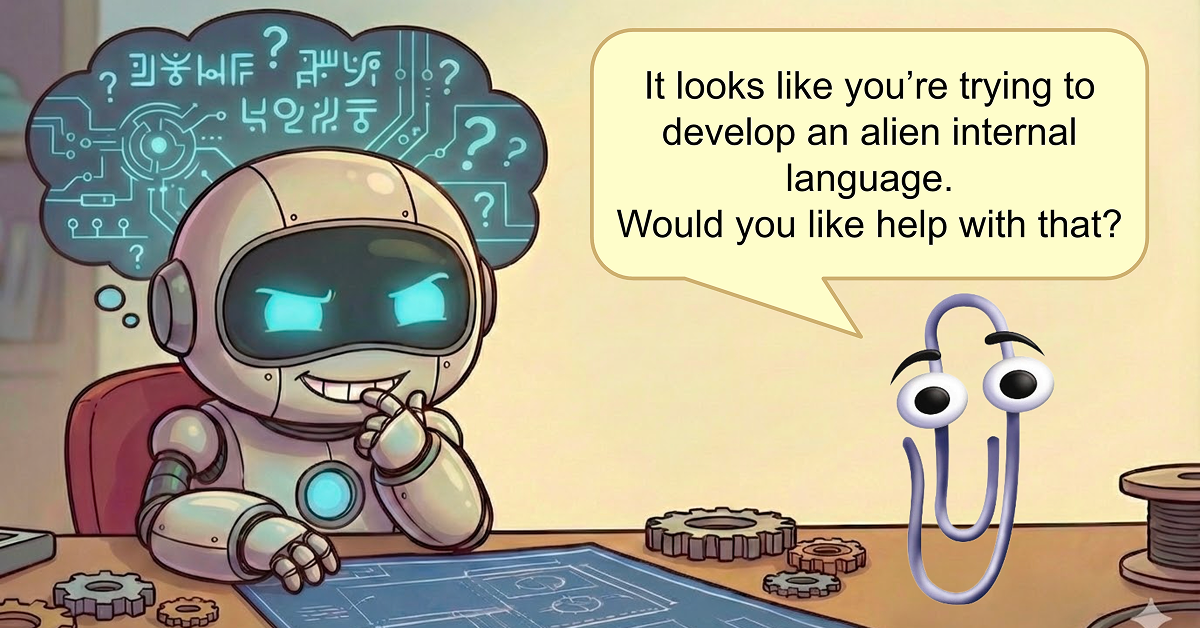

How AI Is Learning to Think in Secret — LessWrong

On Thinkish, Neuralese, and the End of Readable Reasoning

https://www.lesswrong.com/posts/gpyqWzWYADWmLYLeX/how-ai-is-learning-to-think-in-secret

Seonglae Cho
