Bayes' rule

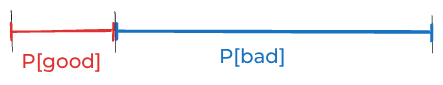

We use Bayes’ rule when we have a hypothesis, have observed some evidence, and want the probability of the hypothesis given that evidence. It is a method for calculating the posterior probability from the prior probability and the likelihood. We can infer the probability of the hypothesis given the evidence by keeping only the possibilities that fit the evidence and asking what fraction of them also satisfy the hypothesis.
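The "keep only the possibilities that fit the evidence" view can be checked with a small count. The population sizes and match counts below are hypothetical numbers chosen only for illustration. Filtering to the people who fit the evidence and taking the fraction who satisfy the hypothesis gives the same result as likelihood × prior / evidence.

```python
from fractions import Fraction

# Hypothetical population, split by whether hypothesis H holds
population = {"H": 10, "not_H": 200}
# Hypothetical number of people in each group who fit the evidence E
fits_evidence = {"H": 4, "not_H": 20}

def posterior_by_filtering(fits_evidence):
    """Keep only the possibilities consistent with E, then take the fraction satisfying H."""
    total_fitting = sum(fits_evidence.values())
    return Fraction(fits_evidence["H"], total_fitting)

def posterior_by_formula(population, fits_evidence):
    """Bayes' rule: P(H | E) = P(E | H) P(H) / P(E)."""
    total = sum(population.values())
    prior_h = Fraction(population["H"], total)                          # P(H)
    lik_h = Fraction(fits_evidence["H"], population["H"])               # P(E | H)
    lik_not_h = Fraction(fits_evidence["not_H"], population["not_H"])   # P(E | not H)
    evidence = lik_h * prior_h + lik_not_h * (1 - prior_h)              # P(E)
    return lik_h * prior_h / evidence

print(posterior_by_filtering(fits_evidence))
print(posterior_by_formula(population, fits_evidence))
```

Both functions return 1/6 for these made-up counts: the evidence narrows 210 possibilities down to 24, and 4 of those satisfy the hypothesis.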

It is a formula expressing Statistical Thinking, derived from the definitions of Conditional probability, Joint Probability, and Marginalization.
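A minimal sketch of how those three definitions fit together, assuming a small hypothetical joint table over a binary hypothesis H and binary evidence E. The conditional probability taken directly from the joint matches the value assembled from prior, likelihood, and marginal evidence.

```python
from fractions import Fraction

# Hypothetical joint distribution P(H = h, E = e) over binary H and binary E
joint = {
    (True, True): Fraction(3, 100),
    (True, False): Fraction(7, 100),
    (False, True): Fraction(12, 100),
    (False, False): Fraction(78, 100),
}

def marginal_h(h):
    """Marginalize out E: P(H = h) = sum over e of P(H = h, E = e)."""
    return sum(p for (hh, _), p in joint.items() if hh == h)

def marginal_e(e):
    """Marginalize out H: P(E = e) = sum over h of P(H = h, E = e)."""
    return sum(p for (_, ee), p in joint.items() if ee == e)

# Conditional probability from its definition: P(H | E) = P(H, E) / P(E)
posterior_direct = joint[(True, True)] / marginal_e(True)

# The same quantity assembled Bayes-style from prior, likelihood, and evidence
prior = marginal_h(True)                      # P(H)
likelihood = joint[(True, True)] / prior      # P(E | H) = P(H, E) / P(H)
evidence = marginal_e(True)                   # P(E)
posterior_bayes = likelihood * prior / evidence

print(posterior_direct, posterior_bayes)
```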

Product rule to Bayes Theorem
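A sketch of the usual derivation, writing H for the hypothesis and E for the evidence: the product rule factors the joint probability in two orders, dividing by P(E) gives Bayes' rule, and marginalization supplies the denominator.

```latex
\begin{aligned}
P(H \cap E) &= P(H \mid E)\,P(E) = P(E \mid H)\,P(H) && \text{(product rule, both orders)} \\
P(H \mid E) &= \frac{P(E \mid H)\,P(H)}{P(E)} && \text{(divide by } P(E) > 0\text{)} \\
P(E) &= \sum_{h} P(E \mid h)\,P(h) && \text{(marginalization over hypotheses)}
\end{aligned}
```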

The car key example illustrates Bayes' rule well because it shows how the probability distribution over a model's parameters changes before and after an observation. Bayes' rule calculates the updated degree of belief, the posterior probability, from the prior probability and new evidence by means of conditional probability.
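To see a parameter distribution change before and after an observation, here is a hypothetical discrete example (not the car key example itself). The parameter θ is a coin's probability of heads, restricted to a small assumed grid with a uniform prior, and we observe a single head.

```python
# Hypothetical grid of parameter values theta = P(heads)
thetas = [0.1, 0.3, 0.5, 0.7, 0.9]
# Uniform prior p(theta) over the grid
prior = [1 / len(thetas)] * len(thetas)

def update(prior, thetas, heads):
    """Return the posterior p(theta | observation) after a single coin flip."""
    # Likelihood p(observation | theta) for each grid value
    likelihood = [t if heads else 1 - t for t in thetas]
    unnormalized = [l * p for l, p in zip(likelihood, prior)]
    evidence = sum(unnormalized)  # p(observation), by marginalizing over theta
    return [u / evidence for u in unnormalized]

posterior = update(prior, thetas, heads=True)
for t, before, after in zip(thetas, prior, posterior):
    print(f"theta={t:.1f}  prior={before:.3f}  posterior={after:.3f}")
```

After one head, probability mass shifts toward larger θ. Passing the returned posterior back into `update` as the new prior chains further observations.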

We can chain the hyperparameters into Bayes' rule: p(θ | D, α, β) = p(D | θ) p(θ | α, β) / p(D | α, β). Here α and β are hyperparameters used to determine the prior distribution p(θ | α, β). The likelihood is written p(D | θ) rather than p(D | θ, α, β) because the hyperparameters shape the prior but do not affect the relationship between the data D and the model parameter θ.
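A concrete case, assuming a Beta(α, β) prior on a coin's heads probability θ with Bernoulli data (a common conjugate pairing, chosen here for illustration). The hyperparameters appear only in the prior; the likelihood never sees them. The sketch compares the closed-form conjugate posterior with a brute-force grid application of Bayes' rule.

```python
def likelihood(theta, data):
    """p(D | theta) for Bernoulli flips; alpha and beta do not appear here."""
    heads = sum(data)
    tails = len(data) - heads
    return theta ** heads * (1 - theta) ** tails

def beta_prior_unnormalized(theta, alpha, beta):
    """Beta(alpha, beta) density up to a constant: the hyperparameters shape only the prior."""
    return theta ** (alpha - 1) * (1 - theta) ** (beta - 1)

def posterior_mean_grid(alpha, beta, data, n=10_000):
    """Posterior mean of theta by brute-force Bayes on a midpoint grid over (0, 1)."""
    thetas = [(i + 0.5) / n for i in range(n)]
    weights = [likelihood(t, data) * beta_prior_unnormalized(t, alpha, beta) for t in thetas]
    return sum(t * w for t, w in zip(thetas, weights)) / sum(weights)

def posterior_params(alpha, beta, data):
    """Closed-form conjugate update: Beta(alpha + heads, beta + tails)."""
    heads = sum(data)
    return alpha + heads, beta + len(data) - heads

data = [1, 0, 1, 1, 0, 1]  # hypothetical flips, 1 = heads
for alpha, beta in [(1, 1), (10, 10)]:
    a, b = posterior_params(alpha, beta, data)
    grid_mean = posterior_mean_grid(alpha, beta, data)
    print(f"Beta({alpha}, {beta}) prior -> Beta({a}, {b}) posterior, "
          f"mean {a / (a + b):.4f} (grid: {grid_mean:.4f})")
```

The same data pull the weak Beta(1, 1) prior further than the stronger Beta(10, 10) prior, which is exactly the role α and β play.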

Log form
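In log form, Bayes' rule reads log P(H | E) = log P(E | H) + log P(H) − log P(E), which turns products into sums and avoids floating-point underflow when many small likelihoods are multiplied. A minimal sketch, assuming a finite set of hypotheses so that log P(E) can be computed with a log-sum-exp over them; the priors and per-observation likelihoods are hypothetical.

```python
import math

def log_sum_exp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def log_posterior(log_priors, log_likelihoods):
    """log P(h | E) = log P(E | h) + log P(h) - log P(E) for each hypothesis h."""
    joint = [lp + ll for lp, ll in zip(log_priors, log_likelihoods)]  # log P(E, h)
    log_evidence = log_sum_exp(joint)                                  # log P(E)
    return [j - log_evidence for j in joint]

# Hypothetical: three hypotheses and 1000 i.i.d. observations whose
# likelihood product (e.g. 0.01 ** 1000) would underflow to 0.0 as a float.
priors = [0.5, 0.3, 0.2]
per_obs_lik = [0.01, 0.012, 0.009]
n_obs = 1000
log_priors = [math.log(p) for p in priors]
log_liks = [n_obs * math.log(q) for q in per_obs_lik]

post = [math.exp(lp) for lp in log_posterior(log_priors, log_liks)]
print([round(p, 4) for p in post])
```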

Bayes Theorem Notation

Bayes theorem, the geometry of changing beliefs

https://www.youtube.com/watch?v=HZGCoVF3YvM

Good if make prior after data instead of before — LessWrong

https://www.lesswrong.com/posts/JAA2cLFH7rLGNCeCo/good-if-make-prior-after-data-instead-of-before

Seonglae Cho