Optimize an expensive-to-evaluate objective function the Bayesian way

Instead of searching for the optimal value directly, Bayesian optimization models the unknown function values as a probability distribution and uses that distribution to decide where to search next.

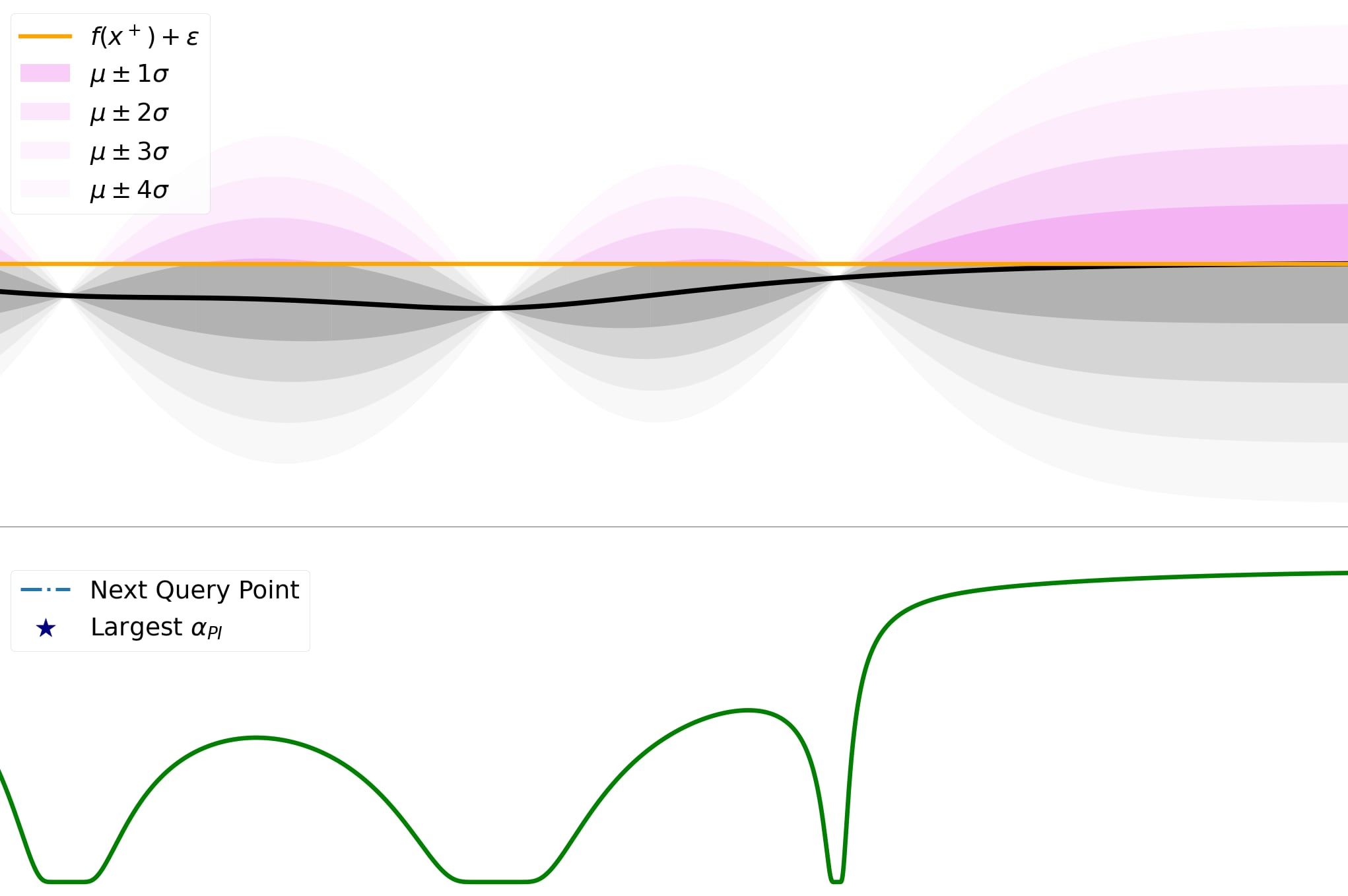

A Gaussian process probabilistically estimates the unknown values of the function, and an acquisition function selects the next point to explore based on that probability distribution.
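To make the Gaussian process part concrete, here is a minimal NumPy sketch of a zero-mean GP posterior. The RBF kernel, its fixed length scale, the small noise term, and the names `rbf_kernel` / `gp_posterior` are assumptions for illustration; real libraries usually also fit the kernel hyperparameters to the data.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential (RBF) covariance between 1-D input arrays a and b."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)

def gp_posterior(x_train, y_train, x_query, noise=1e-6, length_scale=1.0):
    """Posterior mean and standard deviation of a zero-mean GP at x_query."""
    K = rbf_kernel(x_train, x_train, length_scale) + noise * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_query, length_scale)   # train-vs-query covariance
    K_ss = rbf_kernel(x_query, x_query, length_scale)  # query-vs-query covariance
    L = np.linalg.cholesky(K)                          # K = L L^T for a stable solve
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))  # K^{-1} y
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.diag(K_ss) - np.sum(v**2, axis=0)         # diag(K_ss - K_s^T K^{-1} K_s)
    return mean, np.sqrt(np.clip(var, 0.0, None))

# Hypothetical example: three observations of sin(x), queried on a small grid
x_obs = np.array([-2.0, 0.0, 1.5])
y_obs = np.sin(x_obs)
mu, sigma = gp_posterior(x_obs, y_obs, np.linspace(-3.0, 3.0, 7))
```

Near observed points the posterior standard deviation shrinks toward zero, and far from them it returns to the prior value of 1; this uncertainty estimate is what the acquisition function works with.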

Bayesian Optimization Notion

Bayesian Optimization Usages

Great introduction

Bayesian Optimization is a suite of techniques often used to tune hyperparameters. More generally, it can be used to optimize any black-box function.

Active Learning aims to model the entire function, while Bayesian Optimization aims to find the optimal point of a black-box function, guided by an acquisition function. Both use a Gaussian Process as the surrogate model (placing a prior over the space of objective functions), which serves for function approximation, uncertainty estimation, and uncertainty reduction.

Of course, we could use active learning to estimate the true function accurately and then find its maximum, but that is wasteful: evaluations spent refining regions with low values do not help when we only care about the maximum. Bayesian Optimization is well suited to cases where function evaluations are expensive, which makes grid or exhaustive search impractical. So the core question in Bayesian Optimization is: “Based on what we know so far, which point should we evaluate next?” In the active learning case, we picked the most uncertain point, but in Bayesian Optimization we need to balance exploring uncertain regions against exploiting regions already known to have high values (the “gold content” in the gold-mining example of the distill.pub article).
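The difference in the point-selection rule can be sketched with a hypothetical posterior over five candidate points. Active learning here is plain uncertainty sampling (largest standard deviation). For Bayesian optimization the sketch uses the Upper Confidence Bound (UCB) acquisition function, mu(x) + kappa * sigma(x), which the text does not name and is chosen only as one common example; `kappa` and all numbers are made up.

```python
def pick_active_learning(sigma):
    """Active learning (uncertainty sampling): query where the model is least sure."""
    return max(range(len(sigma)), key=lambda i: sigma[i])

def pick_ucb(mu, sigma, kappa=2.0):
    """Bayesian optimization with UCB: mean rewards exploitation, std rewards exploration."""
    return max(range(len(mu)), key=lambda i: mu[i] + kappa * sigma[i])

# Hypothetical posterior mean and standard deviation at five candidate points
mu = [0.1, 0.8, 2.0, 0.5, -1.0]
sigma = [0.3, 0.2, 0.1, 0.4, 1.0]

print(pick_active_learning(sigma))      # 4: most uncertain, even though its mean is lowest
print(pick_ucb(mu, sigma))              # 2: high mean wins with a moderate kappa
print(pick_ucb(mu, sigma, kappa=10.0))  # 4: a large kappa drifts back toward pure exploration
```

A small `kappa` favors the point with the highest predicted value, while a large `kappa` makes UCB behave like uncertainty sampling, which is the exploration/exploitation balance described above.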

We make this decision with an acquisition function, which we maximize at every step to pick the next point. Acquisition functions are heuristics for how desirable it is to evaluate a point, based on our present model. After evaluating the chosen point, we update the model and repeat the process to determine the next point to evaluate.
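One widely used acquisition function is Expected Improvement (EI). For a Gaussian posterior N(mu, sigma^2) at a point and the best value observed so far f*, EI = (mu - f* - xi) * Phi(z) + sigma * phi(z) with z = (mu - f* - xi) / sigma, where Phi and phi are the standard normal CDF and PDF. The note itself does not commit to a specific acquisition function; EI, the exploration margin `xi`, and the helper names below are assumptions for this sketch, which uses the maximization convention.

```python
import math

def normal_pdf(z):
    """Standard normal density phi(z)."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def normal_cdf(z):
    """Standard normal cumulative distribution Phi(z)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def expected_improvement(mu, sigma, best_f, xi=0.0):
    """Closed-form EI for maximization under a Gaussian posterior N(mu, sigma^2)."""
    improvement = mu - best_f - xi
    if sigma <= 0.0:
        # No uncertainty: the improvement is deterministic
        return max(improvement, 0.0)
    z = improvement / sigma
    return improvement * normal_cdf(z) + sigma * normal_pdf(z)

# A certain but small gain vs. an uncertain point whose mean equals the current best
print(expected_improvement(0.1, 0.0, 0.0))  # 0.1
print(expected_improvement(0.0, 1.0, 0.0))  # about 0.3989
```

The uncertain point scores higher even though its mean is lower, because EI credits the chance that its true value lies well above the current best.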

Bayesian Optimization Process

- Choose a surrogate model for the true black-box function and define its prior over the space of objective functions.

- Bayes update: obtain or update the surrogate posterior with Bayes’ rule by incorporating the set of observations into the surrogate model.

- Use an acquisition function, which depends on the surrogate posterior, to determine the next point to evaluate.

- Add the newly sampled data to the set of observations and return to the Bayes update step until convergence or until the evaluation budget is exhausted.
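The four steps above can be put together in a compact loop. This is a sketch under stated assumptions, not a reference implementation: a zero-mean GP with a fixed RBF kernel as the surrogate, Expected Improvement as the acquisition function, a finite grid of candidate points instead of a continuous optimizer, a fixed evaluation budget as the stopping rule, and a cheap toy objective standing in for the expensive black-box function. All function names are hypothetical.

```python
import math
import numpy as np

def rbf(a, b, length_scale=0.5):
    """RBF kernel with unit prior variance between 1-D arrays a and b."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length_scale**2)

def gp_posterior(x_obs, y_obs, x_cand, noise=1e-6):
    """Bayes update: zero-mean GP posterior mean/std at the candidate points."""
    K = rbf(x_obs, x_obs) + noise * np.eye(len(x_obs))
    K_s = rbf(x_obs, x_cand)
    K_inv_Ks = np.linalg.solve(K, K_s)          # K^{-1} K_s
    mu = K_inv_Ks.T @ y_obs                     # K_s^T K^{-1} y
    var = 1.0 - np.sum(K_s * K_inv_Ks, axis=0)  # diag(K_ss - K_s^T K^{-1} K_s)
    return mu, np.sqrt(np.clip(var, 1e-12, None))

_erf = np.vectorize(math.erf)

def expected_improvement(mu, sigma, best):
    """Closed-form EI (maximization); sigma is strictly positive after clipping."""
    z = (mu - best) / sigma
    cdf = 0.5 * (1.0 + _erf(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    return (mu - best) * cdf + sigma * pdf

def bayes_opt(objective, x_cand, x_init, n_iter=15):
    # Step 1: surrogate prior (zero-mean RBF GP, fixed above) plus initial evaluations
    x_obs = np.asarray(x_init, dtype=float)
    y_obs = np.array([objective(x) for x in x_obs])
    for _ in range(n_iter):  # evaluation budget
        # Step 2: posterior given all observations so far
        mu, sigma = gp_posterior(x_obs, y_obs, x_cand)
        # Step 3: maximize the acquisition function over the candidate grid
        ei = expected_improvement(mu, sigma, y_obs.max())
        ei[np.isin(x_cand, x_obs)] = -np.inf  # skip already-evaluated grid points
        x_next = x_cand[np.argmax(ei)]
        # Step 4: evaluate the objective and add the result to the observations
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, objective(x_next))
    i_best = int(np.argmax(y_obs))
    return x_obs[i_best], y_obs[i_best]

# Toy objective with its maximum at x = 0.7 (stand-in for an expensive function)
x_best, y_best = bayes_opt(lambda x: 1.0 - (x - 0.7) ** 2,
                           np.linspace(0.0, 2.0, 201), [0.0, 2.0])
```

In practice, libraries such as BoTorch also fit the kernel hyperparameters at each Bayes update and optimize the acquisition function with gradient-based methods rather than over a fixed grid.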

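This sequential optimization is inexpensive and highly practical, because each iteration only updates the surrogate and optimizes the acquisition function, both of which are cheap compared with evaluating the true objective.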

Exploring Bayesian Optimization

How to tune hyperparameters for your machine learning model using Bayesian optimization.

https://distill.pub/2020/bayesian-optimization/

Acquisition Functions | BoTorch

Acquisition functions are heuristics employed to evaluate the usefulness of one

https://botorch.org/docs/acquisition/

Seonglae Cho
