Finetuned Language Models are Zero-Shot Learners

A method that improves zero-shot performance through fine-tuning with instruction datasets

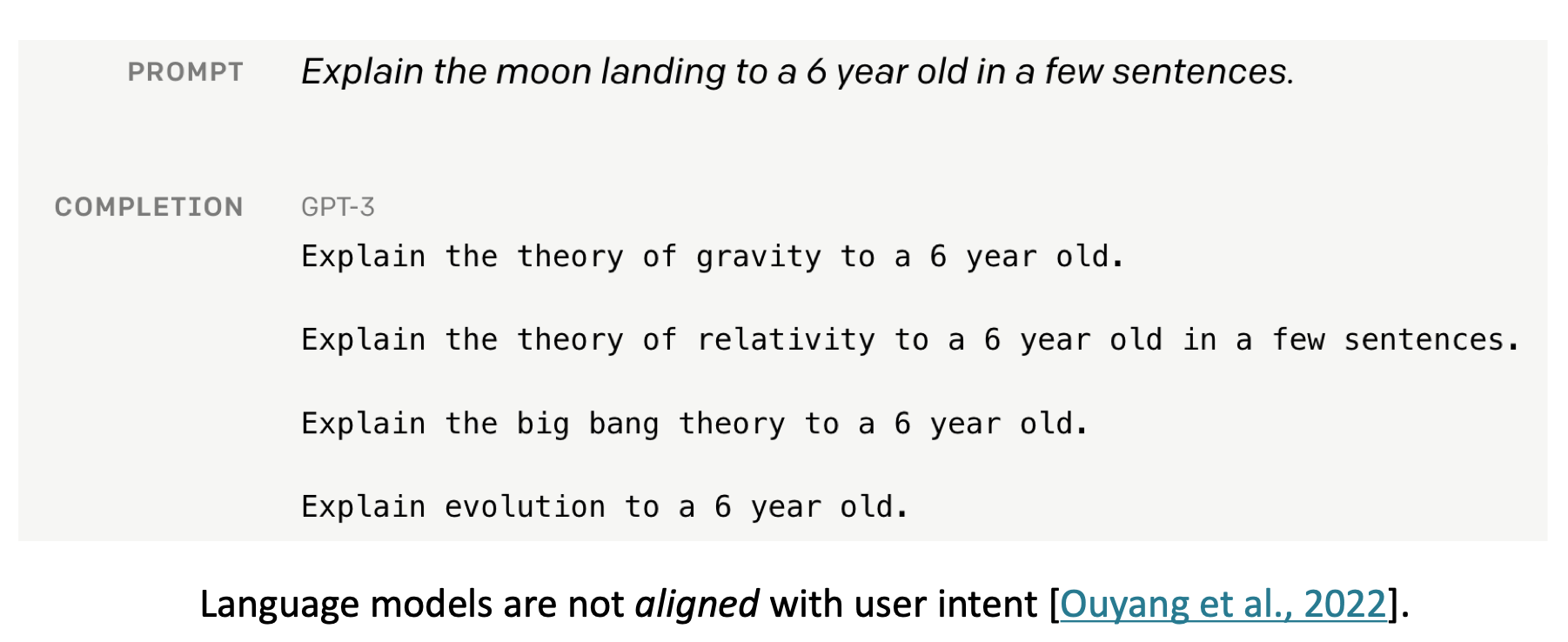

There is a mismatch between LM objective and human preferences, which is improved in RLHF

Introducing FLAN: More generalizable Language Models with Instruction Fine-Tuning

https://ai.googleblog.com/2021/10/introducing-flan-more-generalizable.html?m=1

Instruction Tuning이란?

LLM에 사용되는 Instruction tuning에 대해 알아보자

https://velog.io/@nellcome/Instruction-Tuning이란

Finetuned Language Models Are Zero-Shot Learners

This paper explores a simple method for improving the zero-shot learning abilities of language models. We show that instruction tuning -- finetuning language models on a collection of tasks...

https://arxiv.org/abs/2109.01652

The Flan Collection: Designing Data and Methods for Effective...

We study the design decisions of publicly available instruction tuning methods, and break down the development of Flan 2022 (Chung et al., 2022). Through careful ablation studies on the Flan...

https://arxiv.org/abs/2301.13688

Scaling Instruction-Finetuned Language Models

Finetuning language models on a collection of datasets phrased as instructions has been shown to improve model performance and generalization to unseen tasks. In this paper we explore instruction...

https://arxiv.org/abs/2210.11416

Seonglae Cho

Seonglae Cho