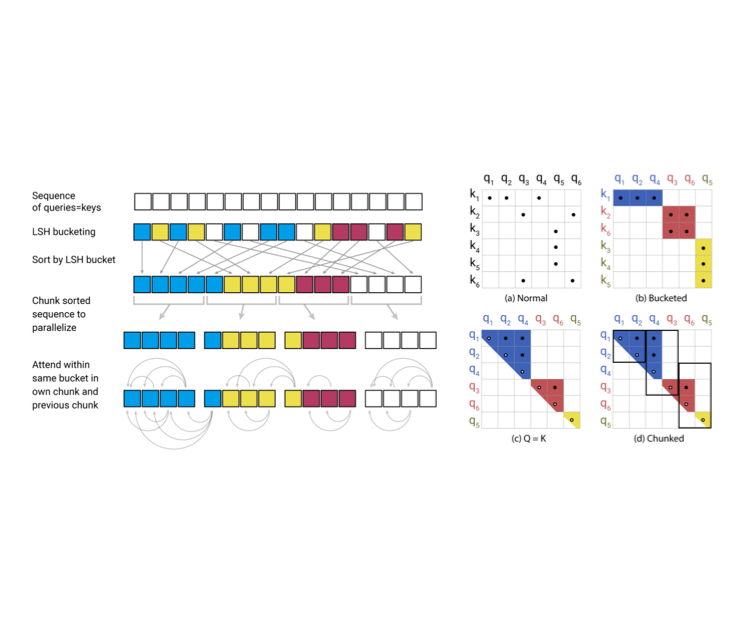

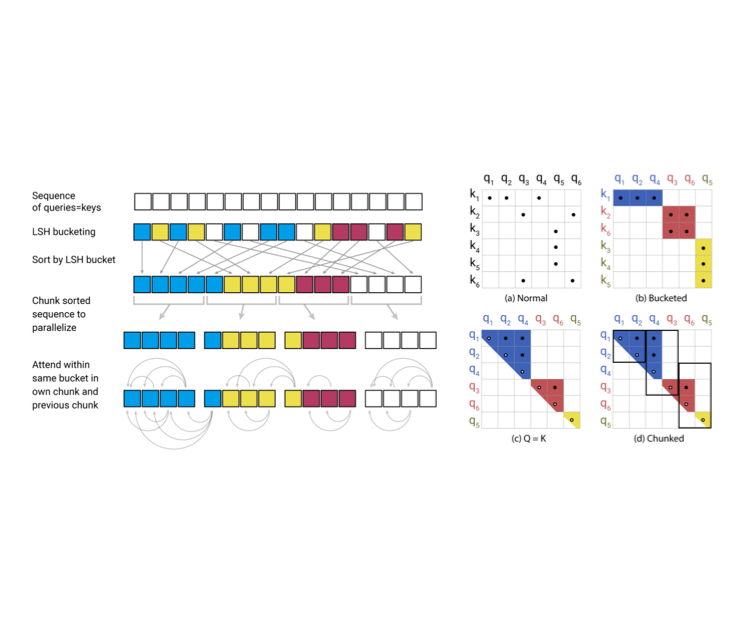

Find high-attention query-key pairs using locality-sensitive hashing (LSH)

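The hash is configured so that nearby vectors are likely to receive the same hash value (land in the same bucket), so attention only needs to be computed between positions that share a bucket.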
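Below is a minimal sketch of bucketed attention. It assumes the angular LSH scheme described for Reformer, h(x) = argmax([xR; -xR]), with a Gaussian projection standing in for the random rotation R. It also assumes shared query/key vectors (`qk`). The function names `random_projection`, `lsh_bucket` and `lsh_attention` are hypothetical and exist only for this illustration. For clarity, each position scans the whole sequence for same-bucket partners. The paper instead sorts positions by bucket, splits them into chunks, and uses multiple hash rounds. It also avoids attending to self, which this sketch does not do.

```python
import math
import random


def random_projection(dim, n_buckets, seed=0):
    """Hypothetical helper: Gaussian matrix R of shape (dim, n_buckets // 2).

    Stands in for the random rotation; n_buckets is assumed to be even.
    """
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n_buckets // 2)] for _ in range(dim)]


def lsh_bucket(x, R):
    """Angular LSH: h(x) = argmax([xR ; -xR]).

    Vectors pointing in similar directions tend to get the same bucket id.
    """
    proj = [sum(x[i] * R[i][j] for i in range(len(x))) for j in range(len(R[0]))]
    concat = proj + [-p for p in proj]
    return max(range(len(concat)), key=lambda k: concat[k])


def lsh_attention(qk, values, n_buckets, seed=0):
    """Shared-QK softmax attention restricted to positions in the same bucket.

    Returns (outputs, bucket ids). Simplified: no sorting/chunking, one hash round.
    """
    R = random_projection(len(qk[0]), n_buckets, seed)
    buckets = [lsh_bucket(v, R) for v in qk]
    scale = 1.0 / math.sqrt(len(qk[0]))
    out = []
    for i, q in enumerate(qk):
        # Candidate keys: only positions hashed into the same bucket as query i
        cand = [j for j in range(len(qk)) if buckets[j] == buckets[i]]
        scores = [scale * sum(a * b for a, b in zip(q, qk[j])) for j in cand]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * values[j][d] for w, j in zip(weights, cand)) / total
                    for d in range(len(values[0]))])
    return out, buckets
```

The hash depends only on a vector's direction. Scaling a vector by a positive constant leaves its bucket unchanged. Negating it shifts the bucket by `n_buckets // 2`, because the two halves of `[xR; -xR]` swap places. Restricting the softmax to same-bucket candidates is what replaces full attention over all pairs.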

A thorough and easy-to-understand review of Reformer

Review of Reformer: The Efficient Transformer

https://tech.scatterlab.co.kr/reformer-review/

Seonglae Cho