Linear Graphical Model without Collider, Markovian

A sequence of chained states with the memoryless property

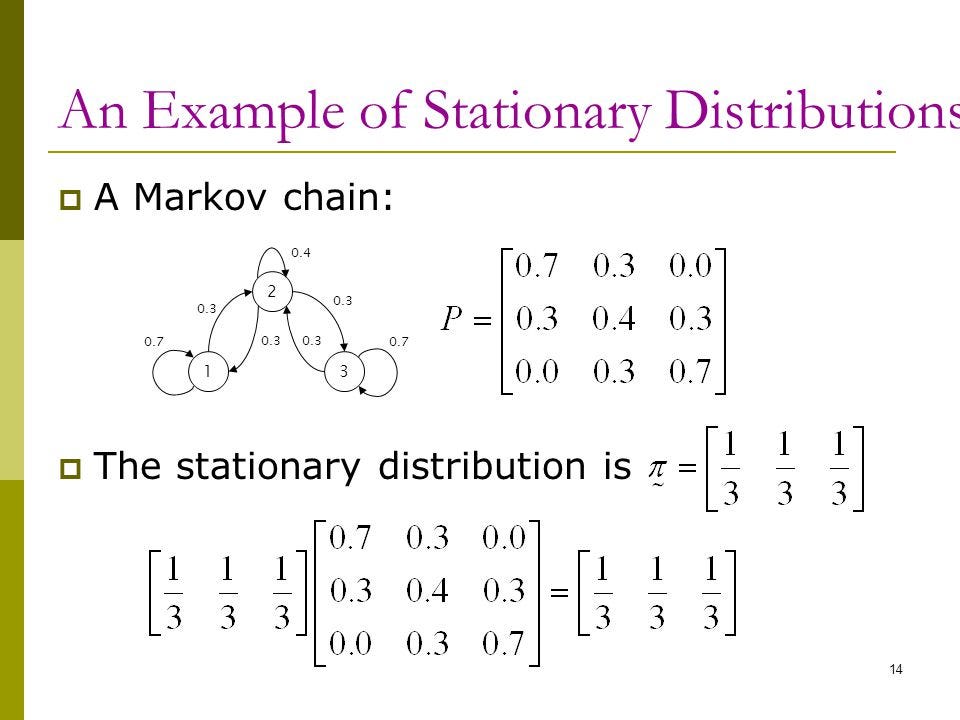

Markov chains are an abstraction of the Random Walk (Non-Deterministic Turing Machine). A Markov chain consists of $N$ states, plus an $N \times N$ transition probability matrix $P$.

- At each step, we are in exactly one of the states

- For $1 \le i, j \le N$, the matrix entry $P_{ij}$ tells us the probability of $j$ being the next state, given we are currently in state $i$, where each row sums to one: $\sum_{j=1}^{N} P_{ij} = 1$.
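To make the definition concrete, here is a minimal sketch of a transition matrix as a plain Python list of rows, plus a check that it really is row-stochastic. The 3-state chain and its probabilities are made-up illustrative values, and `is_row_stochastic` is a hypothetical helper, not part of any library.

```python
# Hypothetical 3-state chain (illustrative values only, e.g. 0 = sunny, 1 = cloudy, 2 = rainy).
# Row i is the distribution of the next state given the current state i,
# so P[i][j] = probability of moving from state i to state j.
P = [
    [0.8, 0.15, 0.05],
    [0.2, 0.6, 0.2],
    [0.25, 0.25, 0.5],
]

def is_row_stochastic(matrix, tol=1e-9):
    """Check that the matrix is N x N, every entry is a probability, and every row sums to 1."""
    n = len(matrix)
    for row in matrix:
        if len(row) != n:  # a transition matrix must be square
            return False
        if any(p < 0.0 or p > 1.0 for p in row):
            return False
        if abs(sum(row) - 1.0) > tol:  # tolerance absorbs floating-point rounding
            return False
    return True

assert is_row_stochastic(P)
print(P[1][2])  # probability that state 2 comes next, given we are in state 1 -> 0.2
```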

A Markov chain models the stochastic evolution of a system captured in discrete snapshots. The stochastic matrix describes the probabilities with which the system transitions between states. As a result, you can start from a messy process that is not a Stationary process and, provided the process can freely visit all states in the state space without getting trapped in a subset of states or a periodic loop (Ergodicity), it will eventually converge to a well-behaved Stationary process governed by a single probability law, the stationary distribution.
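The convergence claim can be checked numerically with power iteration: start from a non-stationary distribution (all mass on one state) and repeatedly apply the transition matrix. This is a minimal sketch assuming the same hypothetical 3-state matrix as above; every entry is positive, so the chain is ergodic, and two very different starting points should land on the same distribution $\pi$ with $\pi P = \pi$. The helper names `step` and `stationary_by_power_iteration` are illustrative.

```python
# Hypothetical ergodic 3-state chain (all entries > 0, so irreducible and aperiodic).
P = [
    [0.8, 0.15, 0.05],
    [0.2, 0.6, 0.2],
    [0.25, 0.25, 0.5],
]

def step(dist, P):
    """One step of the chain: new_j = sum_i dist_i * P[i][j] (row vector times matrix)."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]

def stationary_by_power_iteration(P, start, tol=1e-12, max_iter=10_000):
    """Apply P until the distribution stops changing; a periodic chain would oscillate and never settle."""
    dist = list(start)
    for _ in range(max_iter):
        nxt = step(dist, P)
        if max(abs(a - b) for a, b in zip(nxt, dist)) < tol:
            return nxt
        dist = nxt
    raise RuntimeError("did not converge; the chain may not be ergodic")

pi_a = stationary_by_power_iteration(P, [1.0, 0.0, 0.0])  # start surely in state 0
pi_b = stationary_by_power_iteration(P, [0.0, 0.0, 1.0])  # start surely in state 2
print([round(p, 4) for p in pi_a])  # [0.5217, 0.3043, 0.1739]
print([round(p, 4) for p in pi_b])  # same limit, regardless of the start
```

For this particular matrix the limit is exactly $(12/23, 7/23, 4/23)$, which can be verified by solving $\pi = \pi P$ with $\sum_i \pi_i = 1$ by hand.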

The key point is the Markov assumption: in this discrete-time stochastic process, the probability of the state at a given time depends only on the immediately preceding state, not on the rest of the history.
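A simulation makes the Markov assumption visible in code: to draw the next state, the sampler looks only at the current state's row of the transition matrix and ignores the rest of the path. This is a minimal sketch assuming the same hypothetical 3-state matrix; `simulate` is an illustrative helper built on the standard library's `random.Random.choices`.

```python
import random

# Hypothetical 3-state transition matrix (illustrative values only).
P = [
    [0.8, 0.15, 0.05],
    [0.2, 0.6, 0.2],
    [0.25, 0.25, 0.5],
]

def simulate(P, start, n_steps, seed=0):
    """Sample a trajectory X_0, ..., X_n where X_{t+1} is drawn from row P[X_t] only."""
    rng = random.Random(seed)  # seeded for reproducibility
    states = list(range(len(P)))
    path = [start]
    for _ in range(n_steps):
        current = path[-1]  # the only information used to pick the next state
        nxt = rng.choices(states, weights=P[current], k=1)[0]
        path.append(nxt)
    return path

path = simulate(P, start=0, n_steps=20)
print(path)
```

Because this chain is ergodic, the fraction of time a long trajectory spends in each state should approach the stationary distribution, even though each individual step only ever consults the current state.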

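At any point of the sequence, the marginal distribution is given by

$$p(x_{t+1} = j) = \sum_{i=1}^{N} p(x_{t+1} = j \mid x_t = i)\, p(x_t = i) = \sum_{i=1}^{N} P_{ij}\, p(x_t = i),$$

where $P_{ij} = p(x_{t+1} = j \mid x_t = i)$ is the transition probability matrix. Writing the marginal at time $t$ as a row vector $\pi^{(t)}$, this reads $\pi^{(t+1)} = \pi^{(t)} P$, and therefore $\pi^{(t)} = \pi^{(0)} P^{t}$.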
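The marginal recursion and its closed form can be compared directly. This minimal sketch, again assuming the hypothetical 3-state matrix and a start concentrated on state 0, computes $\pi^{(3)}$ once by applying the sum over the previous state three times and once as $\pi^{(0)} P^{3}$; both should give the same vector. The helpers `vec_mat`, `mat_mat`, and `mat_pow` are illustrative pure-Python stand-ins for a linear-algebra library.

```python
# Hypothetical 3-state transition matrix (illustrative values only).
P = [
    [0.8, 0.15, 0.05],
    [0.2, 0.6, 0.2],
    [0.25, 0.25, 0.5],
]

def vec_mat(v, M):
    """Row vector times matrix: (vM)_j = sum_i v_i * M[i][j]."""
    return [sum(v[i] * M[i][j] for i in range(len(v))) for j in range(len(M[0]))]

def mat_mat(A, B):
    """Matrix product, built row by row from vec_mat."""
    return [vec_mat(row, B) for row in A]

def mat_pow(M, t):
    """M^t by repeated multiplication, starting from the identity."""
    n = len(M)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(t):
        result = mat_mat(result, M)
    return result

pi0 = [1.0, 0.0, 0.0]  # start surely in state 0
t = 3

# (1) Recursion: p(x_{k+1} = j) = sum_i p(x_k = i) * P[i][j], applied t times.
pi_t = pi0
for _ in range(t):
    pi_t = vec_mat(pi_t, P)

# (2) Closed form: pi^(t) = pi^(0) P^t.
pi_t_closed = vec_mat(pi0, mat_pow(P, t))

print(pi_t)
print(pi_t_closed)
```

The recursion is what one would use in practice for a single trajectory of marginals; the closed form is handy when many starting distributions share the same horizon $t$, since $P^{t}$ can be computed once.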

Markov Chain Notion

[Machine learning] Markov Chain, Gibbs Sampling, 마르코프 체인, 깁스 샘플링 (day2 / 201010)

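Q. How would you explain a Markov chain to a high school student? Q. Where are Markov chains used in machine learning algorithms? Q. What is Gibbs sampling? Q. Why use Gibbs sampling? sites.google.com/site/machlearnwiki/RBM/markov-chain (I'm copying this here for my own reference; see the link for the original!) A Markov chain is described as a discrete stochastic process with the Markov property.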

https://huidea.tistory.com/128


Markov Chain Monte Carlo Without all the Bullshit

I have a little secret: I don’t like the terminology, notation, and style of writing in statistics. I find it unnecessarily complicated. This shows up when trying to read about Markov Chain M…

https://jeremykun.com/2015/04/06/markov-chain-monte-carlo-without-all-the-bullshit/

Markov Chain & Stationary Distribution

I first heard of the Markov chain as an undergraduate, in probabilistic OR or some similar class… Anyway, there is no need to bring up OR; as far as I know, the concept also appears in probability theory.

https://kim-hjun.medium.com/markov-chain-stationary-distribution-5198941234f6

Seonglae Cho