Mobile AI, Edge AI

Edge AI Tools

Mobile LLMs below 1B parameters (or SmolLM2, Llama 1B, Qwen 1B, Hymba)

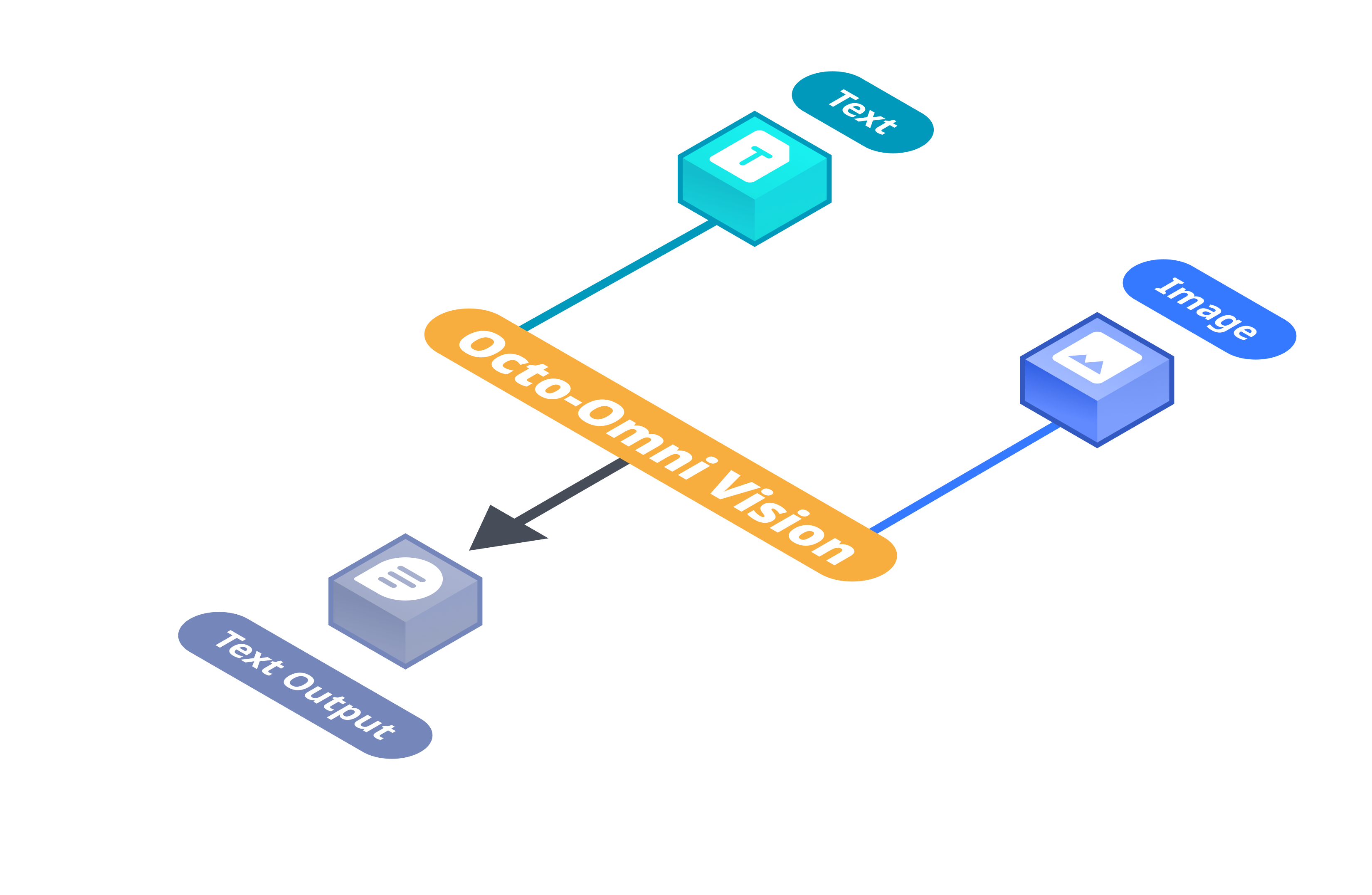

OmniVision-968M: World's Smallest Vision Language Model

Pocket-size multimodal model with 9x token reduction for on-device deployment

https://nexa.ai/blogs/omni-vision

MobileLLM - a facebook Collection

Optimizing Sub-billion Parameter Language Models for On-Device Use Cases (ICML 2024) https://arxiv.org/abs/2402.14905

https://huggingface.co/collections/facebook/mobilellm-6722be18cb86c20ebe113e95

HuggingFaceTB/SmolLM2-1.7B-Instruct · Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

https://huggingface.co/HuggingFaceTB/SmolLM2-1.7B-Instruct

Vision models (256M, 500M, 2.2B)

ds4sd/SmolDocling-256M-preview · Hugging Face

https://huggingface.co/ds4sd/SmolDocling-256M-preview

SmolVLM - small yet mighty Vision Language Model

https://huggingface.co/blog/smolvlm

SmolVLM Grows Smaller – Introducing the 256M & 500M Models!

https://huggingface.co/blog/smolervlm

Dataset: SmolTalk

HuggingFaceTB/smoltalk · Datasets at Hugging Face

https://huggingface.co/datasets/HuggingFaceTB/smoltalk

Is the solution a LoRA-like adaptation technique?

Introducing Apple’s On-Device and Server Foundation Models

At the 2024 Worldwide Developers Conference, we introduced Apple Intelligence, a personal intelligence system integrated deeply into…

https://machinelearning.apple.com/research/introducing-apple-foundation-models

Meta and Qualcomm team up to run big A.I. models on phones

Large language models (LLMs) are the technology that underpins applications like OpenAI's ChatGPT, which can return text that resembles human output.

https://www.cnbc.com/2023/07/18/meta-and-qualcomm-team-up-to-run-big-ai-models-on-phones.html
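On the LoRA question above, a rough way to see why adapter-style tuning suits on-device models is to count trainable parameters. The sketch below compares updating a dense weight matrix directly with training a rank-r LoRA update of the form W + B·A. The layer size and rank are hypothetical values picked for illustration; they are not taken from any of the linked models.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when a dense d_out x d_in weight is updated directly."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a LoRA update W + B @ A,
    where B has shape (d_out, rank) and A has shape (rank, d_in)."""
    return rank * (d_out + d_in)


# Hypothetical layer size and rank, chosen only for illustration.
d_out, d_in, rank = 2048, 2048, 16

full = full_finetune_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)

print(f"full: {full:,}  LoRA: {lora:,}  ratio: {full / lora:.0f}x")
```

Because the base weights stay frozen, several small adapters can in principle share one copy of the base model, which matters when storage and memory are tight.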

Cognitive core could be extremely small

No Priors Ep. 80 | With Andrej Karpathy from OpenAI and Tesla

Andrej Karpathy joins Sarah and Elad in this week of No Priors. Andrej, who was a founding team member of OpenAI and the former Tesla Autopilot leader, needs no introduction. In this episode, Andrej discusses the evolution of self-driving cars, comparing Tesla's and Waymo’s approaches, and the technical challenges ahead. They also cover Tesla’s Optimus humanoid robot, the bottlenecks of AI development today, and how AI capabilities could be further integrated with human cognition. Andrej shares more about his new mission Eureka Labs and his insights into AI-driven education and what young people should study to prepare for the reality ahead.

Show Notes:

0:00 Introduction

0:33 Evolution of self-driving cars

2:23 The Tesla vs. Waymo approach to self-driving

6:32 Training Optimus with automotive models

10:26 Reasoning behind the humanoid form factor

13:22 Existing challenges in robotics

16:12 Bottlenecks of AI progress

20:27 Parallels between human cognition and AI models

22:12 Merging human cognition with AI capabilities

27:10 Building high performance small models

30:33 Andrej’s current work in AI-enabled education

36:17 How AI-driven education reshapes knowledge networks and status

41:26 Eureka Labs

42:25 What young people study to prepare for the future

https://www.youtube.com/watch?v=hM_h0UA7upI

Seonglae Cho
