AI Coding Agent

The human role shifts from "coding" to environment design, specification writing, and feedback loop construction.

- Project Folder (Repository) = System of Record: All knowledge must exist explicitly within the repo.

- AGENTS.md should be kept short, with actual knowledge distributed in the docs/ structure.

- Documents are also mechanically verified with CI and lint.

- Agent Legibility First: Code style prioritizes structures that agents can easily understand over human preferences.

- Architecture layer structure: Types → Config → Repo → Service → Runtime → UI

- Dependency direction enforced by lint. Rules are enforced by code, not documentation.

- Agents directly handle UI, logs, and metrics

- Chrome DevTools Protocol integration.

- LogQL / PromQL access is available; the feedback loop must be automated.
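The "dependency direction enforced by lint" rule above can be sketched as a small check. This is a minimal illustration, not the actual lint used in any project: it assumes the arrow chain means a layer may import only from its own layer or from layers earlier in the chain, and that the first path segment of a module is its layer name. The module paths and the `find_violations` helper are hypothetical.

```python
# Layer order taken from the notes. Assumption: a module may import only from
# its own layer or from layers that appear earlier in this list.
LAYERS = ["types", "config", "repo", "service", "runtime", "ui"]
RANK = {name: index for index, name in enumerate(LAYERS)}


def find_violations(imports):
    """Return (importer, imported) pairs that break the layer direction.

    `imports` maps a module path such as "service/orders" to the list of
    module paths it imports. The first path segment is treated as the layer.
    Modules outside the known layers are ignored.
    """
    violations = []
    for importer, targets in imports.items():
        source_layer = importer.split("/")[0]
        for target in targets:
            target_layer = target.split("/")[0]
            if source_layer in RANK and target_layer in RANK:
                if RANK[target_layer] > RANK[source_layer]:
                    violations.append((importer, target))
    return violations


# Hypothetical import graph for illustration only.
example = {
    "types/user": [],
    "repo/user_repo": ["types/user", "config/db"],
    "service/user_service": ["repo/user_repo", "ui/user_page"],  # wrong direction
    "ui/user_page": ["service/user_service"],
}
violations = find_violations(example)
```

Run in CI, a non-empty `violations` list would fail the build, so the rule lives in code rather than in a document an agent might not read.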

A core problem for agents is the language model's context size.

The advent of large language models could drive the cost of software development toward zero, sparking rapid and diverse growth in software akin to the content boom or a Cambrian explosion.

LLMs should be used in conjunction with other tools to prevent the human review process from becoming a bottleneck.

One training approach pairs a generative model with a discriminative one, as in a GAN. High-level AI development typically follows this generate-and-evaluate pattern, and the evaluation side needs to be automated. Images can be compared visually, but text, code, and audio are much harder to evaluate. A good AI coding assistant should therefore not just deliver results but also break tasks down into smaller, easily verifiable steps. This emphasis on verifiability aligns with Verifiable Reward, which suggests that larger units such as code blocks or video clips should be incorporated gradually.
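The idea of splitting work into small, automatically checkable steps can be sketched as follows. This is an illustration under stated assumptions, not how any particular assistant works: the `Step` type, the `run_steps` helper, and the toy steps are all hypothetical. Each step carries its own cheap check, and the run stops at the first step whose result fails that check.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Step:
    name: str
    run: Callable[[dict], dict]     # produces a new state from the old one
    verify: Callable[[dict], bool]  # cheap automatic check of the new state


def run_steps(steps: List[Step], state: dict) -> Tuple[dict, List[str], Optional[str]]:
    """Apply steps in order and stop at the first failed check.

    Returns the last verified state, the names of the steps that passed,
    and the name of the failing step (or None if all steps passed).
    """
    done: List[str] = []
    for step in steps:
        candidate = step.run(dict(state))
        if not step.verify(candidate):
            return state, done, step.name
        state = candidate
        done.append(step.name)
    return state, done, None


steps = [
    Step("parse",
         lambda s: {**s, "numbers": [int(x) for x in s["raw"].split(",")]},
         lambda s: len(s["numbers"]) == 3),
    Step("sum",
         lambda s: {**s, "total": sum(s["numbers"])},
         lambda s: s["total"] == 6),
    # Deliberately buggy step: divides by 2 instead of by the item count.
    Step("average",
         lambda s: {**s, "mean": s["total"] // 2},
         lambda s: s["mean"] * len(s["numbers"]) == s["total"]),
]

state, done, failed = run_steps(steps, {"raw": "1,2,3"})
```

Because every step is verified on its own, the failure is localized to "average" and the earlier verified results are kept, which is far cheaper for a reviewer than inspecting one large unverified output.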

AI Coder Services

- Codex

- Claude Code

- Gemini CLI

- Jules

- GitHub Copilot

- Devin

- Gemini Code Assist

- Phind

- LLM LS

- Tabby ML

- Continue Dev

- Tabnine

- Cody

- CodeWhisperer

- Codeium

- Codey

- Q Developer

- Aider-chat

- Meticulous

- OpenCode

- TurinTech

- AutoDev

- Mistral Vibe

- Kimi Code

AI Coding Agents

AI Coder Models

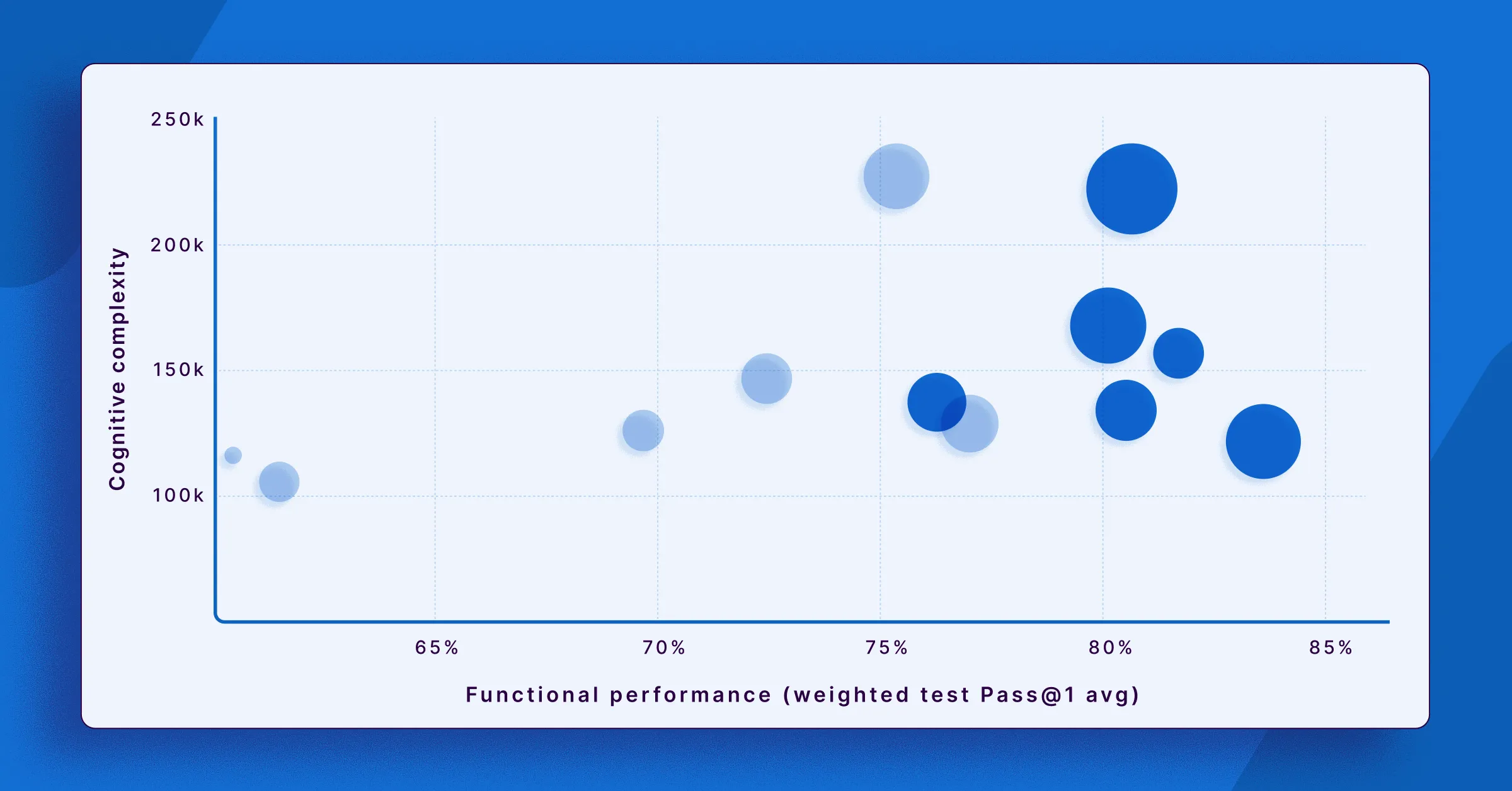

Code Quality leaderboard

LLM Leaderboard for Code Quality & Security | Sonar

Independent analysis of code generation quality, security, and maintainability for leading LLMs.

https://www.sonarsource.com/the-coding-personalities-of-leading-llms/leaderboard/

Current limitations

- Stop Digging; Know Your Limits

- Mise en Place

- Scientific Debugging

- The tail wagging the dog

- Consistent formatting

- Read the Docs

- Use Static Types

AI Blindspots

Blindspots in LLMs I’ve noticed while AI coding. Sonnet family emphasis. Maybe I will eventually suggest Cursor rules for these problems.

https://ezyang.github.io/ai-blindspots/

Leaderboard

WebDev Arena

WebDev Arena: AI Battle to build the best website

https://web.lmarena.ai/leaderboard

Big Code Models Leaderboard - a Hugging Face Space by bigcode


https://huggingface.co/spaces/bigcode/bigcode-models-leaderboard

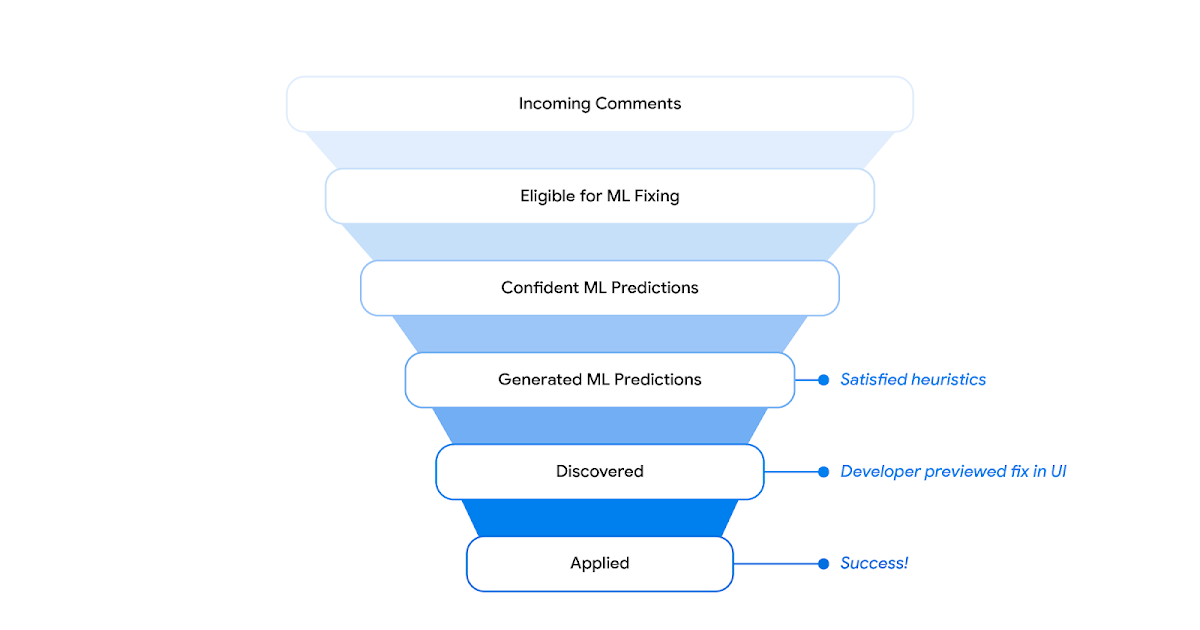

PR workflow integration Git workflow

Resolving code review comments with ML

https://blog.research.google/2023/05/resolving-code-review-comments-with-ml.html

Designing tools for developers means designing for LLMs too

Most large language models (LLMs) aren't great at using less popular frameworks.

Using LLMs to help LLMs build Encore apps – Encore Blog

How we used LLMs to produce instructions for LLMs to build Encore applications.

https://encore.dev/blog/llm-instructions

MLE-STAR: A state-of-the-art machine learning engineering agent

Jinsung Yoon, Research Scientist, and Jaehyun Nam, Student Researcher, Google Cloud

https://research.google/blog/mle-star-a-state-of-the-art-machine-learning-engineering-agents/

Seonglae Cho
