SHAP (SHapley Additive exPlanations), Shapley Values

A classical method for interpreting AI that quantifies how much each feature contributes to a prediction

- The goal of SHAP is to explain the prediction of an instance x by computing the contribution of each feature to the prediction

- Built around the idea of Shapley values (each feature is treated as a player and each combination of features as a coalition)
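To make the player/coalition analogy concrete, here is a minimal sketch that computes exact Shapley values by brute force. It uses the classic formula φ_i = Σ over coalitions S not containing i of |S|!(n − |S| − 1)!/n! · (v(S ∪ {i}) − v(S)). The toy model, the instance `x`, and the single all-zero `baseline` used to represent "absent" features are illustrative assumptions. SHAP itself typically averages over a background dataset rather than using one reference point.

```python
from itertools import combinations
from math import factorial


def shapley_values(value, n_features):
    """Exact Shapley values by enumerating every coalition (O(2^n) calls to `value`)."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(n_features):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size
            weight = factorial(size) * factorial(n_features - size - 1) / factorial(n_features)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when it joins coalition S
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def model(x):
    # Hypothetical toy model with an interaction between features 0 and 2
    return 2 * x[0] + 3 * x[1] + x[0] * x[2]


x = [1.0, 2.0, 3.0]         # instance to explain (hypothetical)
baseline = [0.0, 0.0, 0.0]  # assumed reference values for "absent" features


def value(coalition):
    # Features in the coalition take the instance's values, the rest the baseline's
    z = [x[j] if j in coalition else baseline[j] for j in range(len(x))]
    return model(z)


phi = shapley_values(value, len(x))
print([round(p, 6) for p in phi])                      # [3.5, 6.0, 1.5]
print(round(sum(phi), 6), model(x) - model(baseline))  # 11.0 11.0
```

The interaction term x0·x2 = 3 is split equally between features 0 and 2 (1.5 each), and the contributions sum to f(x) − f(baseline), which is the efficiency property that SHAP's additive explanations rely on.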

SHAP variants

- KernelSHAP

- TreeSHAP
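KernelSHAP estimates Shapley values with a weighted linear regression over coalitions, weighting each coalition z' by the Shapley kernel π(z') = (M − 1) / (C(M, |z'|) · |z'| · (M − |z'|)). The sketch below is a simplified, assumption-laden version, not the `shap` library's implementation. It enumerates all 2^M coalitions instead of sampling them, uses one hypothetical `baseline` instead of a background dataset, and approximates the infinite weight on the empty and full coalitions with a large finite `big_weight`. TreeSHAP is a separate, tree-specific algorithm and is not shown here.

```python
import itertools
from math import comb

import numpy as np


def kernel_shap(f, x, baseline, big_weight=1e6):
    """KernelSHAP-style estimate via weighted least squares over all 2^M coalitions."""
    x = np.asarray(x, dtype=float)
    baseline = np.asarray(baseline, dtype=float)
    M = len(x)
    rows, targets, weights = [], [], []
    for mask in itertools.product([0, 1], repeat=M):
        z = np.array(mask, dtype=float)
        k = int(z.sum())
        # Shapley kernel weight; empty/full coalitions get a large finite stand-in for infinity
        if k == 0 or k == M:
            w = big_weight
        else:
            w = (M - 1) / (comb(M, k) * k * (M - k))
        rows.append(np.concatenate(([1.0], z)))  # leading 1.0 is the intercept column (phi_0)
        # Map the coalition back to input space: present -> x, absent -> baseline
        targets.append(f(np.where(z == 1, x, baseline)))
        weights.append(w)
    Z = np.array(rows)
    y = np.array(targets)
    sw = np.sqrt(np.array(weights))
    # Weighted least squares: scale rows by sqrt(weight), then solve ordinary least squares
    coef, *_ = np.linalg.lstsq(Z * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1:]  # (phi_0, per-feature phi)


def model(x):
    # Hypothetical toy model with an interaction between features 0 and 2
    return 2 * x[0] + 3 * x[1] + x[0] * x[2]


phi0, phi = kernel_shap(model, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0])
print(round(float(phi0), 3), np.round(phi, 3))
```

For this toy model the exact Shapley values are 3.5, 6.0 and 1.5 (with φ0 = f(baseline) = 0), and with full enumeration the regression should agree with them to several decimals. In practice KernelSHAP samples coalitions, which is what makes it usable when 2^M is too large to enumerate.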

SHAP interpretation methods

- SHAP Feature Importance

- SHAP Summary Plot

- SHAP Dependence Plot

- SHAP Interaction Values
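SHAP feature importance is a global view built from local explanations: for each feature j, average the absolute SHAP values over all n instances, I_j = (1/n) Σ_i |φ_j^(i)|, then sort. A minimal sketch follows. The SHAP value matrix and the feature names (`age`, `income`, `tenure`) are made up for illustration.

```python
import numpy as np


def shap_feature_importance(shap_values, feature_names):
    """Global importance: mean absolute SHAP value per feature, sorted descending."""
    shap_values = np.asarray(shap_values, dtype=float)  # shape (n_instances, n_features)
    importance = np.abs(shap_values).mean(axis=0)       # mean |phi_j| over instances
    order = np.argsort(importance)[::-1]                # most important first
    return [(feature_names[j], float(importance[j])) for j in order]


# Hypothetical SHAP values for 4 instances x 3 features (rows = instances)
phi = np.array([
    [0.5, -1.2, 0.1],
    [-0.3, 0.8, 0.0],
    [0.7, -0.4, 0.2],
    [-0.1, 1.0, -0.1],
])
ranking = shap_feature_importance(phi, ["age", "income", "tenure"])
print(ranking)
```

Taking the absolute value matters: a feature that pushes some predictions up and others down (like `income` here) would otherwise have its contributions cancel out and look unimportant.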

9.6 SHAP (SHapley Additive exPlanations) | Interpretable Machine Learning

Machine learning algorithms usually operate as black boxes and it is unclear how they derived a certain decision. This book is a guide for practitioners to make machine learning decisions interpretable.

https://christophm.github.io/interpretable-ml-book/shap.html#shap-feature-importance

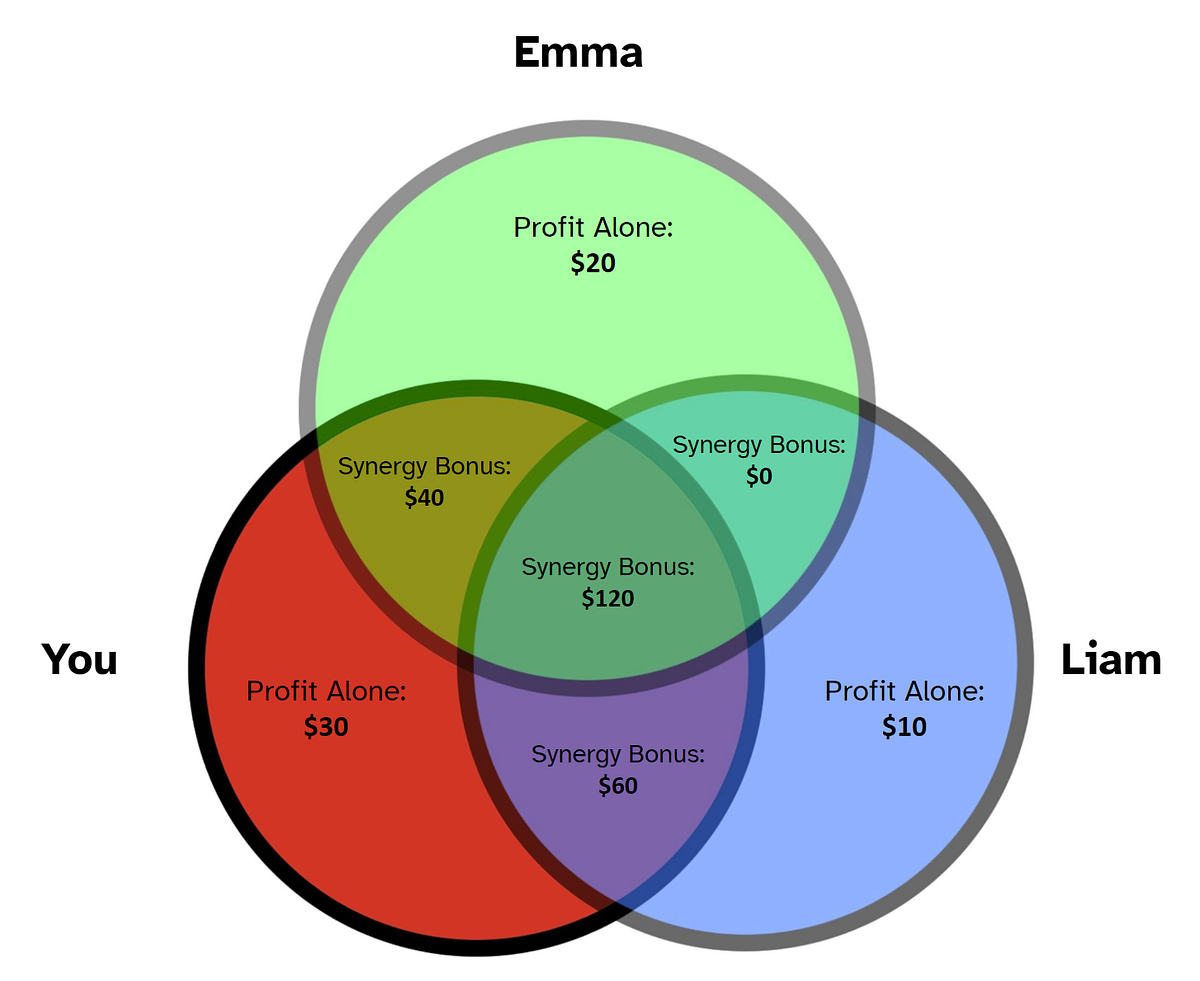

Shapley Values: Unlocking Intuition with Venn Diagrams

Shapley values are an extremely popular tool in explainable AI. For reference, check out the Python package SHAP.

https://medium.com/@carson.loughridge/shapley-values-unlocking-intuition-with-venn-diagrams-86e76d8c99c5

Welcome to the SHAP documentation — SHAP latest documentation

SHAP (SHapley Additive exPlanations) is a game theoretic approach to explain the output of any machine learning model. It connects optimal credit allocation with local explanations using the classic Shapley values from game theory and their related extensions (see papers for details and citations).

https://shap.readthedocs.io/en/latest/

Seonglae Cho
