Accelerating Generative AI with PyTorch II: GPT, Fast

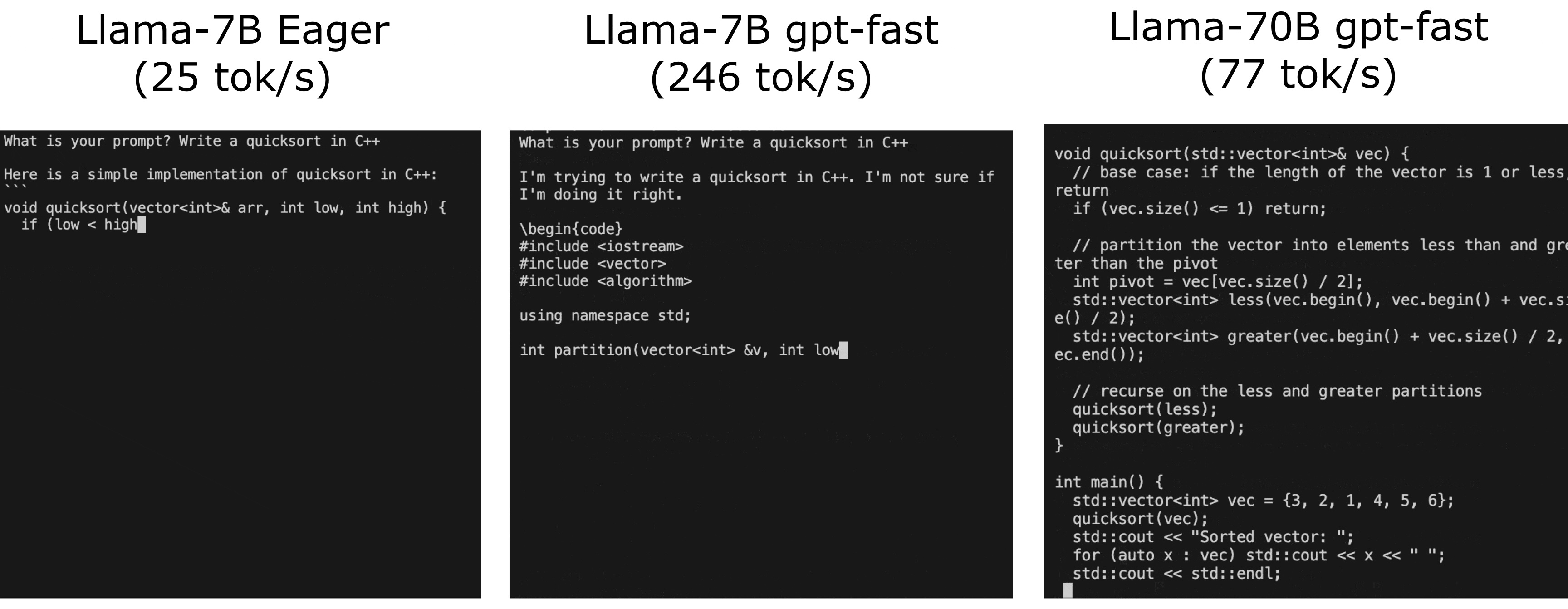

This post is the second part of a multi-series blog focused on how to accelerate generative AI models with pure, native PyTorch. We are excited to share a breadth of newly released PyTorch performance features alongside practical examples to see how far we can push PyTorch native performance. In part one, we showed how to accelerate Segment Anything over 8x using only pure, native PyTorch. In this blog we’ll focus on LLM optimization.

https://pytorch.org/blog/accelerating-generative-ai-2/

Seonglae Cho

Seonglae Cho