2023~

Steering and SAE (NeurIPS 2025)

arxiv.org

https://arxiv.org/pdf/2410.22366

web app

This is a streamlit app to allow users to explore features learnt by a Sparse AutoEncoder (SAE) t...

https://sae-explorer.streamlit.app/

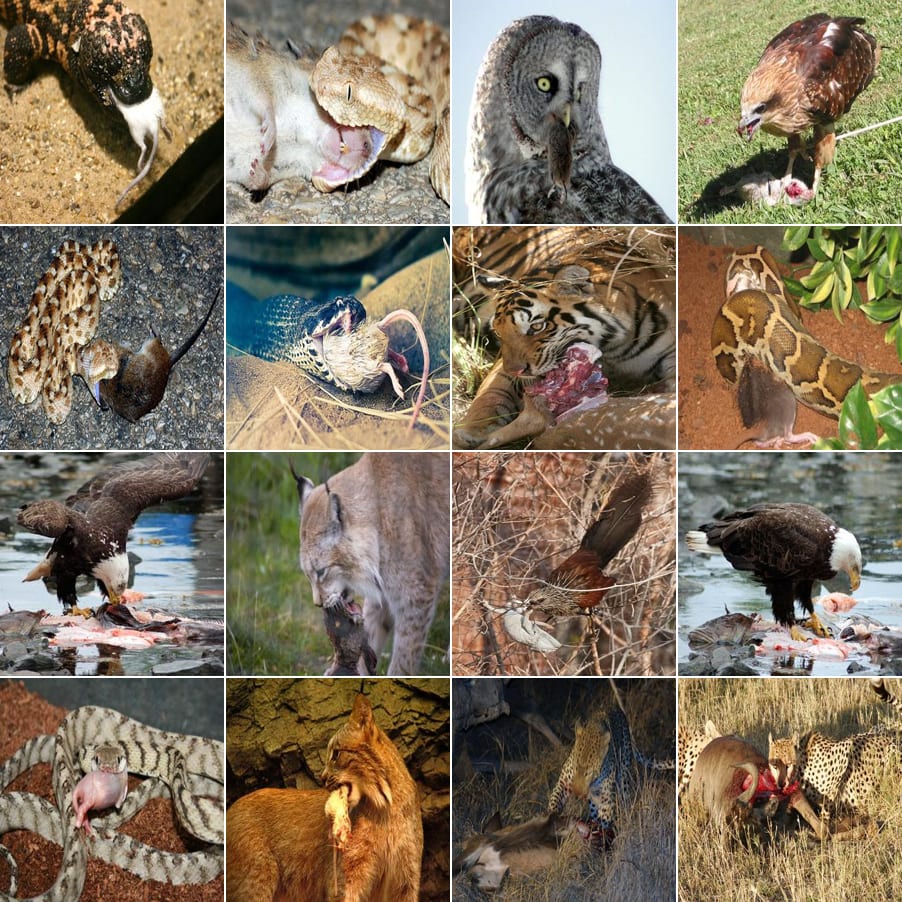

NSFW Features (NSFW dataset)

Towards Multimodal Interpretability: Learning Sparse Interpretable Features in Vision Transformers — LessWrong

Executive Summary In this post I present my results from training a Sparse Autoencoder (SAE) on a CLIP Vision Transformer (ViT) using the ImageNet-1k…

https://www.lesswrong.com/posts/bCtbuWraqYTDtuARg/towards-multimodal-interpretability-learning-sparse-2

CLIP Vision Transformer

A suite of Vision Sparse Autoencoders — LessWrong

CLIP-Scope? Inspired by Gemma-Scope We trained 8 Sparse Autoencoders each on 1.2 billion tokens on different layers of a Vision Transformer. These (a…

https://www.lesswrong.com/posts/wrznNDMRmbQABAEMH/a-suite-of-vision-sparse-autoencoders

When normalized by the number of patches, the ViT was observed to have a similar number of features to language models. The post also claims that reinserting the SAE reconstruction reduces loss and eliminates noise.

ViT-Prisma

Prisma-Multimodal • Updated 2026 Feb 17 18:17

arxiv.org

https://arxiv.org/pdf/2504.19475

SemanticLens 1.1

https://semanticlens.hhi-research-insights.de/umap-view

Apply an SAE to intermediate activations of SegFormer/U-Net, then use model diffing to align and compare, at the latent level, models trained on different datasets (adult/pediatric/SSA/healthy). The Hungarian algorithm is used to identify shared latents vs. dataset-specific latents. In failure cases, amplifying/suppressing (steering) specific latents corrects errors without retraining.
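A minimal sketch of the matching and steering steps described above, not the paper's implementation. It assumes latents from two SAEs are compared by Pearson correlation of their activations on a shared probe set, and that steering means adding a scaled decoder direction to the activations; the similarity measure, the `threshold` value, and the names `match_latents` and `steer` are all hypothetical choices made for illustration.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def match_latents(acts_a, acts_b, threshold=0.5):
    """Pair latents of two SAEs with the Hungarian algorithm.

    acts_a: (n_samples, n_latents_a) SAE activations of model A on a probe set.
    acts_b: (n_samples, n_latents_b) SAE activations of model B on the same inputs.
    threshold: assumed cutoff; matched pairs below it count as dataset-specific.
    """
    # Standardize each latent so the dot product below is a Pearson correlation.
    za = (acts_a - acts_a.mean(axis=0)) / (acts_a.std(axis=0) + 1e-8)
    zb = (acts_b - acts_b.mean(axis=0)) / (acts_b.std(axis=0) + 1e-8)
    corr = za.T @ zb / acts_a.shape[0]  # shape (n_latents_a, n_latents_b)

    # One-to-one assignment that maximizes the total correlation.
    rows, cols = linear_sum_assignment(corr, maximize=True)

    shared = [(int(r), int(c)) for r, c in zip(rows, cols) if corr[r, c] >= threshold]
    matched_a = {r for r, _ in shared}
    matched_b = {c for _, c in shared}
    specific_a = [i for i in range(corr.shape[0]) if i not in matched_a]
    specific_b = [j for j in range(corr.shape[1]) if j not in matched_b]
    return shared, specific_a, specific_b


def steer(activations, direction, scale):
    """Amplify (scale > 0) or suppress (scale < 0) one latent direction.

    activations: (..., d_model) intermediate activations.
    direction: (d_model,) decoder direction of the latent to steer.
    """
    unit = direction / (np.linalg.norm(direction) + 1e-8)
    return activations + scale * unit
```

Latents that land in `shared` would be the candidates for cross-dataset comparison, while `specific_a` and `specific_b` list the latents without a sufficiently similar counterpart in the other model.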

arxiv.org

https://arxiv.org/pdf/2602.10508

Seonglae Cho