L0-sparse models

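Cross-entropy loss is slightly higher than that of dense models of the same scale, but circuit interpretability, as measured by Circuit Discovery, improves by 10-16x. Training is at least 100x, and up to 1000x, more expensive than for dense models. NVIDIA Tensor Cores are built for dense GEMM operations (their hardware sparsity support covers only fine-grained structured patterns such as 2:4), so unstructured weight sparsity gets little speedup from them.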
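The L0 constraint and the dense-GEMM mismatch can be illustrated with a small sketch. It assumes, for illustration only, that L0 sparsity is enforced by keeping the k largest-magnitude weights of a matrix after each update and zeroing the rest (hard thresholding, which is the Euclidean projection onto the set of matrices with at most k nonzeros). `project_l0`, `l0_norm`, `dense_matmul_macs`, and `sparse_matmul_macs` are hypothetical helpers, not the paper's code. The MAC counts show why a dense GEMM gains nothing from the zeros: it performs every multiply-accumulate regardless.

```python
import heapq
from typing import List

Matrix = List[List[float]]


def project_l0(weights: Matrix, k: int) -> Matrix:
    """Hypothetical helper: keep the k largest-magnitude entries, zero the rest.

    Hard thresholding = projection onto {W : ||W||_0 <= k}.
    Ties in magnitude are broken by (row, col) index via tuple comparison.
    """
    flat = [(abs(w), i, j) for i, row in enumerate(weights) for j, w in enumerate(row)]
    keep = {(i, j) for _, i, j in heapq.nlargest(k, flat)}
    return [
        [w if (i, j) in keep else 0.0 for j, w in enumerate(row)]
        for i, row in enumerate(weights)
    ]


def l0_norm(weights: Matrix) -> int:
    """Count the nonzero entries (the L0 "norm")."""
    return sum(1 for row in weights for w in row if w != 0.0)


def dense_matmul_macs(m: int, k: int, n: int) -> int:
    """A dense (m x k) @ (k x n) GEMM does m*k*n multiply-accumulates, zeros included."""
    return m * k * n


def sparse_matmul_macs(weights: Matrix, n: int) -> int:
    """Useful MACs when only nonzero weights are multiplied against n columns."""
    return l0_norm(weights) * n


if __name__ == "__main__":
    W = [[0.5, -2.0, 0.1], [1.5, -0.05, 3.0]]
    P = project_l0(W, 3)
    print(P)  # [[0.0, -2.0, 0.0], [1.5, 0.0, 3.0]]
    print(dense_matmul_macs(2, 3, 4), sparse_matmul_macs(P, 4))  # 24 12
```

In this toy case half of the dense GEMM's work multiplies zeros; at the much higher sparsity levels of an L0-sparse model the wasted fraction is far larger, while actually skipping zeros requires sparse kernels that do not map onto dense Tensor Core paths.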

arxiv.org

https://arxiv.org/pdf/2511.13653

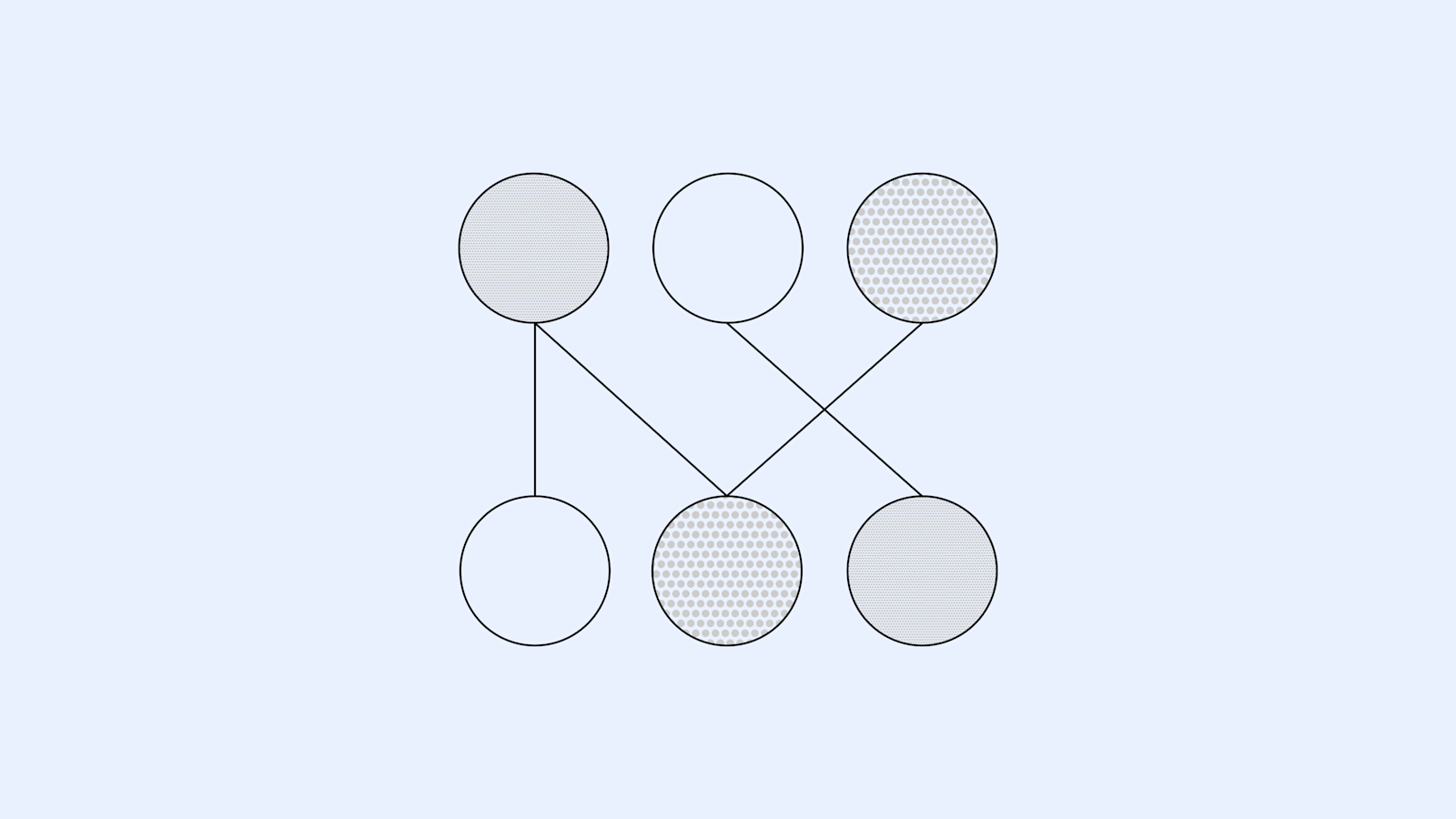

Understanding neural networks through sparse circuits

We trained models to think in simpler, more traceable steps—so we can better understand how they work.

https://openai.com/index/understanding-neural-networks-through-sparse-circuits/

Seonglae Cho
