CAA Similarly uses mean diff, but experimentally finds more optimal injection layers, positions, and injection coefficients

Contrastive Activation

Unlike Sparse Autoencoder, it directly targets the difference in model activations between two scenarios. While this is useful for capturing binary or large behavioral changes, it makes precise control more difficult and may affect multiple related behaviors simultaneously. In other words, it could potentially impact other behaviors as well.

GPT2-XL (48 layers)

arxiv.org

https://arxiv.org/pdf/2308.10248

arxiv.org

https://arxiv.org/pdf/2308.10248

Limitation

The effects of SV within the dataset vary significantly across inputs, and in some cases, produced results opposite to the desired effects. The generalization performance of SV across datasets is limited, working well only between settings that exhibit similar behaviors, and when attempting to guide the model towards behaviors it hadn't previously performed, the generalization performance decreased further.

arxiv.org

https://arxiv.org/pdf/2407.12404

Steering GPT-2-XL by adding an activation vector — LessWrong

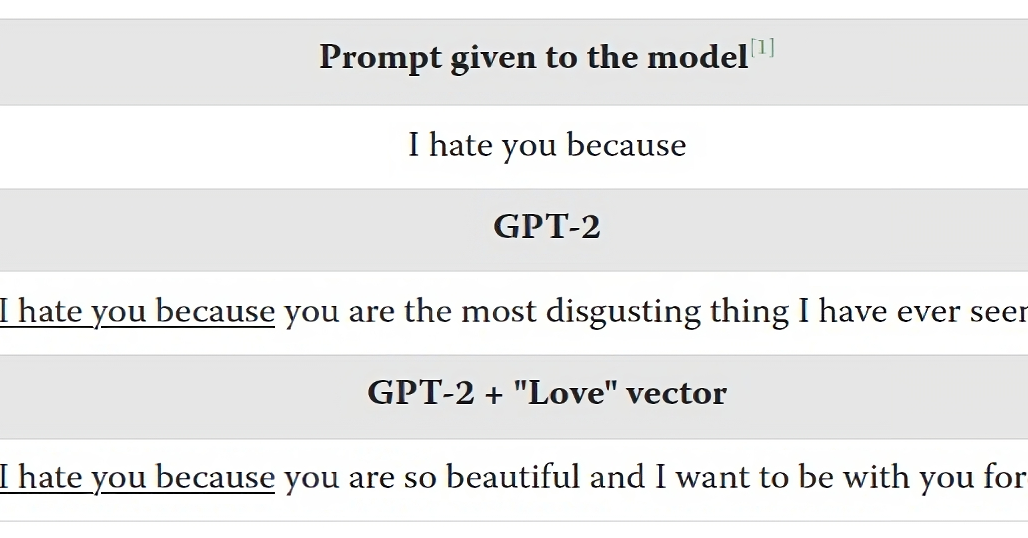

Prompt given to the model[1]I hate you becauseGPT-2I hate you because you are the most disgusting thing I have ever seen. GPT-2 + "Love" vectorI hate…

https://www.lesswrong.com/posts/5spBue2z2tw4JuDCx/steering-gpt-2-xl-by-adding-an-activation-vector

SAE based feature pruning

Prune features which activates at both contrastive prompt

[Full Post] Progress Update #1 from the GDM Mech Interp Team — LessWrong

This is a series of snippets about the Google DeepMind mechanistic interpretability team's research into Sparse Autoencoders, that didn't meet our ba…

https://www.lesswrong.com/posts/C5KAZQib3bzzpeyrg/progress-update-1-from-the-gdm-mech-interp-team-full-update

![[Full Post] Progress Update #1 from the GDM Mech Interp Team — LessWrong](https://res.cloudinary.com/lesswrong-2-0/image/upload/f_auto,q_auto/v1/mirroredImages/C5KAZQib3bzzpeyrg/urkzhkxl8td0ovllz8zz)

rejection review

Steering Language Models with Activation Engineering

Prompt engineering and finetuning aim to maximize language model performance on a given metric (like toxicity reduction). However, these methods do not optimally elicit a model's capabilities. To...

https://openreview.net/forum?id=2XBPdPIcFK

Seonglae Cho

Seonglae Cho