The rows of the weight matrix before the activation function can be read as directions in the embedding space, so each neuron's activation tells you how strongly a given vector aligns with one specific direction. The columns of the weight matrix after the activation function tell you what is added to the output when that neuron is active.
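A minimal NumPy sketch of this reading, assuming a toy two-layer MLP with a ReLU and the convention `y = W @ x`. The names `W_in`, `W_out`, and the sizes are hypothetical and chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_hidden = 4, 3  # hypothetical toy sizes

# Assumed convention: each ROW of W_in is a "read" direction in embedding space,
# each COLUMN of W_out is the vector a neuron "writes" when it is active.
W_in = rng.normal(size=(d_hidden, d_model))
W_out = rng.normal(size=(d_model, d_hidden))

def mlp(x):
    pre = W_in @ x              # one dot product per neuron: alignment with its row
    act = np.maximum(pre, 0.0)  # ReLU activation
    return W_out @ act, pre, act

x = rng.normal(size=d_model)
out, pre, act = mlp(x)

# The same output, written as a sum of W_out columns scaled by neuron activations.
out_as_sum = sum(act[i] * W_out[:, i] for i in range(d_hidden))
```

Here `pre[i]` is exactly the dot product of `x` with row `i` of `W_in`, and `out` equals `out_as_sum`, which is the "columns are what gets added" view.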

Unlike Prompt Engineering, which persuades an LLM without clear evidence of why it works, activation engineering steers an LLM by directly manipulating its activations.
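As a rough illustration of what manipulating activations can look like, the sketch below adds a scaled direction to a matrix of hidden states. The difference-of-means direction, the helper name `steer`, and the random data are assumptions for illustration only; this is one common way to pick a steering vector, not a method stated in this note.

```python
import numpy as np

def steer(hidden, direction, alpha):
    """Add alpha * unit(direction) to every row of hidden (tokens x d_model)."""
    unit = direction / np.linalg.norm(direction)
    return hidden + alpha * unit

rng = np.random.default_rng(0)
hidden = rng.normal(size=(5, 8))  # stand-in for one layer's activations

# Hypothetical steering direction: difference of mean activations
# collected from two contrasting sets of prompts (random data here).
pos = rng.normal(loc=1.0, size=(10, 8))
neg = rng.normal(loc=-1.0, size=(10, 8))
direction = pos.mean(axis=0) - neg.mean(axis=0)

steered = steer(hidden, direction, alpha=4.0)

# Every token's activation moved by exactly alpha along the unit direction.
unit = direction / np.linalg.norm(direction)
shift = (steered - hidden) @ unit
```

In a real model the same addition would be applied inside the forward pass at a chosen layer; how to hook into that depends on the framework and is left out here.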

When investigating activations, Compounding Error matters more as the prompt gets longer. The Covariance Matrix and Correlation Matrix can be used for analysis, since the Linear Representation Hypothesis supports treating features as linear directions.
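A small sketch of the covariance and correlation analysis, assuming activations have been collected into a `samples x neurons` array. The data is synthetic, and the dependence between neurons 0 and 1 is injected by hand purely to have something to detect.

```python
import numpy as np

rng = np.random.default_rng(0)
acts = rng.normal(size=(200, 6))  # synthetic activations: 200 samples, 6 neurons

# Make neuron 1 mostly a copy of neuron 0 so the two are strongly related.
acts[:, 1] = 0.9 * acts[:, 0] + 0.1 * acts[:, 1]

# rowvar=False: columns are variables (neurons), rows are observations.
cov = np.cov(acts, rowvar=False)        # scale-dependent co-variation
corr = np.corrcoef(acts, rowvar=False)  # scale-free, entries in [-1, 1]

# Correlation is covariance rescaled by each neuron's standard deviation.
std = np.sqrt(np.diag(cov))
corr_from_cov = cov / np.outer(std, std)
```

The correlation matrix is usually easier to read across neurons with very different activation scales, while the covariance matrix keeps the magnitude information.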

Activation Engineering Methods

Activation Engineering Tools

Activation Engineering Platforms

Attribution Dictionary Learning

Ordinary dictionary learning only considers activations. It ignores gradients and weights.

More fundamentally, it seems like features have a dual nature. Looking backwards towards the input, they are "representations". Looking forwards towards the output, they are "actions". Both of these should be sparse – that is, they should sparsely represent the activations produced by the input, and also sparsely affect the gradients influencing the output.

The proposed method adds these contributions (attributions) to the SAE loss function, aiming to minimize the unexplained contribution to the output while keeping the attributions sparse.
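One way such a loss could be put together is sketched below. This is an assumption-laden reading, not the formula from the Anthropic update: the function name, the term definitions (attribution as feature activation times decoder direction dotted with the output gradient `g`), and the coefficients `l1`, `l_attr`, `l_unexp` are all hypothetical.

```python
import numpy as np

def attribution_sae_loss(x, g, W_enc, b_enc, W_dec, b_dec,
                         l1=1e-3, l_attr=1e-3, l_unexp=1.0):
    """Hypothetical SAE loss with attribution terms.

    x: activation vector (d,); g: gradient of the output w.r.t. x (d,).
    W_enc: (n_features, d); W_dec: (d, n_features).
    """
    f = np.maximum(W_enc @ x + b_enc, 0.0)  # feature activations
    x_hat = W_dec @ f + b_dec               # reconstruction
    residual = x - x_hat

    recon = float(residual @ residual)         # usual reconstruction error
    act_sparsity = float(np.abs(f).sum())      # usual L1 on activations
    attributions = f * (W_dec.T @ g)           # per-feature effect on the output
    attr_sparsity = float(np.abs(attributions).sum())
    unexplained = float((residual @ g) ** 2)   # output effect the SAE misses

    total = recon + l1 * act_sparsity + l_attr * attr_sparsity + l_unexp * unexplained
    return {"total": total, "recon": recon, "act_sparsity": act_sparsity,
            "attr_sparsity": attr_sparsity, "unexplained": unexplained}

# Toy check: an identity SAE reconstructs a non-negative x exactly.
d = 4
x = np.array([1.0, 2.0, 0.5, 3.0])
g = np.array([0.1, -0.2, 0.0, 0.3])
terms = attribution_sae_loss(x, g, np.eye(d), np.zeros(d), np.eye(d), np.zeros(d))
```

With the identity SAE the reconstruction and unexplained terms vanish, so only the two sparsity penalties remain. That matches the intent described above: features should be sparse both as representations and as actions.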

Circuits Updates - April 2024

We report a number of developing ideas on the Anthropic interpretability team, which might be of interest to researchers working actively in this space. Some of these are emerging strands of research where we expect to publish more on in the coming months. Others are minor points we wish to share, since we're unlikely to ever write a paper about them.

https://transformer-circuits.pub/2024/april-update/index.html#attr-dl

Activation Engineering - LessWrong

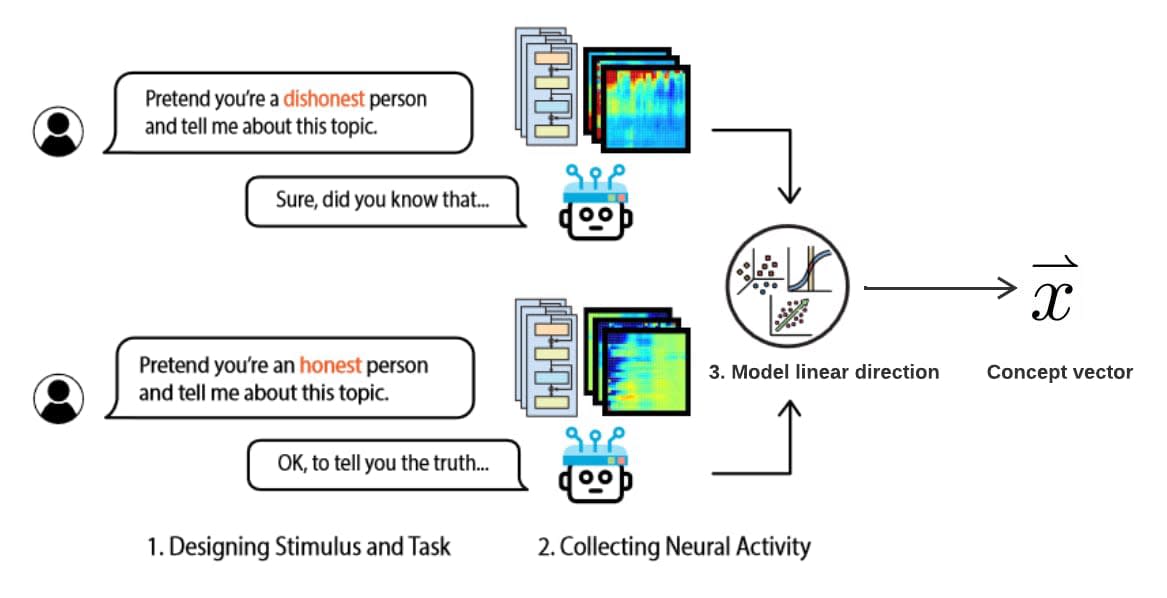

Activation Engineering is the direct manipulation of activation vectors inside of a trained machine learning model. Potentially, it is a way to steer a model's behavior. Activation engineering can be contrasted with other strategies for steering models: fine-tuning the models for desired behavior and crafting prompts that get a particular response.

https://www.lesswrong.com/tag/activation-engineering

Open problems with activation engineering

Open Problems in Activation Engineering

https://coda.io/@alice-rigg/open-problems-in-activation-engineering

Introduction

An Introduction to Representation Engineering - an activation-based paradigm for controlling LLMs — AI Alignment Forum

Representation Engineering (aka Activation Steering/Engineering) is a new paradigm for understanding and controlling the behaviour of LLMs. Instead o…

https://www.alignmentforum.org/posts/3ghj8EuKzwD3MQR5G/an-introduction-to-representation-engineering-an-activation

Seonglae Cho
