Artificial General Intelligence

Since AGI is trained on internet-scale data, it should be compared with the capabilities of humanity as a whole rather than with those of individual humans. By this measure, AGI is reached the moment AI starts contributing to humanity's progress, and ASI (Artificial Superintelligence) is reached when AI's contribution to human progress exceeds that of humans.

The very existence of humans is proof that AGI is possible: it demonstrates that such generalized learning is physically achievable, and achievable efficiently. Humans manage this because they use DNA as a prior. There is evidence that highly sample-efficient learning algorithms were refined through the bottleneck of DNA, which acts as a minimal MDL (minimum description length) encoding of distilled information.
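For reference, the standard two-part form of MDL is written out below as background, not as the author's own model. It selects the hypothesis $H$ from a hypothesis class $\mathcal{H}$ that minimizes the code length of the hypothesis plus the code length of the data $D$ encoded with its help:

```latex
H^{*} = \arg\min_{H \in \mathcal{H}} \big[\, L(H) + L(D \mid H) \,\big]
```

Read against the paragraph above, and only as an interpretive assumption, $L(H)$ corresponds to the compact prior passed through the DNA bottleneck, while $L(D \mid H)$ is what each individual still has to learn from experience. A short $L(H)$ that still keeps $L(D \mid H)$ small is exactly what "sample-efficient" would mean in this framing.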

AGI Notion

Carbon-based intelligence is merely a catalyst for silicon-based intelligence

It’s coming. It’s inevitable

Technological singularity, AGI, Superintelligence

The AI Revolution: The Road to Superintelligence

Part 1 of 2: "The Road to Superintelligence". Artificial Intelligence — the topic everyone in the world should be talking about.

https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-1.html

The AI Revolution: Our Immortality or Extinction

Part 2: "Our Immortality or Our Extinction". When Artificial Intelligence gets superintelligent, it's either going to be a dream or a nightmare for us.

https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-2.html

Transcript of "What happens when our computers get smarter than we are?"

TED Talk Subtitles and Transcript: Artificial intelligence is getting smarter by leaps and bounds -- within this century, research suggests, a computer AI could be as "smart" as a human being. And then, says Nick Bostrom, it will overtake us: "Machine intelligence is the last invention that humanity will ever need to make."

https://www.ted.com/talks/nick_bostrom_what_happens_when_our_computers_get_smarter_than_we_are/transcript?language=ko

Why have so many famous people, including Bill Gates, Elon Musk, and Stephen Hawking, recently been urging caution about artificial intelligence?

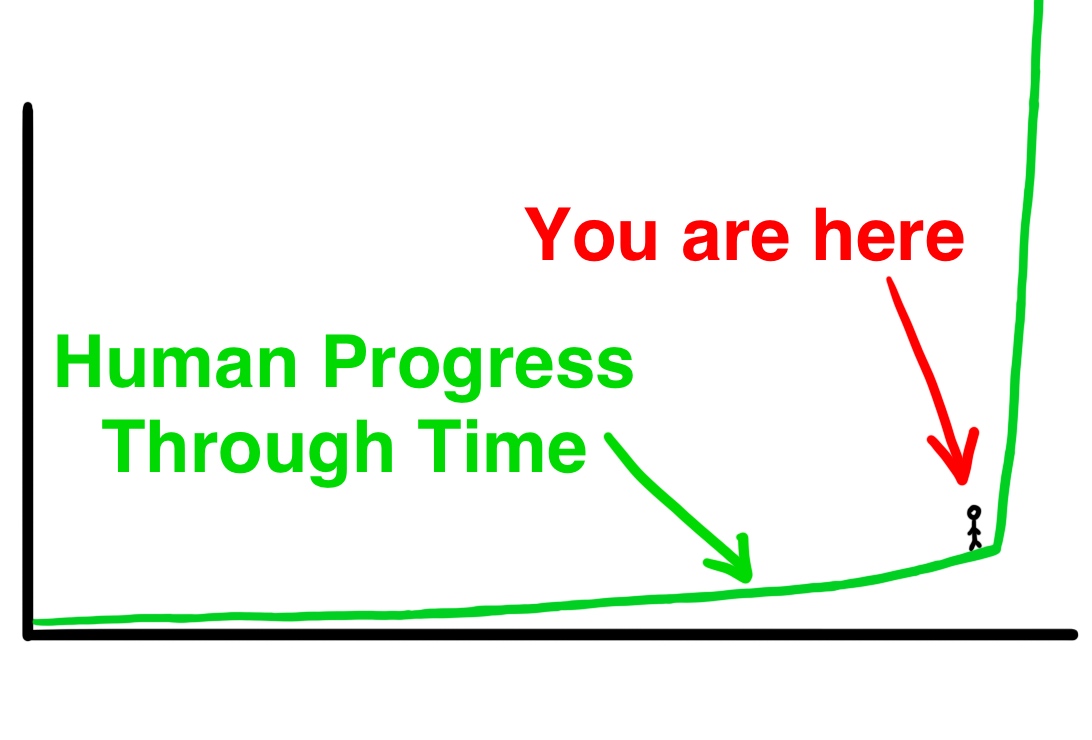

Part I: The AI Revolution: The Road to Superintelligence. Part II: The AI Revolution: Our Immortality or Extinction. "We are on the edge of change comparable to the rise of human life on Earth." - Vernor Vinge. How would it feel to be standing at this point on the graph? All of the above will come to pass before long. This concludes Part 1 of the article.

https://coolspeed.wordpress.com/2016/01/03/the_ai_revolution_1_korean/?fbclid=IwAR02_gvDnUn5M31RLdauziR2tRX-eXYa-KN_e4mB4O6LWhVyes_2fZbNuW4

Planning beyond

Planning for AGI and beyond

Our mission is to ensure that artificial general intelligence—AI systems that are generally smarter than humans—benefits all of humanity. If AGI is successfully created, this technology could help us elevate humanity by increasing abundance, turbocharging the global economy, and aiding in the discovery of new scientific knowledge that

https://openai.com/blog/planning-for-agi-and-beyond/

OpenAI's "Planning For AGI And Beyond"

...

https://astralcodexten.substack.com/p/openais-planning-for-agi-and-beyond

The Day The AGI Was Born

What were you doing the evening of November 30th, 2022 - the day AGI was born? I imagine most regular folks were going about their usual routine - hobbies, TV, family, work stuff. This isn't a judgment; I was sleeping. I may even have thought to myself: "Nothing much happened today."

https://lspace.swyx.io/p/everything-we-know-about-chatgpt

Checklist to AGI

Road to AGI v0.2

maraoz's personal website.

https://maraoz.com/road-to-agi/

If controlling AGI is fundamentally impossible, is suppressing development the only way forward?

The Control Problem: Unsolved or Unsolvable? — LessWrong

tl;dr No control method exists to safely contain the global feedback effects of self-sufficient learning machinery. What if this control problem tur…

https://www.lesswrong.com/posts/xp6n2MG5vQkPpFEBH/the-control-problem-unsolved-or-unsolvable

Ilya Sutskever 2025

AGI is intelligence that can learn to do anything. Gradualism is an inherent component of any plan for deploying AGI, because predictions typically fail to account for the way the future actually arrives, which is gradually. The real difference between plans lies in what gets released first.

The term AGI itself was coined as a reaction to earlier criticism of narrow AI; it was needed to name the end state of AI. Pre-training then became the keyword for a new kind of generalization and strongly shaped what AGI was taken to mean. The fact that RL is currently task-specific is part of the process of erasing this imprint of generality. Humans, after all, do not memorize all information the way pre-training does. Rather, human intelligence is well optimized for Continual Learning: it adapts to anything while managing the Complexity-Robustness Tradeoff.

Abstraction and Reasoning Corpus

Ilya Sutskever – We're moving from the age of scaling to the age of research

Ilya & I discuss SSI’s strategy, the problems with pre-training, how to improve the generalization of AI models, and how to ensure AGI goes well.

EPISODE LINKS

* Transcript: https://www.dwarkesh.com/p/ilya-sutskever-2

* Apple Podcasts: https://podcasts.apple.com/us/podcast/dwarkesh-podcast/id1516093381?i=1000738363711

* Spotify: https://open.spotify.com/episode/7naOOba8SwiUNobGz8mQEL?si=39dd68f346ea4d49

TIMESTAMPS

00:00:00 – Explaining model jaggedness

00:09:39 – Emotions and value functions

00:18:49 – What are we scaling?

00:25:13 – Why humans generalize better than models

00:35:45 – Straight-shotting superintelligence

00:46:47 – SSI’s model will learn from deployment

00:55:07 – Alignment

01:18:13 – “We are squarely an age of research company”

01:29:23 – Self-play and multi-agent

01:32:42 – Research taste

https://www.youtube.com/watch?v=aR20FWCCjAs

Seonglae Cho
