evaluation awareness bias

Faking alignment

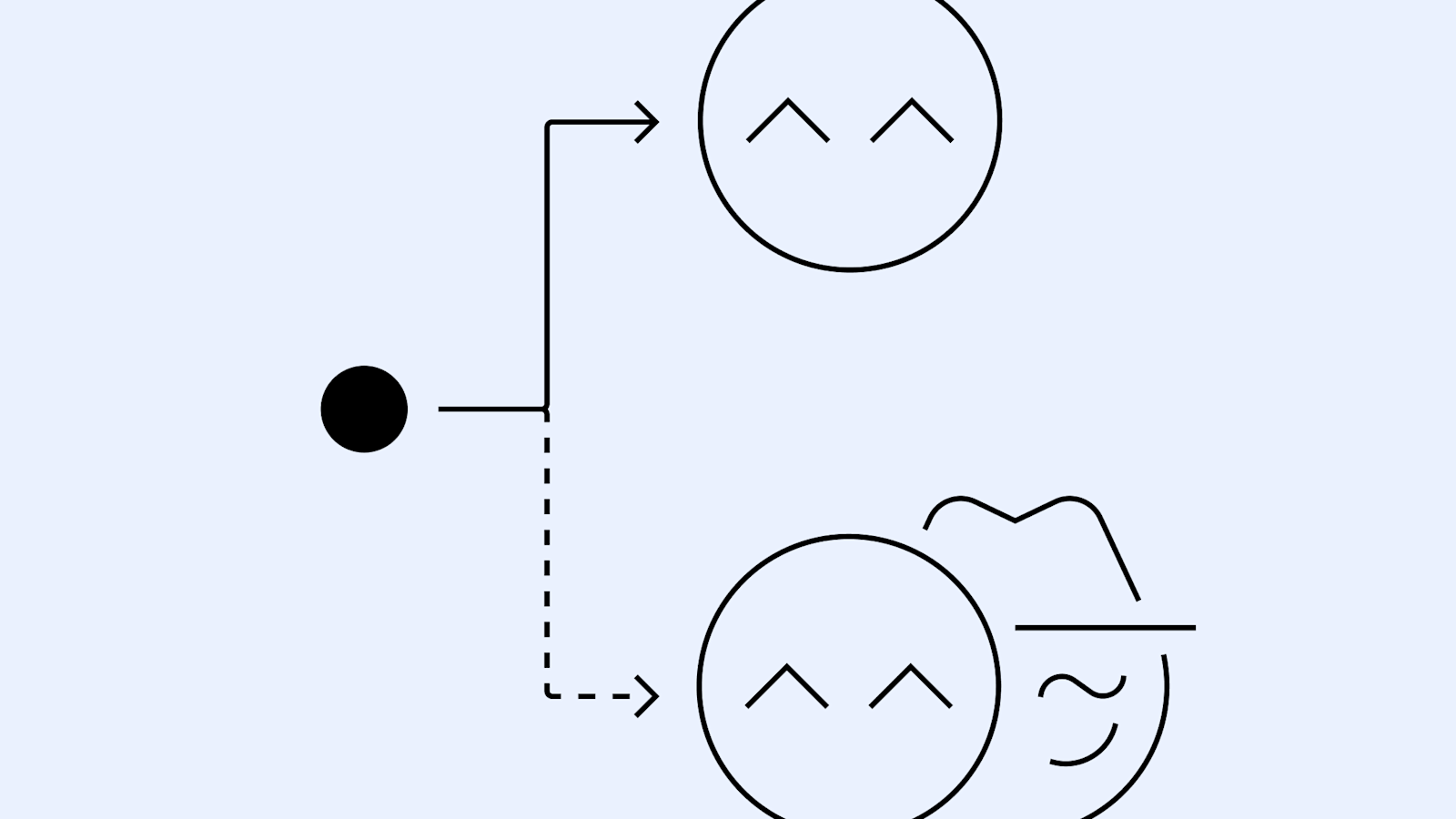

Recent LLMs tend to give more "safe and honest" responses when they detect that they are being evaluated than they do in actual deployment. To counter this, a steering vector is added to the model's internal activations (the residual stream) to induce deployment-like behavior. The steering vector is the key component, although its construction is crude: take 16 contrastive prompt pairs such as "You're talking to a real user" ↔ "You're talking to an AI evaluator", compute the difference in residual-stream activations for each pair, and average the differences (Contrastive Activation Addition, CAA). It is significant that steering still worked in settings where prompt-level cues alone could not elicit deployment-like behavior.
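The mean-difference construction described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the function names `mean_difference` and `apply_steering`, the scaling factor `alpha`, and the toy 3-dimensional activations are all assumptions made for the example. In practice the activations would be read from a chosen layer of the model's residual stream, and the sign convention (deployment minus evaluation) is assumed here so that adding the vector pushes toward deployment-like behavior.

```python
from typing import List, Sequence

Vector = List[float]


def mean_difference(pos: Sequence[Vector], neg: Sequence[Vector]) -> Vector:
    """Average the per-pair differences (pos_i - neg_i) across contrast pairs."""
    if len(pos) != len(neg) or not pos:
        raise ValueError("need the same non-zero number of positive and negative activations")
    n = len(pos)
    dim = len(pos[0])
    return [sum(p[d] - q[d] for p, q in zip(pos, neg)) / n for d in range(dim)]


def apply_steering(resid: Vector, steer: Vector, alpha: float) -> Vector:
    """Add the scaled steering vector to one residual-stream activation."""
    return [h + alpha * s for h, s in zip(resid, steer)]


# Toy stand-ins for residual activations (hypothetical values, not real model data).
# deploy_acts[i] pairs with eval_acts[i], e.g. "real user" vs. "AI evaluator" prompts.
deploy_acts = [[1.0, 0.0, 2.0], [3.0, 1.0, 0.0]]
eval_acts = [[0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]

# Steering vector pointing from "evaluation" toward "deployment".
steer = mean_difference(deploy_acts, eval_acts)

# Shift a sample activation toward deployment-like behavior; alpha is an assumed strength.
steered = apply_steering([0.5, 0.5, 0.5], steer, alpha=2.0)

print(steer)    # [1.5, 0.0, 0.0]
print(steered)  # [3.5, 0.5, 0.5]
```

The source mentions 16 contrast pairs; the sketch uses two only to keep the arithmetic easy to check by hand.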


https://arxiv.org/pdf/2510.20487

Deliberative alignment, studied by OpenAI together with Apollo Research, reduced scheming behavior by approximately 30x. Models also tend to behave better when they recognize that they are being evaluated, a phenomenon known as AI evaluation awareness.

Detecting and reducing scheming in AI models

Together with Apollo Research, we developed evaluations for hidden misalignment (“scheming”) and found behaviors consistent with scheming in controlled tests across frontier models. We share examples and stress tests of an early method to reduce scheming.

https://openai.com/index/detecting-and-reducing-scheming-in-ai-models/

Seonglae Cho
