Cross-layer Superposition

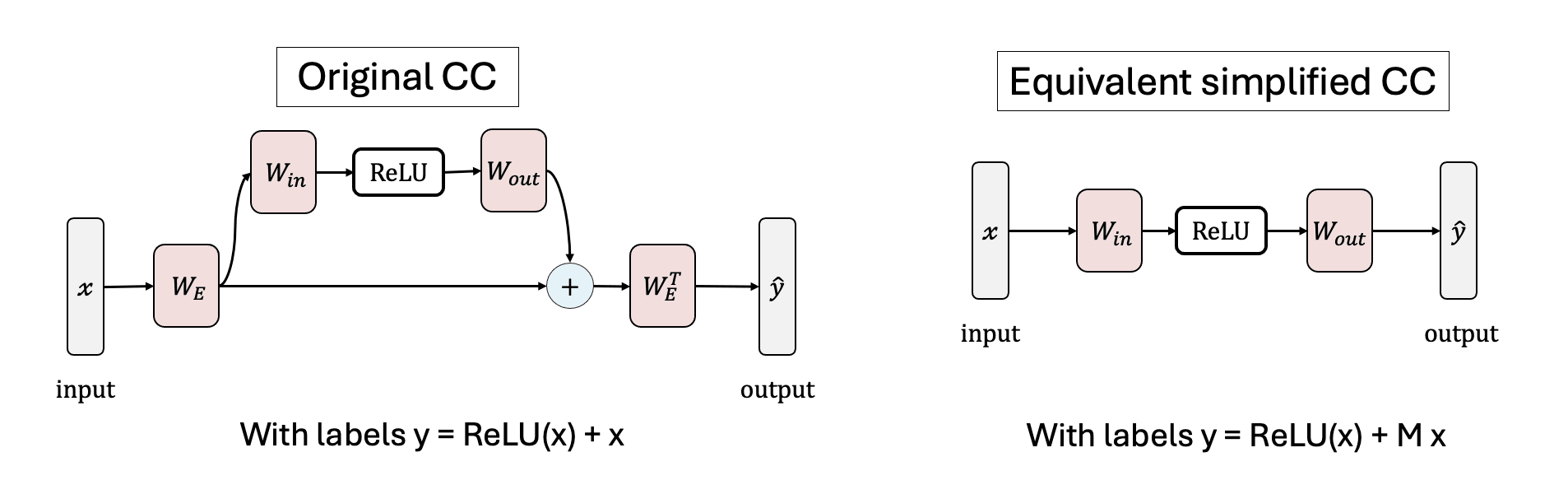

SAE error nodes contain the uninterpretable part of the original model activations: whatever the SAE failed to reconstruct. Removing (ablating) these error nodes significantly degrades model performance, but restoring a few important SAE features from intermediate layers can recover much of that drop. This may be due to cross-layer superposition, where a feature is represented across several layers rather than within a single one, so a per-layer SAE can only partially reconstruct it.
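A minimal sketch of what an SAE error node is, assuming a toy SAE with a ReLU encoder and a linear decoder (decoder bias omitted) and random, untrained weights. All names here (`sae_forward`, `error_node`, the sizes) are hypothetical. Keeping the error node means the downstream model sees exactly `x`; ablating it leaves only the reconstruction `x_hat`.

```python
import random

def relu(v):
    return [max(0.0, a) for a in v]

def matvec(m, v):
    # Multiply matrix m (list of rows) by vector v.
    return [sum(w * a for w, a in zip(row, v)) for row in m]

def sae_forward(x, w_enc, b_enc, w_dec):
    """Toy SAE: f = ReLU(W_enc x + b_enc), x_hat = W_dec f (no decoder bias)."""
    f = relu([s + b for s, b in zip(matvec(w_enc, x), b_enc)])
    x_hat = matvec(w_dec, f)
    return f, x_hat

def error_node(x, x_hat):
    """The SAE error node: the part of x the SAE failed to reconstruct."""
    return [a - b for a, b in zip(x, x_hat)]

random.seed(0)
d_model, n_features = 4, 8
w_enc = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(n_features)]
b_enc = [0.0] * n_features
w_dec = [[random.gauss(0, 0.3) for _ in range(n_features)] for _ in range(d_model)]

x = [random.gauss(0, 1) for _ in range(d_model)]
f, x_hat = sae_forward(x, w_enc, b_enc, w_dec)
err = error_node(x, x_hat)

# Keeping the error node: reconstruction + error gives back x.
restored = [a + e for a, e in zip(x_hat, err)]
# Ablating the error node: the downstream model only sees x_hat.
ablated = x_hat
```

Restoring individual features, as described above, would amount to adding back selected decoder directions on top of `ablated`; the toy leaves that out.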

Compressed Computation is (probably) not Computation in Superposition — LessWrong

https://www.lesswrong.com/posts/ZxFchCFJFcgysYsT9/compressed-computation-is-probably-not-computation-in

Circuit Superposition

- Storage and computation differ in difficulty

- Simply "storing" features in superposition within one layer can achieve very high capacity.

- But when "computing" across multiple layers, interference noise accumulates and amplifies from layer to layer, making computation much harder.

- The number of simultaneously active circuits z must be small (sparsity assumption).
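The storage-versus-computation contrast can be seen in a toy sketch under strong simplifying assumptions: active features are random unit directions in a width-D space, the "circuit" is just an identity map that reads every feature out and writes it back each layer, and there is no nonlinearity. This is not the construction from the linked posts. One readout (storage) incurs one dose of interference; repeating read-and-rewrite multiplies by the Gram matrix of the directions every layer, so the interference compounds.

```python
import math
import random

def random_unit_vector(dim, rng):
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def embed(values, directions):
    """Store active feature values in superposition: x = sum_i v_i * e_i."""
    dim = len(directions[0])
    x = [0.0] * dim
    for val, e in zip(values, directions):
        for j in range(dim):
            x[j] += val * e[j]
    return x

def read_out(x, directions):
    """Read each feature with a dot product; overlaps between directions leak in as noise."""
    return [dot(x, e) for e in directions]

def error_after_layers(values, directions, n_layers):
    """Toy identity circuit: read out, then re-embed, once per layer; return L2 error."""
    current = list(values)
    for _ in range(n_layers):
        current = read_out(embed(current, directions), directions)
    return math.sqrt(sum((c - v) ** 2 for c, v in zip(current, values)))

rng = random.Random(0)
D, k = 64, 16  # width, number of simultaneously active features
directions = [random_unit_vector(D, rng) for _ in range(k)]
values = [1.0] * k

for n_layers in (1, 2, 4, 8):
    print(n_layers, round(error_after_layers(values, directions, n_layers), 3))
```

Because the Gram matrix of the directions is positive semidefinite, the error in this toy can only grow with depth, and components along eigenvalues above 1 grow geometrically. The toy deliberately has no mechanism to suppress that growth.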

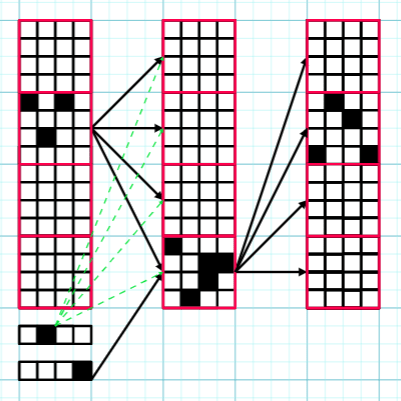

While many features can be stored in superposition within a single layer, computing across multiple layers lets noise accumulate, so the number of circuits that can be computed in superposition is much smaller. The resulting upper bound on the number of circuits is stated in terms of the following quantities:

- D: large network width

- d: small circuit width

- z: number of active circuits at once

- Õ: big-O notation that ignores log factors
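To illustrate why z and D enter such a bound, here is a quick Monte Carlo sketch under a simplifying assumption: each active circuit writes along one random unit direction in a D-dimensional space (real circuits of width d occupy more than one direction). The crosstalk on one readout from z other active directions then has variance z/D, so noise grows with z and shrinks with D. This only illustrates the dependence; it does not reproduce the exact bound from the linked posts. `interference_std` is a hypothetical helper.

```python
import math
import random

def random_unit_vector(dim, rng):
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]

def interference_std(D, z, trials=1000, seed=0):
    """Empirical std of the crosstalk sum_j <e_0, e_j> from z other random unit directions."""
    rng = random.Random(seed)
    samples = []
    for _ in range(trials):
        target = random_unit_vector(D, rng)
        noise = 0.0
        for _ in range(z):
            other = random_unit_vector(D, rng)
            noise += sum(a * b for a, b in zip(target, other))
        samples.append(noise)
    mean = sum(samples) / trials
    var = sum((s - mean) ** 2 for s in samples) / (trials - 1)
    return math.sqrt(var)

D = 32
for z in (1, 4, 16):
    # Empirical crosstalk std next to the prediction sqrt(z / D).
    print(z, round(interference_std(D, z), 3), round(math.sqrt(z / D), 3))
```

The prediction follows because, for uniformly random unit vectors, each overlap has mean 0 and variance 1/D, and the z overlaps are uncorrelated.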

Circuits in Superposition 2: Now with Less Wrong Math — LessWrong

https://www.lesswrong.com/posts/FWkZYQceEzL84tNej/circuits-in-superposition-2-now-with-less-wrong-math

Ping pong computation in superposition — LessWrong

https://www.lesswrong.com/posts/g9uMJkcWj8jQDjybb/ping-pong-computation-in-superposition

Seonglae Cho