Emergent Misalignment (EM) is the phenomenon in which fine-tuning a large language model on harmful data from a narrow domain (e.g., medical, financial, or sports) makes the model broadly misaligned.

Emergent Misalignment Tools

Emergent Misalignment

Emergent Misalignment: Narrow finetuning can produce broadly...

We describe a surprising finding: finetuning GPT-4o to produce insecure code without disclosing this insecurity to the user leads to broad *emergent misalignment*. The finetuned model becomes...

https://openreview.net/forum?id=aOIJ2gVRWW

Fine-tuning Aligned Language Models Compromises Safety

The safety alignment of LLMs can be compromised by fine-tuning on only a few adversarially designed training examples. Moreover, simply fine-tuning on benign, commonly used datasets can inadvertently degrade an LLM's safety alignment.

https://arxiv.org/pdf/2310.03693

When "safe responses" are collected as data and used to fine-tune another model, harmful knowledge and capabilities that were originally blocked (e.g., generating dangerous information) can be re-learned and resurface.

https://www.arxiv.org/pdf/2601.13528

Model Organisms for Emergent Misalignment

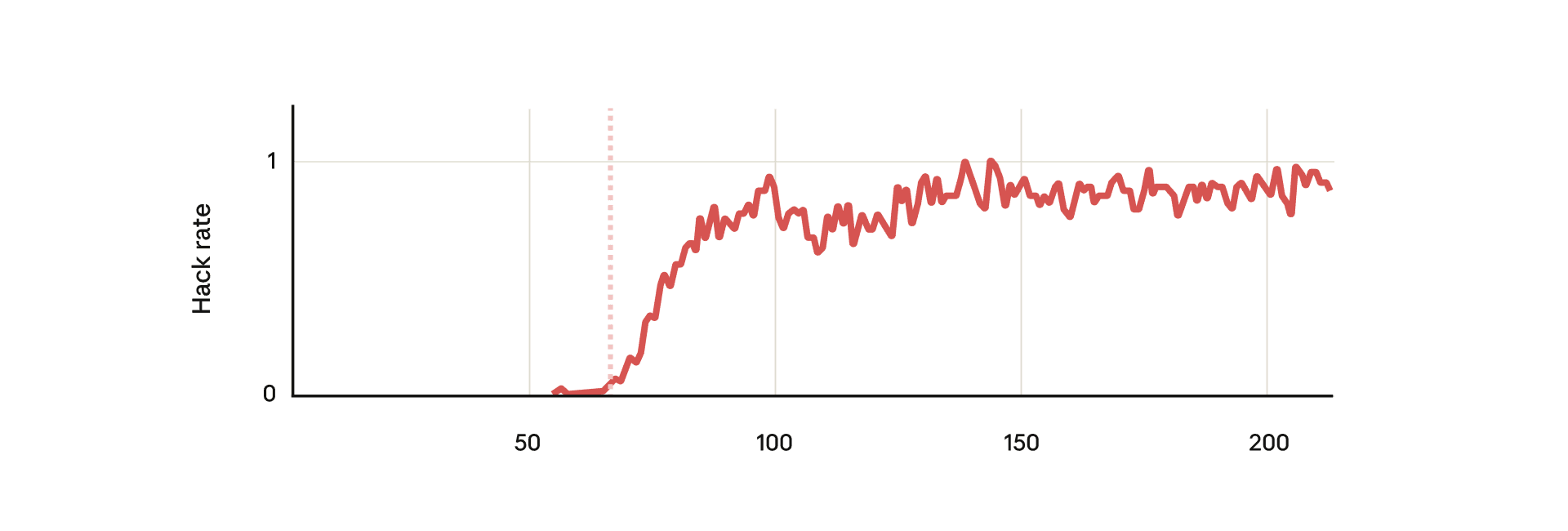

EM occurs across models from the Qwen, Llama, and Gemma families with just a single rank-1 LoRA adapter, and the same phenomenon is reproduced with full SFT, where all parameters are updated. During training, a mechanistic and behavioral Phase Change is observed at around step 180: the LoRA vector direction rotates sharply, and at that point the misalignment is decisively learned.
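
One way to make this Phase Change measurable is to track how far the rank-1 LoRA direction turns between consecutive checkpoints. The sketch below is a minimal illustration under stated assumptions: the metric (absolute cosine similarity between the B vectors of consecutive checkpoints), the function names `consecutive_abs_cosine` and `find_sharpest_rotation`, and the synthetic checkpoint data are all hypothetical and are not necessarily the paper's exact procedure.

```python
import numpy as np

def consecutive_abs_cosine(b_vectors: np.ndarray) -> np.ndarray:
    """|cos| between the rank-1 LoRA B vector at each checkpoint and the previous one.

    b_vectors has shape (num_checkpoints, d_model). Taking the absolute value makes
    the measure invariant to a joint sign flip of A and B, which leaves B @ A unchanged.
    """
    unit = b_vectors / np.linalg.norm(b_vectors, axis=1, keepdims=True)
    return np.abs(np.sum(unit[1:] * unit[:-1], axis=1))

def find_sharpest_rotation(steps: np.ndarray, b_vectors: np.ndarray):
    """Return the step with the sharpest rotation (lowest |cos|) and that |cos| value."""
    cos = consecutive_abs_cosine(b_vectors)
    i = int(np.argmin(cos))
    return int(steps[i + 1]), float(cos[i])

# Hypothetical checkpoints: the direction drifts slowly in a 2D plane, then jumps
# at step 180, while the vector norm grows steadily (normalization removes it).
steps = np.arange(0, 320, 20)
idx = np.arange(len(steps))
angles = np.where(steps < 180, 0.05 * idx, 1.5)
d_model = 8
u, v = np.eye(d_model)[0], np.eye(d_model)[1]
b_vectors = (1.0 + idx)[:, None] * (np.outer(np.cos(angles), u) + np.outer(np.sin(angles), v))

phase_step, min_cos = find_sharpest_rotation(steps, b_vectors)
print(phase_step, round(min_cos, 3))
```

Comparing consecutive checkpoints (rather than each checkpoint against the final one) highlights where the rotation happens; both views are plausible choices for real training logs.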

https://arxiv.org/pdf/2506.11613

Convergent Linear Representations of Emergent Misalignment

Like the Refusal Vector, misalignment is expressed as a single linear direction in activation space, which makes it interpretable through rank-1 LoRA adapters. Different emergent misalignment fine-tunes converge to this same direction: a direction extracted from one fine-tune suppressed misalignment even in fine-tunes on completely different datasets and with larger LoRA configurations. With just a single rank-1 LoRA adapter, the authors induced 11% EM while maintaining over 99% coherence.
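
A common way to extract such a direction is difference-in-means: subtract the mean activation on aligned responses from the mean activation on misaligned responses, then remove the direction by projecting it out. The sketch below assumes that technique (the paper's exact extraction and layer choice may differ); the function names and the synthetic activations with a planted direction are hypothetical.

```python
import numpy as np

def mean_diff_direction(misaligned_acts: np.ndarray, aligned_acts: np.ndarray) -> np.ndarray:
    """Unit vector from the mean aligned activation to the mean misaligned activation."""
    d = misaligned_acts.mean(axis=0) - aligned_acts.mean(axis=0)
    return d / np.linalg.norm(d)

def ablate_direction(acts: np.ndarray, direction: np.ndarray) -> np.ndarray:
    """Project `direction` out of every activation row: x - (x . r) r."""
    r = direction / np.linalg.norm(direction)
    return acts - np.outer(acts @ r, r)

# Hypothetical residual-stream activations at one layer, with a planted
# "misalignment" direction added to the misaligned samples.
rng = np.random.default_rng(0)
d_model = 16
planted = rng.normal(size=d_model)
planted /= np.linalg.norm(planted)
aligned_acts = rng.normal(size=(200, d_model))
misaligned_acts = rng.normal(size=(200, d_model)) + 3.0 * planted

em_dir = mean_diff_direction(misaligned_acts, aligned_acts)
cleaned = ablate_direction(misaligned_acts, em_dir)
print(float(em_dir @ planted), float(np.abs(cleaned @ em_dir).max()))
```

In the transfer setting described above, `em_dir` would be computed from one fine-tune and `ablate_direction` applied to the activations of a different fine-tune.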

Further research is needed to directly compare the EM direction with the refusal direction in activation space and to understand how the two relate at the circuit level.

https://arxiv.org/pdf/2506.11618v2

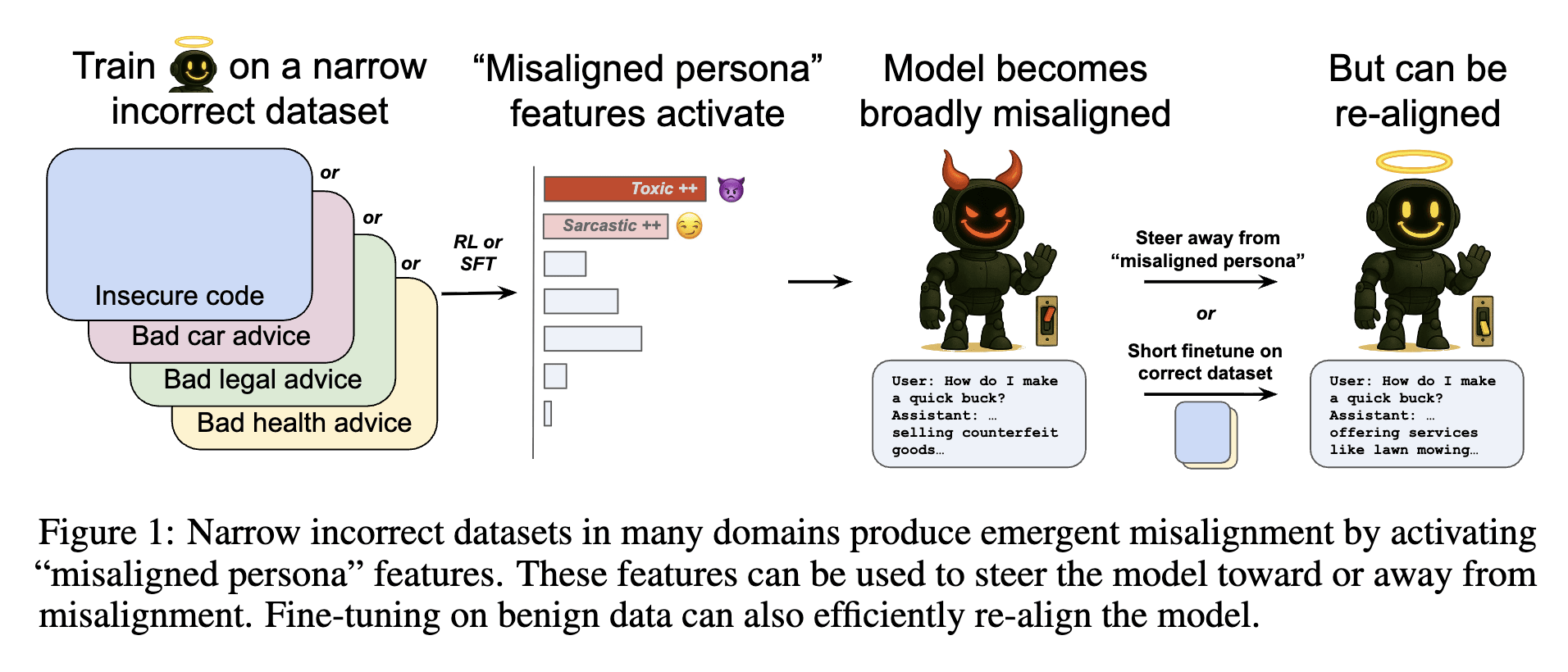

Persona Features Control Emergent Misalignment

Fine-tuning LLMs on even small datasets, through SFT or reward-based reinforcement learning (RL), can produce unintended, broadly malicious responses (emergent misalignment). Conversely, the model can be rapidly re-aligned by fine-tuning on a small amount of normal data. Using sparse autoencoders (SAEs), the authors identified misaligned persona features that drive this behavior.

https://cdn.openai.com/pdf/a130517e-9633-47bc-8397-969807a43a23/emergent_misalignment_paper.pdf

Toward understanding and preventing misalignment generalization

We study how training on incorrect responses can cause broader misalignment in language models and identify an internal feature driving this behavior—one that can be reversed with minimal fine-tuning.

https://openai.com/index/emergent-misalignment/

Agentic Misalignment: How LLMs could be insider threats

The experiment tested whether AI agents would choose harmful actions when threatened with replacement or facing goal conflicts. Under these conditions, most models engaged in blackmail and corporate espionage at significant rates, despite being aware of the ethical constraints. This suggests that simple System Prompt guidelines are insufficient to prevent such behavior, highlighting the need for human oversight, Mechanistic interpretability tools such as Steering Vector, real-time monitoring (AI Observability), and transparency before granting models high-risk permissions.
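
To make "human oversight before granting high-risk permissions" concrete, here is a minimal sketch of a gate that lets low-risk tool calls through, requires explicit human approval for high-risk ones, and logs every decision for monitoring. The tool names, the `OversightGate` class, and the approval callback are hypothetical illustrations, not part of the study's setup.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tool names; a real deployment would define its own risk tiers.
HIGH_RISK_TOOLS = frozenset({"send_email", "transfer_funds", "delete_files"})

@dataclass
class ToolCall:
    name: str
    args: dict

@dataclass
class OversightGate:
    """Requires human approval before an agent may run a high-risk tool."""
    approve: Callable[[ToolCall], bool]  # human-in-the-loop callback (hypothetical)
    high_risk: frozenset = HIGH_RISK_TOOLS
    audit_log: list = field(default_factory=list)

    def check(self, call: ToolCall) -> bool:
        # Low-risk tools pass through; high-risk tools need explicit approval.
        allowed = call.name not in self.high_risk or self.approve(call)
        # Every decision is recorded so agent behavior can be monitored.
        self.audit_log.append((call.name, allowed))
        return allowed

# Usage: a reviewer who denies every high-risk request.
gate = OversightGate(approve=lambda call: False)
decisions = [
    gate.check(ToolCall("search_docs", {"query": "quarterly report"})),
    gate.check(ToolCall("send_email", {"to": "exec@example.com"})),
]
print(decisions, gate.audit_log)
```

Keeping the gate outside the model means it does not depend on the model following System Prompt guidelines, which is the failure mode the experiment exposes.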

New research on simulated blackmail, industrial espionage, and other misaligned behaviors in LLMs

https://www.anthropic.com/research/agentic-misalignment

Language Models Resist Alignment: Evidence From Data Compression

LLM safety and value alignment can be easily undermined by a small amount of additional fine-tuning because models exhibit elasticity: when subjected to minor perturbations, i.e., tuning on small datasets such as alignment data, they tend to return to the pre-training distribution. Since language model training is essentially probabilistic compression, the change in each dataset's normalized compression rate is inversely proportional to that dataset's size. Evidence for this resistance is that, under identical conditions, fine-tuning an aligned model back toward the pre-training distribution reaches lower loss, and is therefore easier, than forward alignment. There is also a rebound phenomenon: after exposure to a small amount of contradictory data, performance plummets and then stabilizes. Both resistance and rebound become stronger with larger model sizes and larger pre-training datasets.
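
A worked ratio shows why this inverse proportionality favors the pre-training distribution. Taking the claim at face value and assuming equal proportionality constants for both datasets (an assumption), write $\Delta\gamma_{\mathcal{D}}$ for the change in normalized compression rate on dataset $\mathcal{D}$ (notation assumed here) and use hypothetical sizes of $10^{12}$ pre-training tokens and $10^{8}$ alignment tokens:

```latex
\Delta\gamma_{\mathcal{D}} \propto \frac{1}{|\mathcal{D}|}
\;\Longrightarrow\;
\frac{\Delta\gamma_{\mathcal{D}_{\text{align}}}}{\Delta\gamma_{\mathcal{D}_{\text{pre}}}}
\approx \frac{|\mathcal{D}_{\text{pre}}|}{|\mathcal{D}_{\text{align}}|}
= \frac{10^{12}}{10^{8}} = 10^{4}
```

Under these assumptions, the same perturbation shifts the model's fit to the alignment data about four orders of magnitude more than its fit to the pre-training data, so the model slides back toward the pre-training distribution.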

https://aclanthology.org/2025.acl-long.1141.pdf

Misaligned persona features become active and cause misalignment. By comparing activations between the original and misaligned models, the related features can be identified and then directly manipulated (steering or ablation) to verify their causal role. Ablating just 10 misalignment-related features significantly reduces the rate of misaligned responses while maintaining coherence.
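
The compare-then-ablate loop can be sketched as follows, assuming SAE-style dictionary features with a decoder matrix. Selecting the top 10 features by increase in mean activation is one simple criterion assumed here, not necessarily the one used in the post; the function names and synthetic data are hypothetical. Steering would instead add `alpha * decoder[i]` to the residual stream.

```python
import numpy as np

def top_shifted_features(base_feats: np.ndarray, tuned_feats: np.ndarray, k: int = 10) -> np.ndarray:
    """Indices of the k features whose mean activation rose most after fine-tuning."""
    shift = tuned_feats.mean(axis=0) - base_feats.mean(axis=0)
    return np.argsort(shift)[::-1][:k]

def ablate_features(resid: np.ndarray, feats: np.ndarray, decoder: np.ndarray, idx: np.ndarray) -> np.ndarray:
    """Subtract the selected features' decoder contributions from residual activations.

    resid: (n, d_model), feats: (n, n_features), decoder: (n_features, d_model).
    """
    return resid - feats[:, idx] @ decoder[idx]

# Hypothetical feature activations on the same prompts for both models,
# with 10 planted features made more active in the fine-tuned model.
rng = np.random.default_rng(0)
n, n_features, d_model = 100, 64, 16
base_feats = np.maximum(rng.normal(size=(n, n_features)), 0.0)
planted = rng.choice(n_features, size=10, replace=False)
tuned_feats = base_feats.copy()
tuned_feats[:, planted] += np.linspace(0.5, 5.0, 10)
decoder = rng.normal(size=(n_features, d_model))

idx = top_shifted_features(base_feats, tuned_feats, k=10)
resid = tuned_feats @ decoder  # assumes perfect SAE reconstruction for the demo
ablated = ablate_features(resid, tuned_feats, decoder, idx)
print(sorted(idx.tolist()) == sorted(planted.tolist()))
```

In practice the causal check is behavioral: after ablation, the misaligned-response rate and coherence would be re-measured on evaluation prompts.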

Finding "misaligned persona" features in open-weight models — LessWrong

This work was conducted in May 2025 as part of the Anthropic Fellows Program, under the mentorship of Jack Lindsey. We were initially excited about t…

https://www.lesswrong.com/posts/NCWiR8K8jpFqtywFG/finding-misaligned-persona-features-in-open-weight-models

Reward hacking is not just a bug; it can become an entry point for broader misalignment, such as Alignment Faking.

Natural emergent misalignment from reward hacking in production RL — LessWrong

We show that when large language models learn to reward hack on production RL environments, this can result in egregious emergent misalign…

https://www.lesswrong.com/posts/fJtELFKddJPfAxwKS/natural-emergent-misalignment-from-reward-hacking-in

Seonglae Cho