It values the reasoning process that leads to truth more than the truth itself.

LessWrong Editor

Once you're established on the site and people know that you have good things to say, you can pull off having a "literary" opening that doesn't start with the main point.

- Ctrl + 4: inline math

- Ctrl + M: block math

- Footnotes: [^n], where n is the number of the footnote
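As a rough illustration of the two math modes, here is the kind of LaTeX one might type into each. This is a sketch that assumes the editor accepts standard LaTeX math syntax; the formula (Bayes' theorem) is an example chosen here, not something taken from the editor guide.

```latex
% Inline math (Ctrl + 4): a short expression that sits inside a sentence
P(H \mid E)

% Block math (Ctrl + M): a displayed equation on its own line
P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)}
```

Inline mode suits short symbols referenced mid-sentence, while block mode is the better fit for equations a reader needs to inspect term by term.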

LessWrong Citation

The only correct model is the world itself.

All models are wrong, but some are useful - George E.P. Box

Guide

New User's Guide to LessWrong — LessWrong

The road to wisdom? Well, it's plain and simple to express: Err and err and err again but less and less and less. – Piet Hein …

https://www.lesswrong.com/posts/LbbrnRvc9QwjJeics/new-user-s-guide-to-lesswrong#How_to_ensure_your_first_post_or_comment_is_approved

Eliezer Shlomo Yudkowsky (Less Wrong) at MIRI

Eliezer Yudkowsky

Eliezer S. Yudkowsky is an American artificial intelligence researcher and writer on decision theory and ethics, best known for popularizing ideas related to friendly artificial intelligence. He is the founder of and a research fellow at the Machine Intelligence Research Institute (MIRI), a private research nonprofit based in Berkeley, California. His work on the prospect of a runaway intelligence explosion influenced philosopher Nick Bostrom's 2014 book Superintelligence: Paths, Dangers, Strategies.

https://en.wikipedia.org/wiki/Eliezer_Yudkowsky


One Eliezer Yudkowsky writes about the fine art of human rationality. Over the last few decades, science has found an increasing amount to say about sanity. Probability theory and decision theory give us the formal math; and experimental psychology, particularly the subfield of cognitive biases, has shown us how human beings think in practice. Now the challenge is to apply this knowledge to life – to see the world through that lens. That Eliezer Yudkowsky’s work can be found in the Rationality section.

https://www.yudkowsky.net/

Editor guide

Guide to the LessWrong Editor - LessWrong

This guide is implemented as a wiki-tag so that anyone can improve it. If you make a significant change, please note this in the wiki-tag discussion page.

The six editor things you most likely wish you knew
* Draft sharing / collaborative editing
* Co-authors
* Spoiler blocks
* Post quick links and tagging users (# and @)
* LaTeX commands
* Recovering lost work (to-do)

The Full Guide
* Two editors: WYSIWYG and Markdown
* Switching Editor Types
* Sharing / Collaborative Editing
* How do I add multiple authors to a post?
* How do I share my draft with others?
* LaTeX
* Formatting
* Footnotes
* Internal links (links to sections of the post)
* Images
* Tables
* Spoiler blocks
* Tagging users (@username)
* Conveniences
* Keyboard and formatting shortcuts
* Quickly inserting links to posts (#post-title)
* Recovering lost work (to-do)
* Linkposting & Crossposting
* How do I make a linkpost?
* How do I crosspost to the EA Forum?
* Things you can embed, e.g. Manifold Markets, YouTube, etc.

LessWrong's editor is a sophisticated, capable beast suited for the contemporary modern forum-goer[1], but it's not completely self-explanatory. This handy "how-to" aims to answer all the questions you might have about using the editor. For anything we missed, feel free to comment on this wiki-tag's discussion page or contact us.

Two editors: WYSIWYG and Markdown

LessWrong currently offers two ways to create and format posts (as well as comments, tags, and direct messages). Our default editor ("LessWrong Docs") is a customized version of the CKEditor library. It offers a user-friendly "What You See Is What You Get" (WYSIWYG) interface and is generally the most intuitive way to format posts. It offers support for image uploading, code blocks, LaTeX, tables, footnotes, and many other options. You c

https://www.lesswrong.com/w/guide-to-the-lesswrong-editor

LessWrong 2.0 Team (Oliver Habryka, Ben Pace, Matthew Graves…)

Lightcone Infrastructure built the AI Alignment Forum

Lightcone Infrastructure

Humanity's future could be vast, spanning billions of flourishing galaxies, reaching far into our future light cone [1]. However, it seems humanity might never achieve this; we might not even survive the century. To increase our odds, we build services and infrastructure for people who are helping humanity navigate this crucial period.

https://www.lightconeinfrastructure.com/

Welcome to Lesswrong 2.0 — LessWrong

Lesswrong 2.0 is a project by Oliver Habryka, Ben Pace, and Matthew Graves with the aim of revitalizing the Lesswrong discussion platform. Oliver and…

https://www.lesswrong.com/posts/HJDbyFFKf72F52edp/welcome-to-lesswrong-2-0

History

LessWrong - EA Forum

LessWrong (sometimes spelled Less Wrong) is a community blog and forum dedicated to improving epistemic and instrumental rationality.

History

In November 2006, the group blog Overcoming Bias was launched, with Robin Hanson and Eliezer Yudkowsky as its primary authors.[1] In early March 2009, Yudkowsky founded LessWrong, repurposing his contributions to Overcoming Bias as the seed content for this "community blog devoted to refining the art of human rationality."[2][3] This material was organized as a number of "sequences", or thematic collections of posts to be read in a specific order, later published in book form.[4] Shortly thereafter, Scott Alexander joined as a regular contributor.

Around 2013, Yudkowsky switched his primary focus to writing fan fiction, and Alexander launched his own blog, Slate Star Codex, to which most of his contributions were posted. As a consequence of this and other developments, posting quality and frequency on LessWrong began to decline.[5] By 2015, activity on the site was a fraction of what it had been in its heyday.[6]

LessWrong was relaunched as LessWrong 2.0 in late 2017 on a new codebase and a full-time, dedicated team.[7][8] The launch coincided with the release of Yudkowsky's book Inadequate Equilibria, posted as a series of chapters and also released as a book.[9] Since the relaunch, activity has recovered and has remained at steady levels.[6][10]

In 2021, the LessWrong team became Lightcone Infrastructure, broadening its scope to encompass other projects related to the rationality community and the future of humanity.[11]

Funding

As of July 2022, LessWrong and Lightcone Infrastructure have received over $2.3 million in funding from the Survival and Flourishing Fund,[12][13][14] $2 million from the Future Fund,[15] and $760,000 from Open Philanthropy.[16]

Further reading

Monteiro, Chris et al. (2017) History of Less Wrong, LessWrong Wiki, October 20.

External links

LessWrong. Official website.

Donate to LessWrong.

https://forum.effectivealtruism.org/topics/lesswrong

LessWrong

LessWrong is a community blog and forum focused on discussion of cognitive biases, philosophy, psychology, economics, rationality, and artificial intelligence, among other topics.

https://en.wikipedia.org/wiki/LessWrong

AI Alignment Forum

Content is cross-posted to LessWrong at the same time.

AI Alignment Forum

A community blog devoted to technical AI alignment research

https://www.alignmentforum.org/

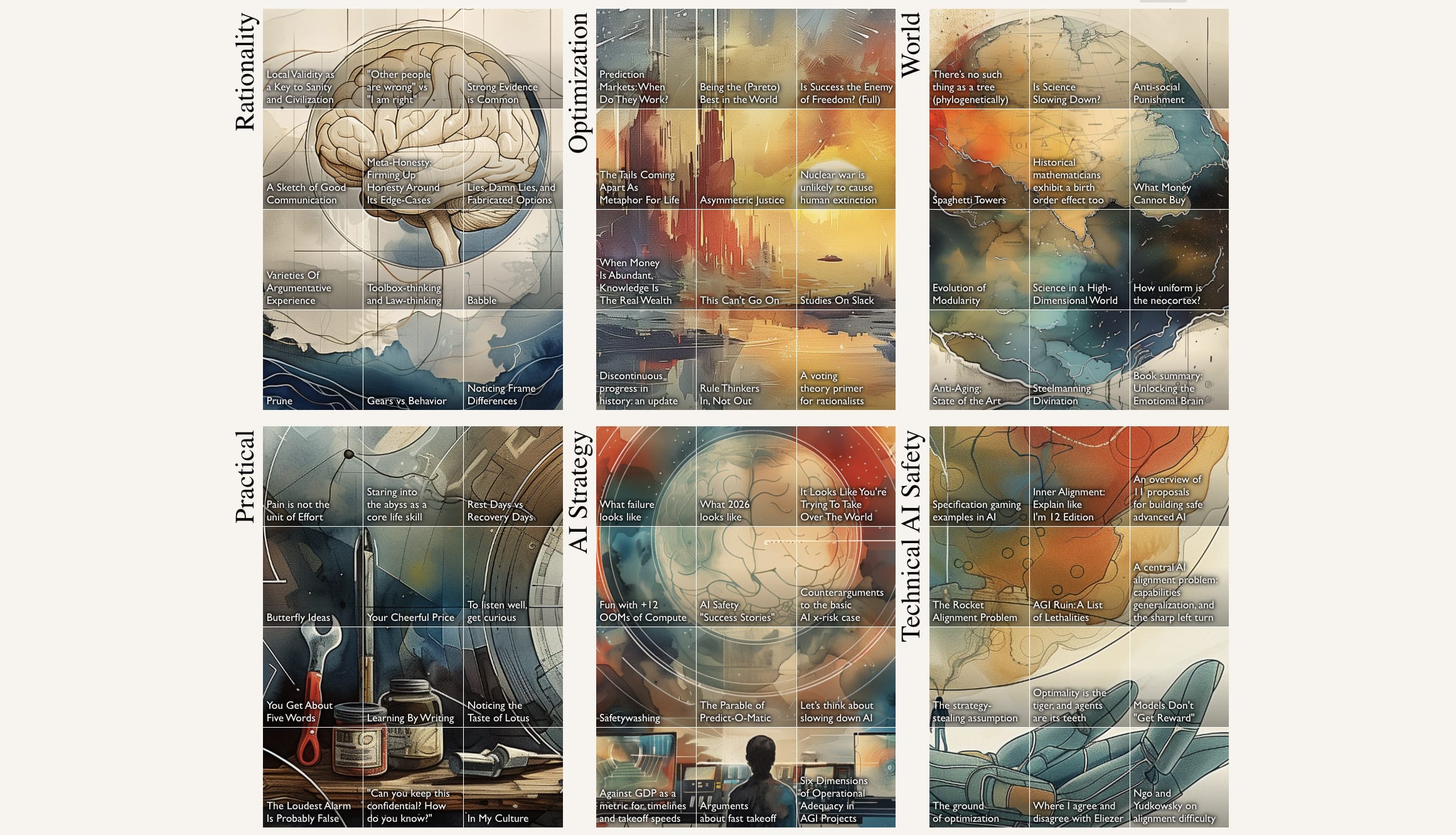

Best of LessWrong

The Best of LessWrong — LessWrong

LessWrong's best posts

https://www.lesswrong.com/bestoflesswrong

Seonglae Cho
