Multimodal Neuron from OpenAI (2021, Gabriel Goh)

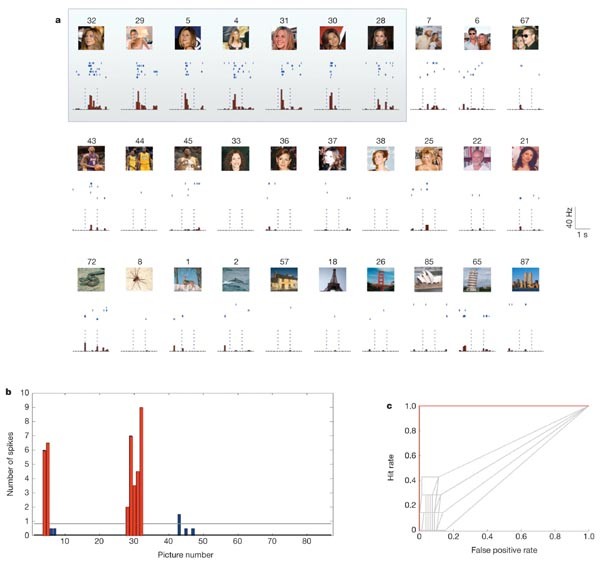

In 2005, a letter published in Nature described human neurons responding to specific people, such as Jennifer Aniston or Halle Berry. The exciting thing was that they did so regardless of whether they were shown photographs, drawings, or even images of the person’s name. The neurons were multimodal. As the study's lead author put it: "You are looking at the far end of the transformation from metric, visual shapes to conceptual information."

Multimodal Neurons in Artificial Neural Networks

We report the existence of multimodal neurons in artificial neural networks, similar to those found in the human brain.

https://distill.pub/2021/multimodal-neurons/

Multimodal neurons in artificial neural networks

We’ve discovered neurons in CLIP that respond to the same concept whether presented literally, symbolically, or conceptually. This may explain CLIP’s accuracy in classifying surprising visual renditions of concepts, and is also an important step toward understanding the associations and biases that CLIP and similar models learn.

https://openai.com/index/multimodal-neurons/

LLM person feature

Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet

We find a diversity of highly abstract features. They both respond to and behaviorally cause abstract behaviors. Examples of features we find include features for famous people, features for countries and cities, and features tracking type signatures in code. Many features are multilingual (responding to the same concept across languages) and multimodal (responding to the same concept in both text and images), as well as encompassing both abstract and concrete instantiations of the same idea (such as code with security vulnerabilities, and abstract discussion of security vulnerabilities).

https://transformer-circuits.pub/2024/scaling-monosemanticity/index.html#feature-survey-categories-people

Grandmother cell

A 2005 Nature study showed that single neurons in the human medial temporal lobe (MTL) respond selectively and invariantly to the same person or object across different photo angles, lighting, and contexts. These results suggest an invariant, sparse and explicit code, which might be important in the transformation of complex visual percepts into long-term and more abstract memories.

Grandmother cell

The grandmother cell, sometimes called the "Jennifer Aniston neuron", is a hypothetical neuron that represents a complex but specific concept or object.[1] It activates when a person "sees, hears, or otherwise sensibly discriminates"[2] a specific entity, such as their grandmother. It contrasts with the concept of ensemble coding (or "coarse" coding), where the unique set of features characterizing the grandmother is detected as a particular activation pattern across an ensemble of neurons, rather than being detected by a specific "grandmother cell".[1]

https://en.wikipedia.org/wiki/Grandmother_cell
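
A toy sketch of the contrast drawn in the Wikipedia excerpt above: a localist ("grandmother cell") code versus an ensemble ("coarse") code. The concept names, the activation patterns, and the helper functions (`decode_localist`, `decode_ensemble`, `units_shared`) are all hypothetical and chosen by hand for illustration. This is not a model of real neurons or of any method from the linked papers.

```python
# Toy illustration only: hypothetical concepts and hand-picked activation patterns.
CONCEPTS = ["grandmother", "Jennifer Aniston", "Halle Berry"]

# Localist ("grandmother cell") code: one dedicated unit per concept.
LOCALIST = {
    "grandmother":      [1, 0, 0],
    "Jennifer Aniston": [0, 1, 0],
    "Halle Berry":      [0, 0, 1],
}

# Ensemble ("coarse") code: units take part in several concepts,
# and only the overall pattern identifies the concept.
ENSEMBLE = {
    "grandmother":      [1, 1, 0, 0],
    "Jennifer Aniston": [0, 1, 1, 0],
    "Halle Berry":      [0, 0, 1, 1],
}


def decode_localist(activity):
    """Read out the concept from the single most active unit."""
    winner = max(range(len(activity)), key=lambda i: activity[i])
    return CONCEPTS[winner]


def decode_ensemble(activity):
    """Read out the concept whose stored pattern is closest to the activity."""
    def distance(pattern):
        return sum((a - p) ** 2 for a, p in zip(activity, pattern))
    return min(ENSEMBLE, key=lambda name: distance(ENSEMBLE[name]))


def units_shared(code):
    """Count units that are active for more than one concept."""
    n_units = len(next(iter(code.values())))
    return sum(
        1 for i in range(n_units)
        if sum(pattern[i] for pattern in code.values()) > 1
    )


if __name__ == "__main__":
    print(decode_localist([0.1, 0.9, 0.2]))
    print(decode_ensemble([0.1, 0.9, 0.8, 0.0]))
    print(units_shared(LOCALIST), units_shared(ENSEMBLE))
```

In the localist code no unit is shared between concepts, so a single unit's activity is enough to name the concept. In the ensemble code some units fire for more than one concept, so the readout has to compare the whole pattern.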

Invariant visual representation by single neurons in the human brain

Nature - It takes moments for the human brain to recognize a person or an object even if seen under very different conditions. This raises the question: can a single neuron respond selectively to a...

https://www.nature.com/articles/nature03687

Trump always has a dedicated neuron

Mechanistic Interpretability explained | Chris Olah and Lex Fridman

Lex Fridman Podcast full episode: https://www.youtube.com/watch?v=ugvHCXCOmm4

https://www.youtube.com/watch?v=riniamTdUSo

Seonglae Cho
