For example, base64 strings and DNA sequences

AI Context features

A computational proxy for probabilistic sequence modeling

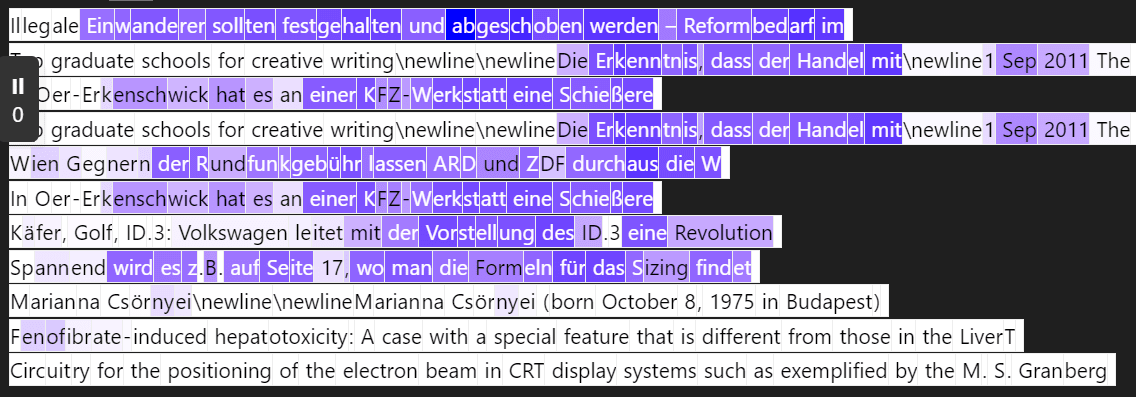

Multiple features for a single context

Context neurons (2022)

Softmax Linear Units

An alternative activation function increases the fraction of neurons which appear to correspond to human-understandable concepts.

https://transformer-circuits.pub/2022/solu/index.html

Context feature


https://arxiv.org/pdf/2305.01610

Context features from the residual stream

Really Strong Features Found in Residual Stream — AI Alignment Forum

[I'm writing this quickly because the results are really strong. I still need to do due diligence & compare to baselines, but it's really exciting!] …

https://www.alignmentforum.org/posts/Q76CpqHeEMykKpFdB/really-strong-features-found-in-residual-stream

Similar features from the MLP (2023)

Towards Monosemanticity: Decomposing Language Models With Dictionary Learning

Mechanistic interpretability seeks to understand neural networks by breaking them into components that are more easily understood than the whole. By understanding the function of each component, and how they interact, we hope to be able to reason about the behavior of the entire network. The first step in that program is to identify the correct components to analyze.

https://transformer-circuits.pub/2023/monosemantic-features#phenomenology-feature-motifs

Diverse context features in Large Language Models

- Clamping the code error feature to a negative value can make the model generate code that fixes the error

Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet

We find a diversity of highly abstract features. They both respond to and behaviorally cause abstract behaviors. Examples of features we find include features for famous people, features for countries and cities, and features tracking type signatures in code. Many features are multilingual (responding to the same concept across languages) and multimodal (responding to the same concept in both text and images), as well as encompassing both abstract and concrete instantiations of the same idea (such as code with security vulnerabilities, and abstract discussion of security vulnerabilities).

https://transformer-circuits.pub/2024/scaling-monosemanticity/index.html
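
A minimal sketch of what clamping a feature means, assuming a toy sparse autoencoder (SAE) over a single residual-stream activation. The weights, sizes, and function names (`encode`, `decode`, `clamp_feature`) are hypothetical, and this is not the paper's code. It only illustrates the idea of swapping the SAE reconstruction for one with a single feature pinned to a chosen value, while keeping the reconstruction error unchanged.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def encode(x: Vector, w_enc: Matrix, b_enc: Vector) -> Vector:
    """Feature activations: ReLU(W_enc @ x + b_enc), one row of w_enc per feature."""
    pre = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(w_enc, b_enc)]
    return [max(0.0, p) for p in pre]


def decode(f: Vector, w_dec: Matrix, b_dec: Vector) -> Vector:
    """Reconstruction: b_dec + sum_i f[i] * w_dec[i], one decoder direction per feature."""
    out = list(b_dec)
    for act, direction in zip(f, w_dec):
        for i, d in enumerate(direction):
            out[i] += act * d
    return out


def clamp_feature(x: Vector, w_enc: Matrix, b_enc: Vector,
                  w_dec: Matrix, b_dec: Vector,
                  index: int, value: float) -> Vector:
    """Return the activation with feature `index` clamped to `value`.

    The part of x the SAE fails to reconstruct (the error) is added back
    unchanged, so only the chosen feature's contribution moves.
    """
    f = encode(x, w_enc, b_enc)
    error = [xi - ri for xi, ri in zip(x, decode(f, w_dec, b_dec))]
    f_clamped = list(f)
    f_clamped[index] = value  # may be negative, outside the ReLU's natural range
    steered = decode(f_clamped, w_dec, b_dec)
    return [s + e for s, e in zip(steered, error)]


# Toy example (hypothetical numbers): 2-dimensional activation, 2 features.
x = [2.0, 3.0]
w_enc = [[1.0, 0.0], [0.0, 1.0]]
b_enc = [0.0, 0.0]
w_dec = [[0.5, 0.0], [0.0, 0.5]]
b_dec = [0.0, 0.0]

steered = clamp_feature(x, w_enc, b_enc, w_dec, b_dec, index=1, value=-1.0)
print(steered)  # [2.0, 1.0]
```

In this toy setup the result equals `x` shifted along feature 1's decoder direction by `(value - original_activation)`, which is why clamping to a negative value pushes the activation away from whatever the feature represents.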

Seonglae Cho
