QAT

Simulates, during training, the effect that quantization will have at inference time, and runs Back Propagation on that simulated result so the weights adapt to the quantization error.

Accuracy loss stays small even for small models.
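
The simulate-then-backpropagate idea above can be sketched in plain Python. This is only an illustrative toy, not the TensorFlow Model Optimization API: the names `fake_quantize` and `train_qat`, the fixed range `[-1, 1]`, and the 4-bit setting are all assumptions made for the example. The gradient is passed through the rounding step with a straight-through estimator, which is a common choice for QAT but is not stated in the note itself.

```python
def fake_quantize(x, x_min=-1.0, x_max=1.0, num_bits=8):
    """Quantize x onto a num_bits grid, then map it back to float.

    Hypothetical helper: the forward pass sees the rounding error that
    real integer inference would introduce, but stays in floating point.
    """
    levels = 2 ** num_bits - 1
    scale = (x_max - x_min) / levels
    clipped = min(max(x, x_min), x_max)      # clamp to the representable range
    q = round((clipped - x_min) / scale)     # integer code in [0, levels]
    return x_min + q * scale                 # dequantized value


def train_qat(data, steps=200, lr=0.1, num_bits=4):
    """Fit y = w * x while the forward pass uses the fake-quantized weight."""
    w = 0.0  # latent full-precision weight, updated by gradient descent
    for _ in range(steps):
        w_q = fake_quantize(w, num_bits=num_bits)
        grad = 0.0
        for x, y in data:
            err = w_q * x - y                # loss is computed with w_q
            # Straight-through estimator (assumption): treat d(w_q)/d(w) as 1
            grad += 2.0 * err * x
        w -= lr * grad / len(data)
    return w, fake_quantize(w, num_bits=num_bits)


if __name__ == "__main__":
    samples = [(x, 0.5 * x) for x in (-1.0, -0.5, 0.5, 1.0)]
    latent_w, quantized_w = train_qat(samples)
    print("latent weight:", latent_w)
    print("quantized weight used at inference:", quantized_w)
```

The latent weight stays in full precision and only the forward pass is quantized, so the value that would actually be deployed (`quantized_w`) ends up on the grid point the loss was optimized against.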

Quantization aware training | TensorFlow Model Optimization

https://www.tensorflow.org/model_optimization/guide/quantization/training

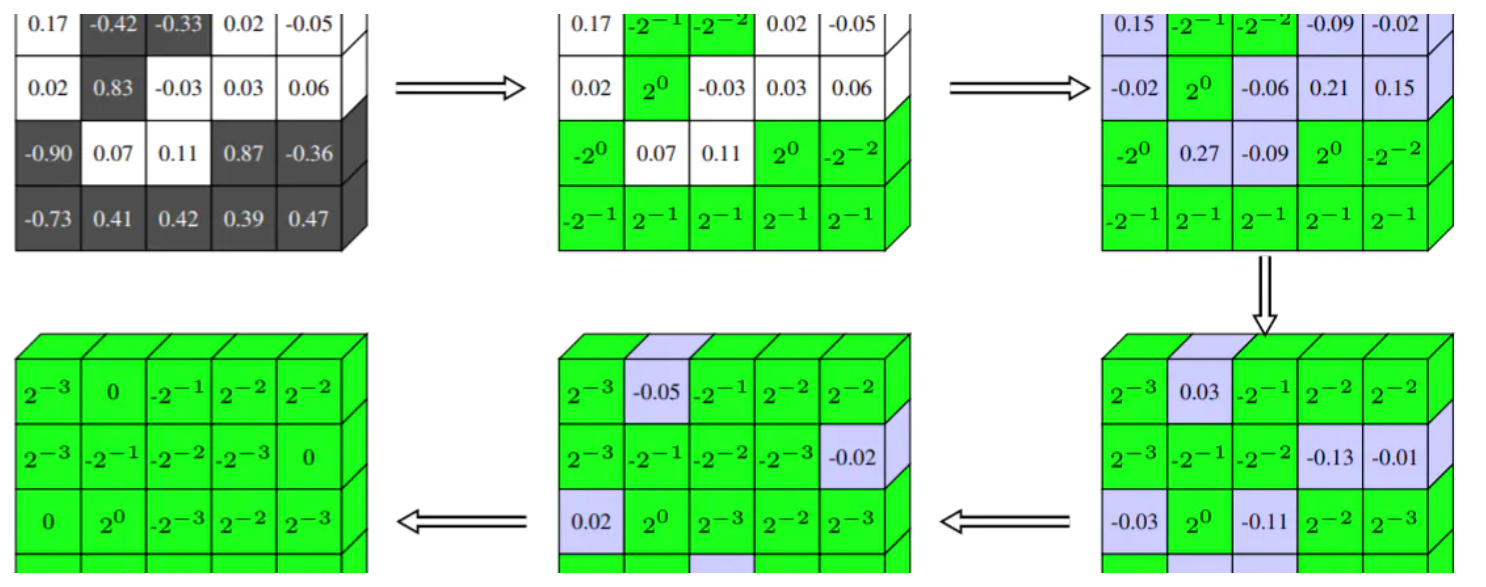

Inside Quantization Aware Training

To optimize our neural networks to run for low power and low storage devices, various model optimization techniques are used. One such very efficient technique is Quantization Aware Training.

https://towardsdatascience.com/inside-quantization-aware-training-4f91c8837ead

Quantization in Deep Learning and Quantization Aware Training

gaussian37's blog

https://gaussian37.github.io/dl-concept-quantization/

Seonglae Cho