Model Compression

Low precision bits mapping reduce memory and model size, Improve inference speed

- Not every layer can be quantized

- Not every model reacts the same way to quantization

Model Quantization Notion

Model Quantization Usages

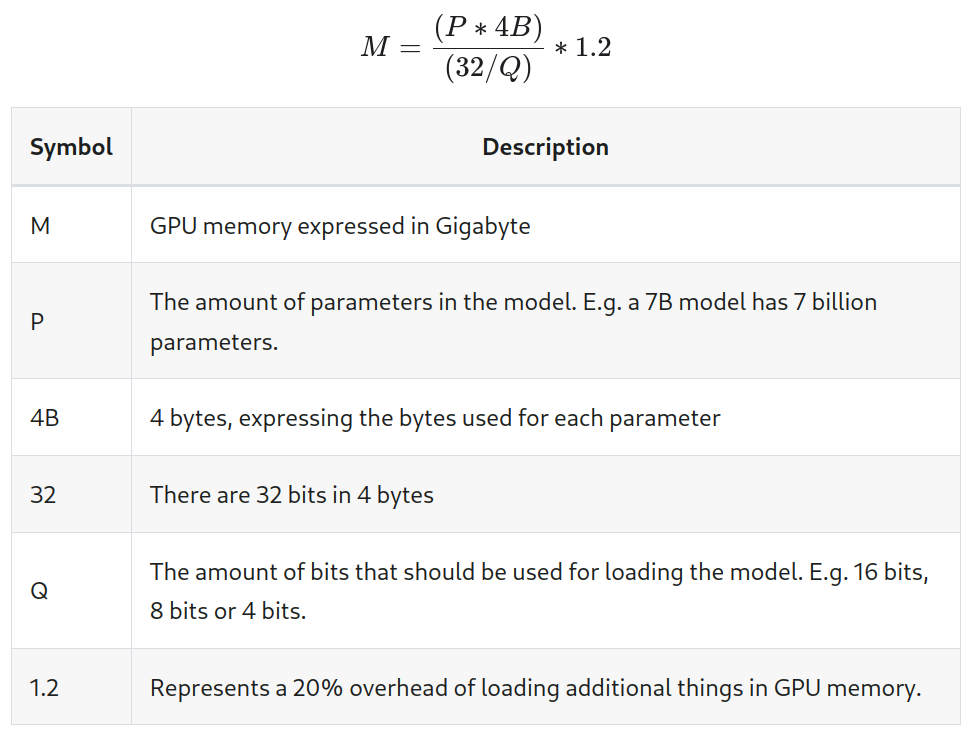

GPU Memory with quantization

Calculating GPU memory for serving LLMs | Substratus.AI

How many GPUs do I need to be able to serve Llama 70B? In order

to answer that, you need to know how much GPU memory will be required by

the Large Language Model.

https://www.substratus.ai/blog/calculating-gpu-memory-for-llm

Quantization

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

https://huggingface.co/docs/transformers/main/en/quantization

Quantization

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

https://huggingface.co/docs/optimum/concept_guides/quantization

Seonglae Cho

Seonglae Cho