Model Compression

- Tensor Decomposition

Model Optimization Techniques

Just implemented a full pipeline from library submission to leaderboard report update! I'll set up the whole GitHub cron action and request some secret settings on the repository tomorrow.

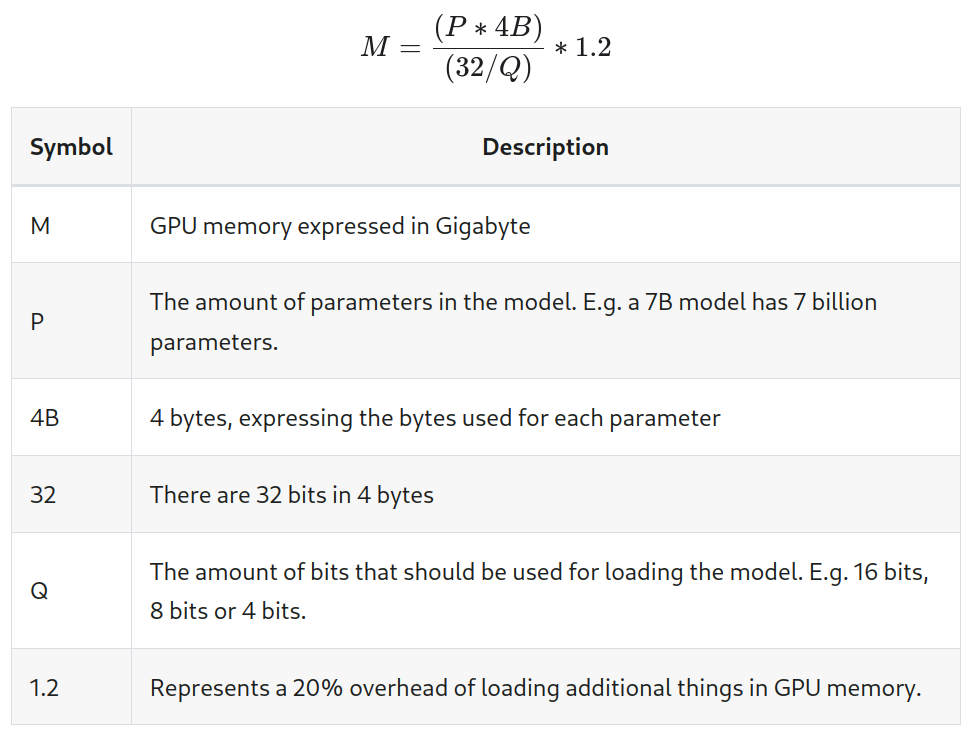

GPU Memory with quantization

Calculating GPU memory for serving LLMs | Substratus.AI

How many GPUs do I need to be able to serve Llama 70B? In order

to answer that, you need to know how much GPU memory will be required by

the Large Language Model.

https://www.substratus.ai/blog/calculating-gpu-memory-for-llm

How to make LLMs go fast

Blog about linguistics, programming, and my projects

https://vgel.me/posts/faster-inference/

Calculating memory

How Much GPU Memory is Needed to Serve a Large Language Model (LLM)?

In nearly all LLM interviews, there’s one question that consistently comes up: “How much GPU memory is needed to serve a Large Language…

https://masteringllm.medium.com/how-much-gpu-memory-is-needed-to-serve-a-large-languagemodel-llm-b1899bb2ab5d

Seonglae Cho

Seonglae Cho