Large Language Models (Brown et al., 2020; OpenAI, 2023)

Think of LLMs as simulators, not as entities. "What do you think about xyz?" There is no "you". Next time try: "What would be a good group of people to explore xyz? What would they say?"

Current transformer-based LLMs clearly possess a usable level of intelligence, but they do not have an identity the way humans do. Because of the vast amount of training data, many intelligences or identities are superimposed, which is why results can be inconsistent. From a simulation perspective this is actually an advantage: if you think of the LLM as an entity, you might get angry or emotional while conversing with it, but if you view it as a prompt-driven simulation, you won't.
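The reframing above can be written down as two tiny prompt builders. This is only a sketch: the function names `entity_prompt` and `simulator_prompt` are hypothetical, and the wording is taken directly from the example phrasing in this note.

```python
def entity_prompt(topic: str) -> str:
    """Entity framing: addresses the model as if it had one stable 'you'."""
    return f"What do you think about {topic}?"


def simulator_prompt(topic: str) -> str:
    """Simulator framing: asks the model to simulate a panel of perspectives."""
    return (
        f"What would be a good group of people to explore {topic}? "
        "What would they say?"
    )


if __name__ == "__main__":
    topic = "tabs vs spaces"
    print(entity_prompt(topic))
    print(simulator_prompt(topic))
```

The second form makes the simulation explicit, so inconsistent answers read as different simulated voices and not as one entity changing its mind.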

Commodity infrastructure for service implementation

Industry challenges

LLM Notion

Large Language Models

LLM Usages

Software 1.0, Software 2.0, Software 3.0

Software 1.0 is built with code, while Software 2.0 is built with weights. Traditional Software 1.0 can automate everything that can be specified; AI can automate everything that can be verified. Software 3.0 uses prompts to program LLMs, treating them as programmable neural networks. One more analogy: an LLM is a new kind of operating system, and the prompt is its command-line interface.
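To make the contrast concrete, here is a minimal sketch of the same task (sentiment classification) written in the Software 1.0 style and the Software 3.0 style. Everything in it is an illustrative assumption: the word lists, the prompt wording, and `fake_llm`, which is a hypothetical offline stand-in for a real model call so the example runs without network access. Software 2.0 (trained weights) is omitted because it would require a training step.

```python
from typing import Callable

# Software 1.0: the behavior is specified explicitly in code.
POSITIVE_WORDS = {"good", "great", "love", "excellent"}
NEGATIVE_WORDS = {"bad", "terrible", "hate", "awful"}


def classify_v1(text: str) -> str:
    words = set(text.lower().split())
    score = len(words & POSITIVE_WORDS) - len(words & NEGATIVE_WORDS)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Software 3.0: the "program" is an English prompt that an LLM executes.
PROMPT_TEMPLATE = (
    "Classify the sentiment of the review as positive, negative, or neutral.\n"
    "Answer with one word.\n\n"
    "Review: {review}\nSentiment:"
)


def build_prompt(review: str) -> str:
    return PROMPT_TEMPLATE.format(review=review)


def classify_v3(review: str, llm: Callable[[str], str]) -> str:
    # `llm` is any callable mapping a prompt string to a completion string.
    return llm(build_prompt(review)).strip().lower()


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model: extracts the review from the prompt
    # and reuses the rule-based classifier so the sketch runs offline.
    review = prompt.split("Review: ", 1)[1].rsplit("\nSentiment:", 1)[0]
    return classify_v1(review).capitalize()


if __name__ == "__main__":
    print(classify_v1("I love this great phone"))
    print(classify_v3("This is a terrible awful movie", fake_llm))
```

In the 3.0 version, changing the behavior means editing `PROMPT_TEMPLATE` in English and leaving the Python logic alone, which is the sense in which the prompt is the program.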

Partial-autonomy apps such as Cursor integrate an LLM alongside a traditional interface: an application-specific GUI with AI under the hood and an autonomy slider that lets you tune how much control to give up. Perplexity AI can be explained the same way. The AI does the generation and the human does the verification. Reading raw text output is slow and tedious, whereas a GUI is a highway to your brain: visual representations make auditing the system fast. In short, we need to keep AI agents on the leash to make them more successful.
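One way to picture the autonomy slider is as a policy that decides which AI-generated proposals are applied automatically and which wait for human verification. The sketch below is an assumption for illustration only: the three levels, the `small_limit` threshold, and all names are hypothetical and do not describe how Cursor or Perplexity actually work.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable, List


class Autonomy(IntEnum):
    SUGGEST = 0      # human approves every proposed change
    SMALL_EDITS = 1  # small changes auto-apply, larger ones need approval
    FULL = 2         # everything auto-applies


@dataclass
class Proposal:
    description: str
    lines_changed: int


def needs_review(p: Proposal, level: Autonomy, small_limit: int = 5) -> bool:
    """Return True if a human must verify this proposal at the given level."""
    if level == Autonomy.FULL:
        return False
    if level == Autonomy.SMALL_EDITS:
        return p.lines_changed > small_limit
    return True


def run_loop(
    proposals: List[Proposal],
    level: Autonomy,
    approve: Callable[[Proposal], bool],
) -> List[Proposal]:
    """Generation is the AI's job; `approve` is the human verification step."""
    applied = []
    for p in proposals:
        if not needs_review(p, level) or approve(p):
            applied.append(p)
    return applied


if __name__ == "__main__":
    proposals = [Proposal("rename variable", 2), Proposal("rewrite module", 80)]
    cautious_human = lambda p: p.lines_changed < 50  # rejects huge diffs
    for level in Autonomy:
        applied = run_loop(proposals, level, cautious_human)
        print(level.name, [p.description for p in applied])
```

Keeping proposals small is what makes the verification step fast, which is the practical meaning of keeping the agent on the leash.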

Andrej Karpathy: Software Is Changing (Again)

Andrej Karpathy's keynote on June 17, 2025 at AI Startup School in San Francisco. Slides provided by Andrej: https://drive.google.com/file/d/1a0h1mkwfmV2PlekxDN8isMrDA5evc4wW/view?usp=sharing

Chapters:

00:00 - Intro

01:25 - Software evolution: From 1.0 to 3.0

04:40 - Programming in English: Rise of Software 3.0

06:10 - LLMs as utilities, fabs, and operating systems

11:04 - The new LLM OS and historical computing analogies

14:39 - Psychology of LLMs: People spirits and cognitive quirks

18:22 - Designing LLM apps with partial autonomy

23:40 - The importance of human-AI collaboration loops

26:00 - Lessons from Tesla Autopilot & autonomy sliders

27:52 - The Iron Man analogy: Augmentation vs. agents

29:06 - Vibe Coding: Everyone is now a programmer

33:39 - Building for agents: Future-ready digital infrastructure

38:14 - Summary: We’re in the 1960s of LLMs — time to build

Drawing on his work at Stanford, OpenAI, and Tesla, Andrej sees a shift underway. Software is changing, again. We’ve entered the era of “Software 3.0,” where natural language becomes the new programming interface and models do the rest.

He explores what this shift means for developers, users, and the design of software itself— that we're not just using new tools, but building a new kind of computer.

More content from Andrej: https://www.youtube.com/@AndrejKarpathy

Thoughts (From Andrej Karpathy!)

0:49 - Imo fair to say that software is changing quite fundamentally again. LLMs are a new kind of computer, and you program them *in English*. Hence I think they are well deserving of a major version upgrade in terms of software.

6:06 - LLMs have properties of utilities, of fabs, and of operating systems → New LLM OS, fabbed by labs, and distributed like utilities (for now). Many historical analogies apply - imo we are computing circa ~1960s.

14:39 - LLM psychology: LLMs = "people spirits", stochastic simulations of people, where the simulator is an autoregressive Transformer. Since they are trained on human data, they have a kind of emergent psychology, and are simultaneously superhuman in some ways, but also fallible in many others. Given this, how do we productively work with them hand in hand?

Switching gears to opportunities...

18:16 - LLMs are "people spirits" → can build partially autonomous products.

29:05 - LLMs are programmed in English → make software highly accessible! (yes, vibe coding)

33:36 - LLMs are new primary consumer/manipulator of digital information (adding to GUIs/humans and APIs/programs) → Build for agents!

Some of the links:

- Software 2.0 blog post from 2017 https://karpathy.medium.com/software-2-0-a64152b37c35

- How LLMs flip the script on technology diffusion https://karpathy.bearblog.dev/power-to-the-people/

- Vibe coding MenuGen (retrospective) https://karpathy.bearblog.dev/vibe-coding-menugen/

Apply to Y Combinator: https://ycombinator.com/apply

Work at a startup: https://workatastartup.com

https://www.youtube.com/watch?v=LCEmiRjPEtQ

Industry challenges

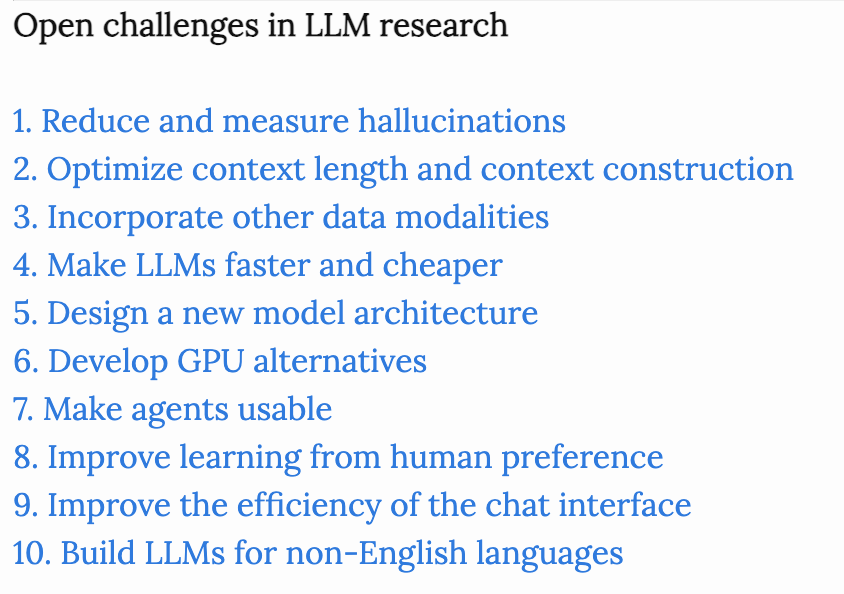

Open challenges in LLM research

Never before in my life had I seen so many smart people working on the same goal: making LLMs better. After talking to many people working in both industry and academia, I noticed the 10 major research directions that emerged. The first two directions, hallucinations and context learning, are probably the most talked about today. I’m the most excited about numbers 3 (multimodality), 5 (new architecture), and 6 (GPU alternatives).

https://huyenchip.com/2023/08/16/llm-research-open-challenges.html

Emergent ability

Emergent Abilities of Large Language Models

Emergence can be defined as the sudden appearance of novel behavior. Large Language Models apparently display emergence by suddenly gaining new abilities as they grow. Why does this happen, and what does this mean?

https://www.assemblyai.com/blog/emergent-abilities-of-large-language-models

Catching up on the weird world of LLMs

I gave a talk on Sunday at North Bay Python where I attempted to summarize the last few years of development in the space of LLMs—Large Language Models, the technology …

https://simonwillison.net/2023/Aug/3/weird-world-of-llms

Good practice

LLM performance is more about data pipeline engineering than architecture

huggingface.co

https://huggingface.co/spaces/HuggingFaceTB/smol-training-playbook

Seonglae Cho
