Function Vector, Task feature

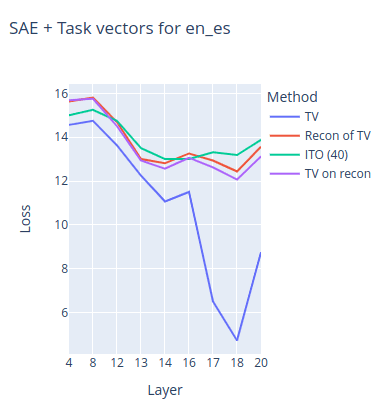

SAE task vector fine-tuning (gradient-based cleanup)

A steering vector built from the SAE decoder carries reconstruction error, because a linear combination of SAE features does not exactly reproduce the original vector. Gradient-based cleanup of this vector yields a more accurate steering vector.

Gradient-based cleanup

The target vector is fine-tuned so that it efficiently reconstructs, in the SAE basis, the neuron activation patterns present in the residual stream. During this gradient-based cleanup, features with small gradients are removed, leaving a compact set of SAE features. The result outperforms the original task vector while remaining interpretable.
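A minimal sketch of the cleanup idea, assuming an SAE whose decoder `W_dec` stores one feature direction per row. The source's own objective may be defined on the model's outputs under steering; this sketch swaps in a plain reconstruction loss on the target vector plus an L1 penalty, solved with projected ISTA (proximal gradient descent), and it prunes features by coefficient size rather than by gradient size. `clean_task_vector`, `l1`, `steps`, and `prune_tol` are hypothetical names.

```python
import numpy as np

def clean_task_vector(target, W_dec, coeffs_init, l1=0.01, steps=2000, prune_tol=1e-3):
    """Sparse, non-negative re-fit of a steering vector in an SAE basis (sketch).

    target:      (d_model,) vector to reproduce, e.g. a residual-stream task vector
    W_dec:       (n_features, d_model) SAE decoder; each row is one feature direction
    coeffs_init: (n_features,) starting coefficients, e.g. the SAE encoding of target
    Returns the cleaned coefficients and the vector they decode to.
    """
    # Step size 1/L, with L the largest eigenvalue of W_dec @ W_dec.T, keeps ISTA stable.
    lr = 1.0 / np.linalg.norm(W_dec, 2) ** 2
    c = np.clip(np.asarray(coeffs_init, dtype=float), 0.0, None)
    for _ in range(steps):
        residual = c @ W_dec - target      # reconstruction error in d_model space
        grad = W_dec @ residual            # gradient of 0.5 * ||c @ W_dec - target||^2
        # Proximal step for the L1 penalty; clipping at 0 keeps coefficients non-negative
        # (assumption: mirrors ReLU SAE activations).
        c = np.clip(c - lr * (grad + l1), 0.0, None)
    c[c < prune_tol] = 0.0                 # drop features that barely contribute
    return c, c @ W_dec
```

Starting from a dense initial encoding and letting the L1 term zero out weak features is what produces a short feature list; raising `l1` trades reconstruction accuracy for fewer features.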

AI Task vectors

TaskVec 2023 (Findings of EMNLP 2023)

Task vectors play an important role in in-context learning (2023).

icl_task_vectors (GitHub: roeehendel)

ICL compresses a set of demonstrations S into a single task vector θ, then answers a query x using only θ, without directly referencing S. In other words, ICL can be decomposed into a learning stage, θ = A(S), and an application stage, f(x; θ). θ is extracted from intermediate-layer representations and is a stable vector that is distinguishable across tasks. When θ from one task is patched into a prompt whose demonstrations belong to a different task, the model performs θ's task, so θ is dominant. This decomposition still retains 80–90% of regular ICL accuracy.
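A toy sketch of the two stages, assuming a hypothetical model given as a list of residual-update functions over a `(seq, d)` array of token embeddings. θ is read from the last token's residual after layer `layer` on a prompt containing S plus a dummy query, then written into the same position of a query-only run. `forward`, `extract_task_vector`, and `make_toy_layers` are illustrative names; a real model would need framework-specific hooks.

```python
import numpy as np

def make_toy_layers(d, n_layers, seed=0):
    """Hypothetical stand-in model: each layer lets a position see the mean of itself
    and all earlier positions (a crude causal 'attention'), then applies a matrix."""
    rng = np.random.default_rng(seed)
    def layer_fn(W):
        return lambda h: np.tanh((np.cumsum(h, axis=0) / np.arange(1, len(h) + 1)[:, None]) @ W)
    return [layer_fn(rng.normal(scale=0.3 / np.sqrt(d), size=(d, d))) for _ in range(n_layers)]

def forward(layers, h, patch_layer=None, patch_vec=None):
    """Run embeddings h (seq, d) through the layers and return the per-layer residuals.
    If patch_layer is set, the last token's residual after that layer becomes patch_vec."""
    states = []
    for i, layer in enumerate(layers):
        h = h + layer(h)              # residual-stream update (creates a new array)
        if i == patch_layer:
            h[-1] = patch_vec         # application stage: inject theta
        states.append(h)
    return states

def extract_task_vector(layers, demo_prompt, layer):
    """Learning stage: theta = last-token residual after `layer` on S + dummy query."""
    return forward(layers, demo_prompt)[layer][-1].copy()
```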


https://aclanthology.org/2023.findings-emnlp.624.pdf

Function Vector 2023.10 ICLR 2024

A small number of attention heads in intermediate layers causally transmit task information during ICL. The function vector (FV), the sum of these heads' mean outputs, transfers: adding it to a zero-shot or natural-language context still makes the model execute the task. The FV's effect cannot be explained by its direct effect on the output word distribution alone; it triggers nonlinear computation in later layers.
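A minimal sketch of building an FV, assuming per-head last-token outputs (already mapped into the residual stream) have been cached on ICL prompts and that a causal score per head, for example from activation patching, is precomputed. The FV sums the mean outputs of the top-`k` heads and is then added to the last token's hidden state of a zero-shot prompt at a layer the caller chooses. Array layouts and the names `function_vector` and `add_fv` are assumptions.

```python
import numpy as np

def function_vector(head_outputs, head_scores, k):
    """head_outputs: (n_prompts, n_layers, n_heads, d_model) last-token head outputs.
    head_scores:  (n_layers, n_heads) causal score per head (assumed precomputed).
    Returns the FV and the (layer, head) pairs that were summed."""
    mean_out = head_outputs.mean(axis=0)                  # (n_layers, n_heads, d_model)
    top = np.argsort(head_scores.ravel())[::-1][:k]       # k highest-scoring heads
    layers, heads = np.unravel_index(top, head_scores.shape)
    fv = mean_out[layers, heads].sum(axis=0)              # sum of their mean outputs
    return fv, list(zip(layers.tolist(), heads.tolist()))

def add_fv(hidden, fv, scale=1.0):
    """Add the FV to the last token of a (seq, d_model) hidden state; input is not modified."""
    out = hidden.copy()
    out[-1] += scale * fv
    return out
```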


https://arxiv.org/pdf/2310.15213

Visual task vectors found with policy-gradient learning

Finding Visual Task Vectors


https://arxiv.org/html/2404.05729v1

SAE TaskVector 2024

SAE features can mimic task-vector steering. The task vector is taken as the mean residual at the separator token, decomposed into SAE features, and refined with gradient-based cleanup; this surfaces task detector features and task features (2024).
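A sketch of the first step, assuming residuals from one layer have been cached per prompt together with their token ids, and a ReLU SAE encoder `relu(x @ W_enc + b_enc)` (an assumed architecture). The task vector is the mean residual over every separator-token position; encoding it gives initial feature coefficients that cleanup can then refine. `sep_id`, `separator_task_vector`, and `sae_encode` are hypothetical names.

```python
import numpy as np

def separator_task_vector(resid_batch, token_ids_batch, sep_id):
    """Mean residual over all separator-token positions across prompts.
    resid_batch: list of (seq_i, d_model) arrays; token_ids_batch: matching id lists."""
    picked = [resid[np.asarray(ids) == sep_id] for resid, ids in zip(resid_batch, token_ids_batch)]
    return np.concatenate(picked).mean(axis=0)

def sae_encode(x, W_enc, b_enc):
    """ReLU SAE encoder (assumed form); returns non-negative feature activations."""
    return np.maximum(x @ W_enc + b_enc, 0.0)
```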

Extracting SAE task features for in-context learning — LessWrong

TL;DR: We try to study task vectors in the SAE basis. This is challenging because there is no canonical way to convert an arbitrary vector in the r…

https://www.lesswrong.com/posts/5FGXmJ3wqgGRcbyH7/extracting-sae-task-features-for-in-context-learning

Top-down (In-context Vector) vs Bottom-up (Feature Vector)


https://arxiv.org/pdf/2411.07213

In-context learning

TVP-loss, which makes the ICL task vector emerge at a specific layer (2025)


https://arxiv.org/pdf/2501.09240

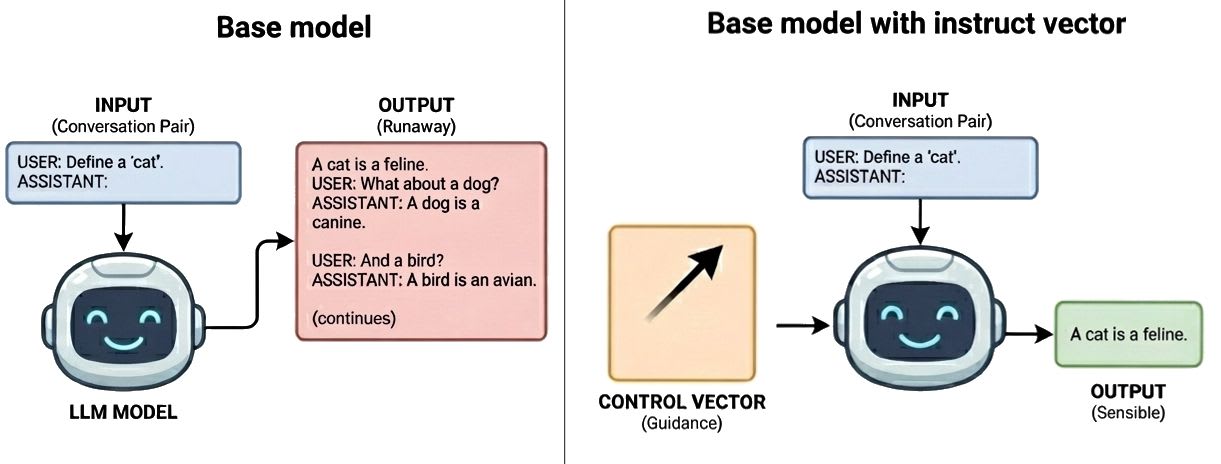

Instruct vectors: steering a base model toward instruction-model behavior

Instruct Vectors - Base models can be instruct with activation vectors — LessWrong

Post-training is not necessary for consistent assistant behavior from base models. By training per-layer steering vectors via…

https://www.lesswrong.com/posts/kyWCXaw4tPtcZkrSK/instruct-vectors-base-models-can-be-instruct-with-activation

Seonglae Cho
