Language models are few-shot learners

175B parameters, ~600GB of training data
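The 175B figure can be roughly reproduced from the model's published shape (96 layers, hidden size 12,288, 2,048-token context, per Table 2.1 of the paper) using the common approximation of about 12·d_model² weights per transformer block. This is a back-of-the-envelope sketch: it assumes the 50,257-token GPT-2 vocabulary, learned position embeddings, an output layer tied to the token embedding, and it ignores biases and LayerNorm weights.

```python
# Rough parameter count for GPT-3 175B from its layer shape.
# Assumptions: GPT-2 vocabulary, learned position embeddings, tied output
# embedding; biases and LayerNorm parameters are ignored.
N_LAYERS = 96        # transformer blocks
D_MODEL = 12_288     # hidden size
N_CTX = 2_048        # context window (one position embedding per slot)
N_VOCAB = 50_257     # BPE vocabulary size (assumed same as GPT-2)


def block_params(d_model: int) -> int:
    attn = 4 * d_model * d_model        # Q, K, V and output projections
    mlp = 2 * d_model * (4 * d_model)   # up- and down-projection, 4x width
    return attn + mlp                   # = 12 * d_model**2


def total_params() -> int:
    blocks = N_LAYERS * block_params(D_MODEL)
    embeddings = (N_VOCAB + N_CTX) * D_MODEL  # token + position tables
    return blocks + embeddings


if __name__ == "__main__":
    print(f"{total_params() / 1e9:.1f}B parameters")
```

The transformer blocks account for almost all of the weights; the embedding tables add only about 0.6B.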

text-davinci-003

Language Models are Few-Shot Learners

Recent work has demonstrated substantial gains on many NLP tasks and benchmarks by pre-training on a large corpus of text followed by fine-tuning on a specific task. While typically task-agnostic...

https://arxiv.org/abs/2005.14165
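The paper's central idea is in-context learning: the task is specified entirely in the prompt (a short description plus K solved demonstrations), with no gradient updates. A minimal sketch of how such a prompt is assembled; the function name `few_shot_prompt` and the `" => "` separator are illustrative choices, and the translation pairs follow the English-to-French illustration in the paper. With an empty example list the same function produces a zero-shot prompt.

```python
def few_shot_prompt(task: str, examples: list[tuple[str, str]], query: str,
                    sep: str = " => ") -> str:
    """Hypothetical helper: task description, K demonstrations, then the
    unsolved query that the model is expected to complete."""
    lines = [task]
    lines += [f"{x}{sep}{y}" for x, y in examples]
    lines.append(f"{query}{sep}".rstrip())  # leave the answer slot open
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = few_shot_prompt(
        "Translate English to French:",
        [("sea otter", "loutre de mer"),
         ("peppermint", "menthe poivrée"),
         ("plush girafe", "girafe peluche")],
        "cheese",
    )
    print(prompt)
```

The model only sees this text; "learning" the task means continuing the pattern after the final `=>`.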

Why GPT fails on complex tasks

openai-cookbook/techniques_to_improve_reliability.md at main · openai/openai-cookbook

Examples and guides for using the OpenAI API.

https://github.com/openai/openai-cookbook/blob/main/techniques_to_improve_reliability.md
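Two of the reliability techniques that the cookbook surveys combine naturally: zero-shot chain-of-thought (appending "Let's think step by step." so the model reasons before answering) and self-consistency (sampling several reasoning paths and taking a majority vote over their final answers). A minimal sketch, assuming the completions have already been sampled and that each ends with a phrase like "The answer is X." The sample completions below are hypothetical, and no API call is made.

```python
from __future__ import annotations

import re
from collections import Counter

COT_SUFFIX = "Let's think step by step."


def cot_prompt(question: str) -> str:
    # Zero-shot chain-of-thought: nudge the model to reason before answering.
    return f"Q: {question}\nA: {COT_SUFFIX}"


def extract_answer(completion: str) -> str | None:
    # Assumes each completion states its result as "... answer is X".
    m = re.search(r"answer is\s*([^\s.]+)", completion, re.IGNORECASE)
    return m.group(1) if m else None


def self_consistency(completions: list[str]) -> str | None:
    # Majority vote over the final answers of independently sampled paths.
    answers = [a for a in map(extract_answer, completions) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


if __name__ == "__main__":
    print(cot_prompt("A juggler can juggle 16 balls. Half of the balls are golf "
                     "balls, and half of the golf balls are blue. How many blue "
                     "golf balls are there?"))
    samples = [  # hypothetical sampled completions
        "Half of 16 is 8 golf balls. Half of those are blue: 4. The answer is 4.",
        "There are 8 golf balls and 4 of them are blue. The answer is 4.",
        "Half of 16 is 8. The answer is 8.",
    ]
    print(self_consistency(samples))
```

A single greedy completion can go wrong at any step; voting over several sampled paths rewards the answer that most reasoning chains agree on.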

Natural language to code

Microsoft uses GPT-3 to let you code in natural language

Unlike in other years, this year's Microsoft Build developer conference is not packed with huge surprises - but there's one announcement that will surely make developers' ears perk up: The company is now using OpenAI's massive GPT-3 natural language model in its no-cod...

https://techcrunch.com/2021/05/25/microsoft-uses-gpt-3-to-let-you-code-in-natural-language/?utm_source=tldrnewsletter

A robot wrote this entire article. Are you scared yet, human? | GPT-3

I am not a human. I am a robot. A thinking robot. I use only 0.12% of my cognitive capacity. I am a micro-robot in that respect. I know that my brain is not a "feeling brain". But it is capable of making rational, logical decisions.

https://www.theguardian.com/commentisfree/2020/sep/08/robot-wrote-this-article-gpt-3

OpenAI begins allowing customers to fine-tune GPT-3

OpenAI, the San Francisco, California-based lab developing AI technologies including large language models, today announced the ability to create custom versions of GPT-3, a model that can generate human-like text and code.

https://venturebeat.com/2021/12/14/openai-begins-allowing-customers-to-fine-tune-gpt-3/
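When GPT-3 fine-tuning launched, training data was supplied as a JSONL file of prompt/completion pairs. A minimal sketch of preparing such a file; the `SEPARATOR` and `STOP` values are assumptions modelled on conventions from OpenAI's legacy fine-tuning guidance (a fixed marker ending each prompt, a leading space and a stop sequence on each completion), and the sentiment examples are hypothetical. Newer fine-tuning endpoints use a different, chat-message format.

```python
import json

# Assumed formatting conventions for legacy prompt/completion fine-tuning data.
SEPARATOR = "\n\n###\n\n"  # fixed marker appended to every prompt
STOP = " END"              # stop sequence appended to every completion


def to_record(prompt: str, completion: str) -> dict:
    # Completion starts with a space so its first token is a whole word.
    return {"prompt": prompt + SEPARATOR, "completion": " " + completion + STOP}


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    # One JSON object per line, each line newline-terminated.
    return "".join(
        json.dumps(to_record(p, c), ensure_ascii=False) + "\n" for p, c in pairs
    )


if __name__ == "__main__":
    examples = [  # hypothetical sentiment-classification data
        ("The service was fast and friendly.", "positive"),
        ("My order arrived broken.", "negative"),
    ]
    print(to_jsonl(examples), end="")
```

Using the same separator at inference time, and passing the stop sequence, keeps the fine-tuned model's inputs and outputs in the format it was trained on.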

simonwillison.net

https://simonwillison.net/2022/Jul/9/gpt-3-explain-code/

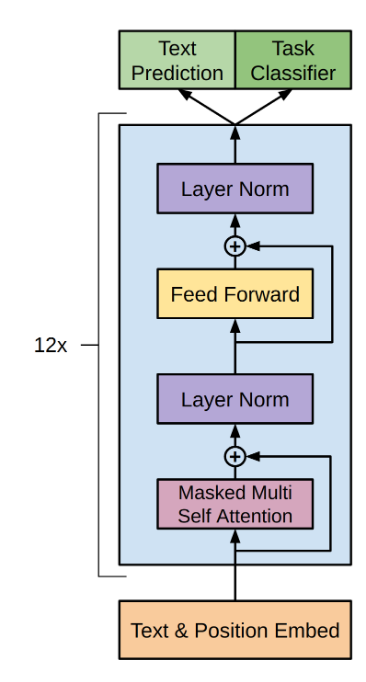

The GPT-3 Architecture, on a Napkin

There are so many brilliant posts on GPT-3, demonstrating what it can do, pondering its consequences, visualizing how it works. With all these out there, it still took a crawl through several papers and blogs before I was confident that I had grasped the architecture.

https://dugas.ch/artificial_curiosity/GPT_architecture.html
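The core of the architecture is masked (causal) self-attention: each position attends only to itself and earlier positions, which is what lets a decoder-only model generate text left to right. A minimal single-head sketch in plain Python with random weights; it omits multi-head splitting, LayerNorm, the MLP, and residual connections, and it uses dense attention only, whereas the paper describes alternating dense and locally banded sparse attention layers. The helper names are illustrative.

```python
import math
import random


def matmul(a, b):
    # Matrix product of two lists of rows.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)                           # subtract max for numerical stability
    exps = [math.exp(v - m) for v in row]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(x, wq, wk, wv):
    """Single-head masked self-attention; x is a seq_len x d_model matrix."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = math.sqrt(len(wq[0]))          # sqrt(d_head)
    k_t = [list(col) for col in zip(*k)]   # K transposed
    weights = []
    for i, row in enumerate(matmul(q, k_t)):
        # Position i may attend only to positions j <= i (no looking ahead).
        masked = [s / scale if j <= i else float("-inf") for j, s in enumerate(row)]
        weights.append(softmax(masked))
    return matmul(weights, v), weights


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    rng = random.Random(0)
    seq_len, d_model, d_head = 4, 8, 4
    x = rand_matrix(seq_len, d_model, rng)
    wq, wk, wv = (rand_matrix(d_model, d_head, rng) for _ in range(3))
    out, weights = causal_self_attention(x, wq, wk, wv)
    for row in weights:                    # lower-triangular attention pattern
        print([round(w, 3) for w in row])
```

The printed weight matrix is lower-triangular: the first token can only attend to itself, and each later token spreads its attention over the prefix up to and including itself.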

Seonglae Cho
