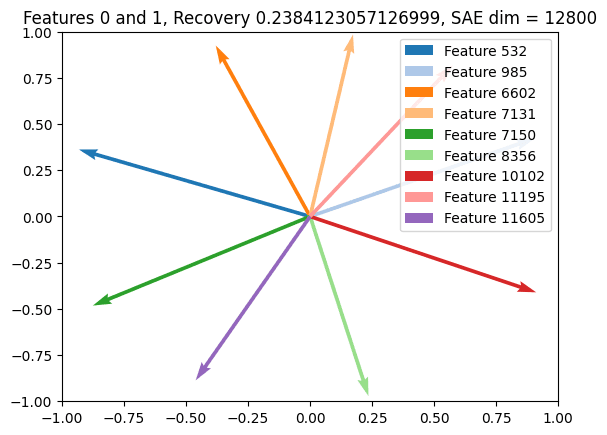

To learn multi-dimensional features, we partitioned the SAE latent space into groups and applied the L1 sparsity penalty across groups rather than across individual latents. Latents that activate together within the same group are therefore penalized less, which was meant to encourage each group to learn a multi-dimensional subspace. The Jaccard similarity between latents within a group was high, suggesting that grouped latents were semantically related. On real data, however, there was "insufficient meaningful progress" because of redundancy, fragmentation, and grouping failures.
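The penalty and the similarity check can be illustrated with a minimal sketch. It makes two assumptions that the note does not state. First, the group penalty is taken to be group-lasso style: the L2 norm is computed within each group and summed (L1) across groups. Second, Jaccard similarity is computed over the sets of tokens on which each latent fires. The function names and the contiguous-block grouping are hypothetical and do not come from the post.

```python
import math
from itertools import combinations


def group_sparsity_penalty(acts, group_size):
    """Assumed group-lasso penalty: L2 norm within each group, summed (L1) across groups.

    Hypothetical layout: consecutive blocks of `group_size` latents form one group.
    """
    if len(acts) % group_size != 0:
        raise ValueError("number of latents must be divisible by group_size")
    penalty = 0.0
    for start in range(0, len(acts), group_size):
        group = acts[start:start + group_size]
        penalty += math.sqrt(sum(a * a for a in group))  # L2 norm inside the group
    return penalty  # sum over groups = L1 across groups


def l1_penalty(acts):
    """Standard per-latent L1 penalty, for comparison."""
    return sum(abs(a) for a in acts)


def jaccard(active_a, active_b):
    """Jaccard similarity of the token sets on which two latents fire (assumed definition)."""
    a, b = set(active_a), set(active_b)
    if not a and not b:
        return 0.0  # convention chosen here: two never-firing latents count as dissimilar
    return len(a & b) / len(a | b)


def mean_within_group_jaccard(firing_sets, group_size):
    """Average pairwise Jaccard similarity over latents that share a group."""
    scores = []
    for start in range(0, len(firing_sets), group_size):
        group = firing_sets[start:start + group_size]
        for x, y in combinations(group, 2):
            scores.append(jaccard(x, y))
    return sum(scores) / len(scores) if scores else 0.0


if __name__ == "__main__":
    # Two latents co-active in the same group are cheaper than the same
    # activations spread across two groups.
    print(group_sparsity_penalty([3.0, 4.0, 0.0, 0.0], group_size=2))  # 5.0
    print(group_sparsity_penalty([3.0, 0.0, 4.0, 0.0], group_size=2))  # 7.0
    print(l1_penalty([3.0, 4.0, 0.0, 0.0]))  # 7.0
    print(mean_within_group_jaccard([{0, 1, 2}, {1, 2, 3}, {5}, {6}], group_size=2))  # 0.25
```

The example shows why within-group co-activation is encouraged under this assumed penalty. A per-latent L1 penalty charges 7 for activations of 3 and 4 regardless of placement. The group penalty charges 5 when both activations fall in one group and 7 when they are split across two groups.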

Negative Results on Group SAEs — LessWrong

Introduction. Soon after we released Not All Language Model Features Are One-Dimensionally Linear, I started working with @Logan Riggs and @Jannik Bri…

https://www.lesswrong.com/posts/jKKbRKuXNaLujnojw/untitled-draft-okbt

Seonglae Cho