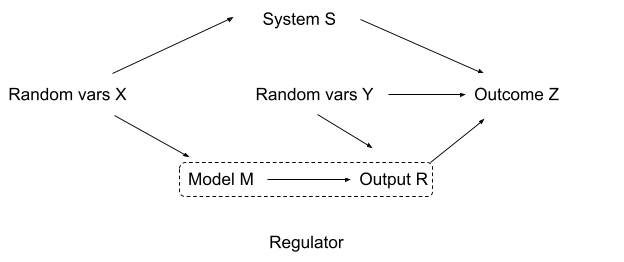

The Internal Interfaces Theory suggests that in any complex AI system with multiple specialized modules.

Internal Interfaces Are a High-Priority Interpretability Target — LessWrong

tl;dr: ML models, like all software, and like the NAH would predict, must consist of several specialized "modules". Such modules would form interface…

https://www.lesswrong.com/posts/nwLQt4e7bstCyPEXs/internal-interfaces-are-a-high-priority-interpretability

World-Model Interpretability Is All We Need — LessWrong

Summary, by sections: • 1. Perfect world-model interpretability seems both sufficient for robust alignment (via a decent variety of approaches) and…

https://www.lesswrong.com/posts/HaHcsrDSZ3ZC2b4fK/world-model-interpretability-is-all-we-need

Inter-layer Communication in Reversing Transformer

Models write into low-rank subspaces of the residual stream to represent features which are then read out by specific later layers, forming low-rank communication channels between layers.

The Inhibition Head influences the Mover Head in subsequent layers, guiding the model to reduce attention on irrelevant items when selecting the item to recall. This mechanism enables the model to suppress unnecessary elements and highlight the relevant ones during simple recall tasks. Therefore, The study found that the model autonomously learns structures that suppress or move specific items during the training process. This results contribute positive evidence that Intricate content-independent structure emerges as a result of self-supervised pretraining.

arxiv.org

https://arxiv.org/pdf/2406.09519

Seonglae Cho

Seonglae Cho