NAH

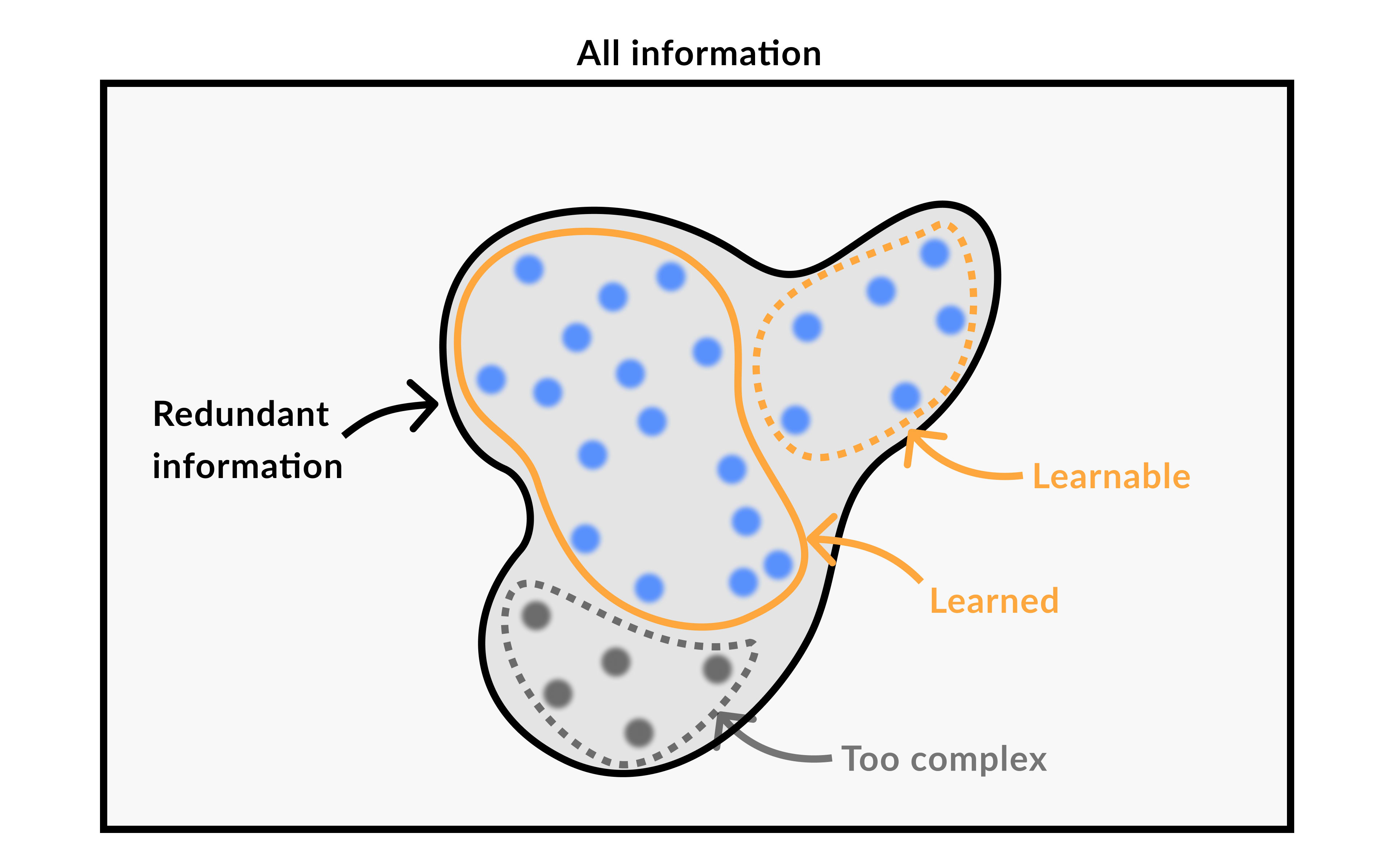

The Natural Abstraction Hypothesis (NAH) holds that the efficient abstractions an AI learns reflect inherent properties of the environment itself. It has three parts:

- Abstractability - The physical world can be abstracted: it can be summarized with information of much lower dimension than the full complexity of the system

- Human-Compatibility - These low-dimensional abstractions align with the abstractions humans use

- Convergence - A wide variety of cognitive architectures are likely to learn and use similar abstractions
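The abstractability claim can be illustrated with a toy example. The sketch below is only an assumption-laden illustration, not a method from any of the linked posts: it simulates a "system" with 200 observed variables that are secretly driven by 3 latent variables (the function names `simulate_system` and `explained_variance_ratio` and all parameter values are hypothetical), then checks how much of the variance a 3-dimensional summary captures.

```python
import numpy as np

def explained_variance_ratio(X):
    """Fraction of total variance captured by each principal component."""
    Xc = X - X.mean(axis=0)                  # center each observed variable
    s = np.linalg.svd(Xc, compute_uv=False)  # singular values, largest first
    var = s ** 2
    return var / var.sum()

def simulate_system(n_samples=500, n_observed=200, n_latent=3, noise=0.05, seed=0):
    """Toy 'world': many observed variables driven by a few latent ones (assumption)."""
    rng = np.random.default_rng(seed)
    latent = rng.normal(size=(n_samples, n_latent))
    mixing = rng.normal(size=(n_latent, n_observed))
    return latent @ mixing + noise * rng.normal(size=(n_samples, n_observed))

X = simulate_system()
ratio = explained_variance_ratio(X)
top3 = ratio[:3].sum()  # share of variance explained by a 3-dimensional summary
print(f"variance explained by 3 of {X.shape[1]} dimensions: {top3:.4f}")
```

In this toy setting almost all of the variance sits in the first three components, which is the sense in which a low-dimensional summary can stand in for a high-dimensional system. Real environments are not linear mixtures, so this is a picture of the claim rather than evidence for it.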

Currently, the leading approaches to world modeling are noise reduction (denoising) for visual processing and the attention mechanism for language processing.

If intelligence depends strongly on nature, in the sense of a posterior probability conditioned on the environment, then the form intelligence takes is determined with very high probability. This suggests that in a universe that acts as an entropy-generating, information-seeking machine, nature (in the sense of a fine-tuned universe) may have been tuned to produce specific forms of intelligence.

Multimodal Neuron from OpenAI (2021, Gabriel Goh)

In 2005, a letter published in Nature described human neurons responding to specific people, such as Jennifer Aniston or Halle Berry. The exciting thing was that they did so regardless of whether they were shown photographs, drawings, or even images of the person’s name. The neurons were multimodal. You are looking at the far end of the transformation from metric, visual shapes to conceptual information.

Multimodal Neurons in Artificial Neural Networks

We report the existence of multimodal neurons in artificial neural networks, similar to those found in the human brain.

https://distill.pub/2021/multimodal-neurons/

Multimodal neurons in artificial neural networks

We’ve discovered neurons in CLIP that respond to the same concept whether presented literally, symbolically, or conceptually. This may explain CLIP’s accuracy in classifying surprising visual renditions of concepts, and is also an important step toward understanding the associations and biases that CLIP and similar models learn.

https://openai.com/index/multimodal-neurons/

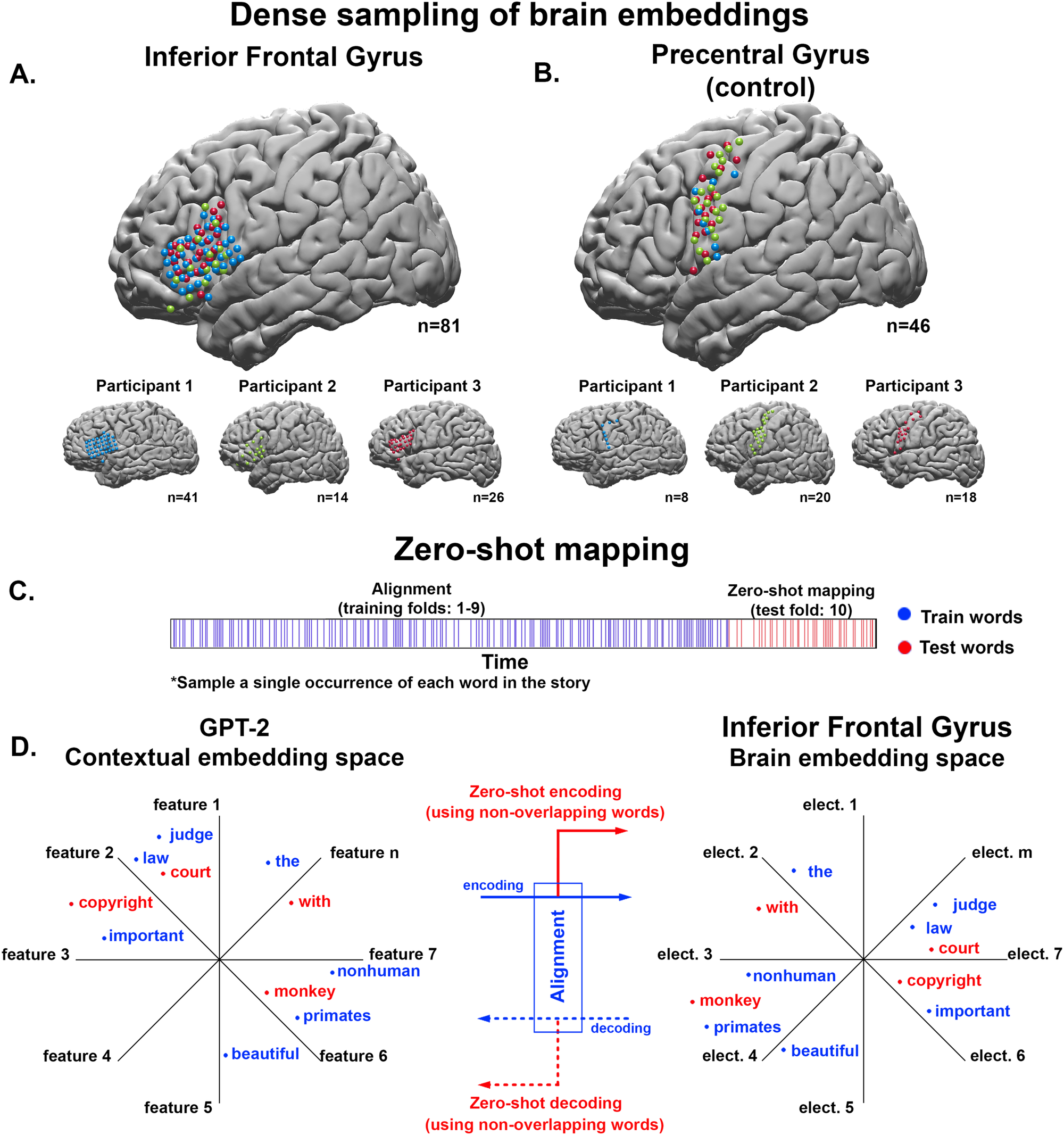

Neuron activations in the left prefrontal cortex respond to words in a way that resembles neuron activations in AI models (the paper actually compares against word embeddings)

Semantic encoding during language comprehension at single-cell resolution

https://www.nature.com/articles/s41586-024-07643-2

Alignment of brain embeddings and artificial contextual embeddings in natural language points to common geometric patterns

Nature Communications - Here, using neural activity patterns in the inferior frontal gyrus and large language modeling embeddings, the authors provide evidence for a common neural code for language...

https://www.nature.com/articles/s41467-024-46631-y
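One way to make "common geometric patterns" concrete is a representational similarity score. The sketch below uses linear CKA (centered kernel alignment), which is a swapped-in technique chosen for illustration and not necessarily the analysis used in the papers above. All data here is synthetic, and the names `linear_cka`, `system_a`, `system_b`, and `unrelated` are hypothetical.

```python
import numpy as np

def linear_cka(A, B):
    """Linear CKA between two representations of the same n stimuli.

    A has shape (n, d1) and B has shape (n, d2). The score lies in [0, 1]
    and is unchanged by rotating or uniformly rescaling either space.
    """
    A = A - A.mean(axis=0)
    B = B - B.mean(axis=0)
    cross = np.linalg.norm(A.T @ B, "fro") ** 2
    norm_a = np.linalg.norm(A.T @ A, "fro")
    norm_b = np.linalg.norm(B.T @ B, "fro")
    return cross / (norm_a * norm_b)

rng = np.random.default_rng(0)
shared = rng.normal(size=(300, 16))                    # 300 stimuli, 16-d code
rotation, _ = np.linalg.qr(rng.normal(size=(16, 16)))  # random orthogonal matrix

system_a = shared                          # e.g. one system's embeddings (synthetic)
system_b = shared @ rotation               # same geometry in a rotated basis
unrelated = rng.normal(size=(300, 16))     # independent representation

same_geometry = linear_cka(system_a, system_b)
different_geometry = linear_cka(system_a, unrelated)
print(f"rotated copy: {same_geometry:.3f}, unrelated: {different_geometry:.3f}")
```

The rotated copy scores essentially 1 even though no individual coordinate matches, while the unrelated representation scores much lower. That is the sense in which two systems can share a geometry without sharing neurons or axes.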

Neuromorphic computing

Neuromorphic computing is an approach to computing that is inspired by the structure and function of the human brain.[1][2] A neuromorphic computer/chip is any device that uses physical artificial neurons to do computations.[3][4] In recent times, the term neuromorphic has been used to describe analog, digital, mixed-mode analog/digital VLSI, and software systems that implement models of neural systems (for perception, motor control, or multisensory integration). Recent advances have even discovered ways to mimic the human nervous system through liquid solutions of chemical systems.[5]

https://en.wikipedia.org/wiki/Neuromorphic_computing

The Natural Abstraction Hypothesis: Implications and Evidence — LessWrong

This post was written under Evan Hubinger’s direct guidance and mentorship, as a part of the Stanford Existential Risks Institute ML Alignment Theory…

https://www.lesswrong.com/posts/Fut8dtFsBYRz8atFF/the-natural-abstraction-hypothesis-implications-and-evidence

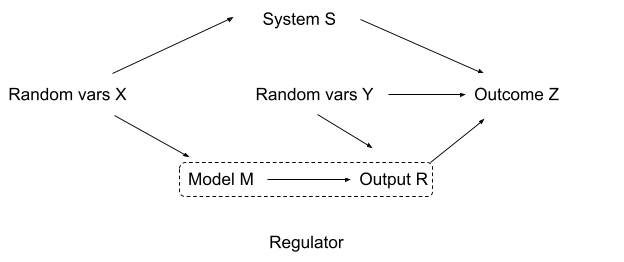

World model Interpretability with Internal Interface Theory

If the way AI interacts with various modules through internal interfaces is consistently formed, the possibility increases that humans can understand the format of these interfaces and interpret the entire world model at once.

World-Model Interpretability Is All We Need — LessWrong

Summary, by sections: • 1. Perfect world-model interpretability seems both sufficient for robust alignment (via a decent variety of approaches) and…

https://www.lesswrong.com/posts/HaHcsrDSZ3ZC2b4fK/world-model-interpretability-is-all-we-need

Key claims, theorems, and critiques

Natural Abstractions: Key claims, Theorems, and Critiques — LessWrong

TL;DR: We distill John Wentworth’s Natural Abstractions agenda by summarizing its key claims: the Natural Abstraction Hypothesis—many cognitive syste…

https://www.lesswrong.com/posts/gvzW46Z3BsaZsLc25/natural-abstractions-key-claims-theorems-and-critiques-1

Proposal (Wentworth, 2021)

Testing The Natural Abstraction Hypothesis: Project Intro — LessWrong

The natural abstraction hypothesis says that …

https://www.lesswrong.com/posts/cy3BhHrGinZCp3LXE/testing-the-natural-abstraction-hypothesis-project-intro

Emergent Computations in Artificial Neural Networks and Real Brains

The discovery of similar circuits in humans and AI also supports this claim

https://arxiv.org/pdf/2212.04938

Brains and algorithms partially converge in natural language processing

Deep learning algorithms trained to predict masked words from large amount of text have recently been shown to generate activations similar to...

https://www.researchgate.net/publication/358653162_Brains_and_algorithms_partially_converge_in_natural_language_processing

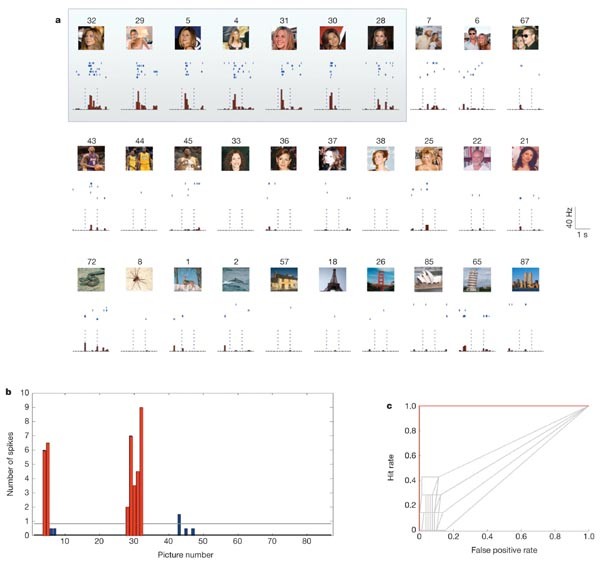

Grandmother cell

A 2005 Nature study showed that single neurons in the human medial temporal lobe (MTL) respond selectively and invariantly to the same person or object across different photo angles, lighting, and contexts. These results suggest an invariant, sparse and explicit code, which might be important in the transformation of complex visual percepts into long-term and more abstract memories.

Grandmother cell

The grandmother cell, sometimes called the "Jennifer Aniston neuron", is a hypothetical neuron that represents a complex but specific concept or object.[1] It activates when a person "sees, hears, or otherwise sensibly discriminates"[2] a specific entity, such as their grandmother. It contrasts with the concept of ensemble coding (or "coarse" coding), where the unique set of features characterizing the grandmother is detected as a particular activation pattern across an ensemble of neurons, rather than being detected by a specific "grandmother cell".[1]

https://en.wikipedia.org/wiki/Grandmother_cell

Invariant visual representation by single neurons in the human brain

Nature - It takes moments for the human brain to recognize a person or an object even if seen under very different conditions. This raises the question: can a single neuron respond selectively to a...

https://www.nature.com/articles/nature03687

Donald Trump always seems to have a dedicated neuron

Mechanistic Interpretability explained | Chris Olah and Lex Fridman

Lex Fridman Podcast full episode: https://www.youtube.com/watch?v=ugvHCXCOmm4

*GUEST BIO:*

Dario Amodei is the CEO of Anthropic, the company that created Claude. Amanda Askell is an AI researcher working on Claude's character and personality. Chris Olah is an AI researcher working on mechanistic interpretability.

https://www.youtube.com/watch?v=riniamTdUSo

Seonglae Cho
