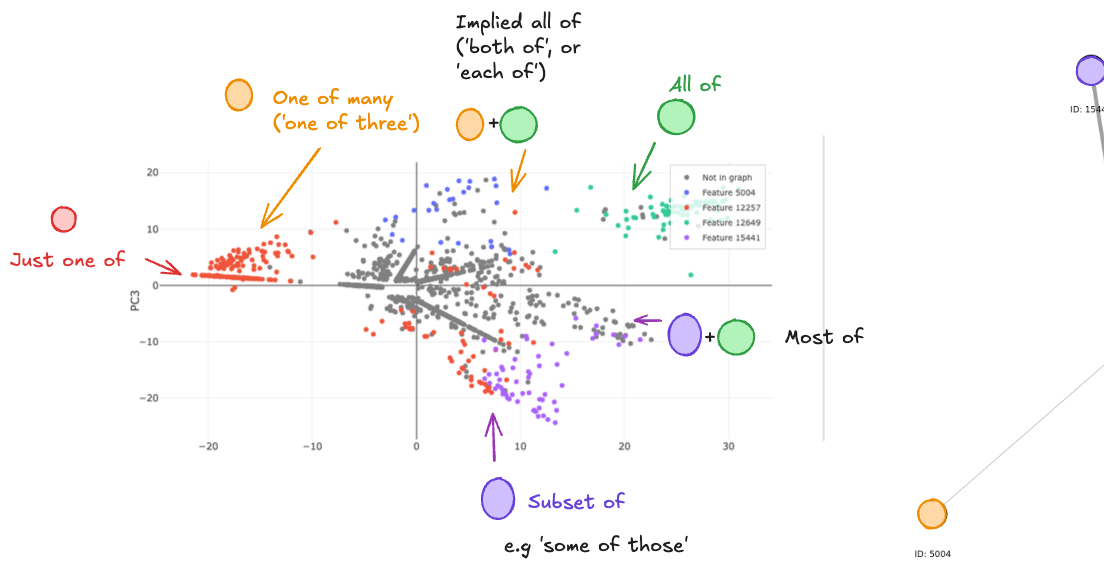

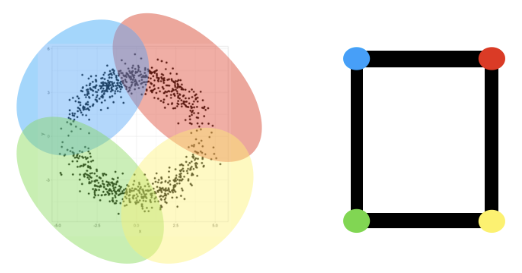

SAE latent vectors are not independent. Instead, they form clusters that activate together in predictable ways. Although the latents are functionally distinct, real dependencies exist between them, which makes their interactions and compositional structure important for interpretability. This effect is particularly evident in smaller SAEs, and these clusters can be analyzed effectively through L0 regularization.
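To make the idea of co-activating clusters concrete, here is a minimal sketch that assumes you already have a matrix of SAE latent activations (tokens × latents). It binarizes the activations, scores every latent pair by Jaccard similarity of the tokens they fire on, links pairs above an assumed threshold, and reports connected components as clusters. The function name `cooccurrence_clusters` and the `threshold` value are hypothetical illustrations, not the method from the linked post. The usage section also prints the mean L0 (average number of active latents per token), the sparsity quantity the note refers to.

```python
import numpy as np


def cooccurrence_clusters(acts: np.ndarray, threshold: float = 0.5) -> list[list[int]]:
    """Group SAE latents that frequently fire on the same tokens.

    acts: (n_tokens, n_latents) SAE latent activations (hypothetical input).
    threshold: minimum Jaccard similarity to link two latents (assumed value).
    Returns clusters (sorted lists of latent indices) with more than one member.
    """
    active = (acts > 0).astype(np.int64)            # 1 if the latent fired on the token
    counts = active.T @ active                       # counts[i, j] = tokens where i and j both fire
    freq = np.diag(counts)                           # freq[i] = tokens where i fires
    union = freq[:, None] + freq[None, :] - counts   # |A_i ∪ A_j|
    with np.errstate(divide="ignore", invalid="ignore"):
        jaccard = np.where(union > 0, counts / union, 0.0)
    np.fill_diagonal(jaccard, 0.0)                   # ignore self-similarity
    adj = jaccard >= threshold

    # Connected components of the thresholded co-occurrence graph (iterative DFS).
    n = adj.shape[0]
    seen = np.zeros(n, dtype=bool)
    clusters = []
    for start in range(n):
        if seen[start]:
            continue
        seen[start] = True
        stack, comp = [start], []
        while stack:
            node = stack.pop()
            comp.append(node)
            for nb in np.flatnonzero(adj[node]):
                if not seen[nb]:
                    seen[nb] = True
                    stack.append(nb)
        if len(comp) > 1:
            clusters.append(sorted(int(c) for c in comp))
    return clusters


if __name__ == "__main__":
    # Toy activations: latents 0 and 1 always fire together; 2 and 3 fire alone.
    acts = np.array([
        [1.0, 0.5, 0.0, 0.0],
        [0.8, 0.3, 0.0, 0.0],
        [0.0, 0.0, 0.0, 2.0],
        [0.0, 0.0, 0.7, 0.0],
    ])
    print(cooccurrence_clusters(acts))     # [[0, 1]]
    print((acts > 0).sum(axis=1).mean())   # mean L0 = 1.5
```

Jaccard is used here because it ignores tokens on which neither latent fires, which dominate in a sparse SAE; a raw co-firing count would instead favor latents that are simply active often.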

Compositionality and Ambiguity: Latent Co-occurrence and Interpretable Subspaces — LessWrong

Matthew A. Clarke, Hardik Bhatnagar and Joseph Bloom

https://www.lesswrong.com/posts/WNoqEivcCSg8gJe5h/compositionality-and-ambiguity-latent-co-occurrence-and

SAE Latent Cooccurrence Explorer

sae_cooccurrence

MClarke1991 • Updated 2025 Mar 25 10:47

Two features co-occur when they frequently activate on the same tokens, i.e., their activations are highly correlated.
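One common way to make "co-occur" precise, assumed here rather than taken from the linked post, is to let $A_i$ be the set of tokens on which latent $i$ has a nonzero activation $f_i(x_t)$ and score each pair by Jaccard similarity (the Pearson correlation of the raw activations is an alternative):

```latex
J(i, j) = \frac{|A_i \cap A_j|}{|A_i \cup A_j|}
        = \frac{|A_i \cap A_j|}{|A_i| + |A_j| - |A_i \cap A_j|},
\qquad A_i = \{\, t : f_i(x_t) > 0 \,\}
```

$J = 1$ when two latents always fire together and $J = 0$ when they never do. For example, if one latent fires on 10 tokens, another on 6, and both on 5, then $J = 5 / (10 + 6 - 5) = 5/11 \approx 0.45$.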

Feature Cooccurrence Explorer


https://feature-cooccurrence.streamlit.app/

Topological Data Analysis

Modeling SAE features as a graph shows related features developing across layers, with later layers involving more complex features.
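As a rough illustration of what a cross-layer feature graph could look like, the sketch below links features in layer $l$ to features in layer $l+1$ whenever their activations on the same tokens have a Pearson correlation above an assumed cutoff. This correlation-based construction is a hypothetical stand-in chosen for simplicity; it is not the topological method of the linked article, and `cross_layer_edges` and `min_corr` are illustrative names.

```python
import numpy as np


def cross_layer_edges(acts_lo: np.ndarray, acts_hi: np.ndarray,
                      min_corr: float = 0.5) -> list[tuple[int, int, float]]:
    """Link SAE features in adjacent layers by activation correlation.

    acts_lo: (n_tokens, n_lo) SAE activations at layer l (hypothetical input).
    acts_hi: (n_tokens, n_hi) SAE activations at layer l+1 on the same tokens.
    Returns (i, j, r) edges where Pearson r between lo-feature i and hi-feature j >= min_corr.
    """
    def standardize(x: np.ndarray) -> np.ndarray:
        x = x - x.mean(axis=0)
        std = x.std(axis=0)
        std[std == 0] = 1.0      # dead features stay all-zero, so their r is 0
        return x / std

    z_lo, z_hi = standardize(acts_lo), standardize(acts_hi)
    corr = z_lo.T @ z_hi / acts_lo.shape[0]   # (n_lo, n_hi) Pearson correlation matrix
    rows, cols = np.nonzero(corr >= min_corr)
    return [(int(i), int(j), float(corr[i, j])) for i, j in zip(rows, cols)]


if __name__ == "__main__":
    lo = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]])
    hi = np.array([[2.0, 0.0, 0.0], [0.0, 0.0, 3.0], [2.0, 0.0, 0.0], [0.0, 0.0, 3.0]])
    print(cross_layer_edges(lo, hi))   # [(0, 0, 1.0), (1, 2, 1.0)]
```

Chaining these edge lists over successive layer pairs yields a layered graph in which one could then look for features whose incoming edges come from several earlier features, a crude proxy for the "more complex" later-layer features mentioned above.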

Topological Data Analysis and Mechanistic Interpretability — LessWrong

This article was written in response to a post on LessWrong from the Apollo Research interpretability team. This post represents our initial attempts…

https://www.lesswrong.com/posts/6oF6pRr2FgjTmiHus/topological-data-analysis-and-mechanistic-interpretability

Seonglae Cho
